An Analysis of RF Transfer Learning Behavior Using Synthetic Data

Oct 03, 2022

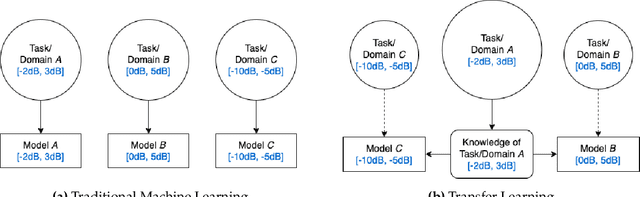

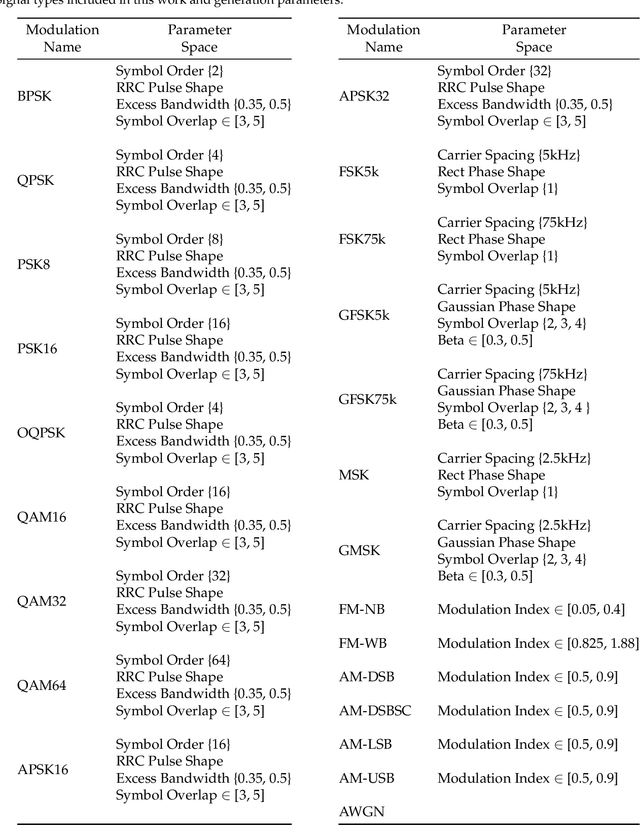

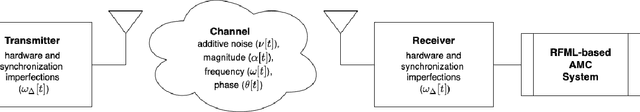

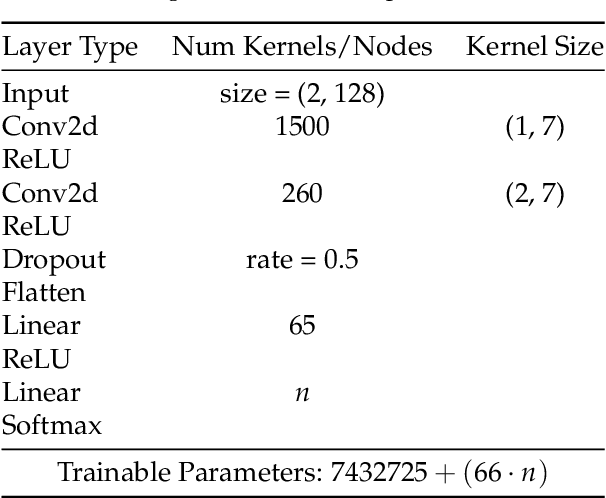

Transfer learning (TL) techniques, which leverage prior knowledge gained from data with different distributions to achieve higher performance and reduced training time, are often used in computer vision (CV) and natural language processing (NLP), but have yet to be fully utilized in the field of radio frequency machine learning (RFML). This work systematically evaluates radio frequency (RF) TL behavior by examining how the training domain and task, characterized by the transmitter/receiver hardware and channel environment, impact RF TL performance for an example automatic modulation classification (AMC) use case. Through exhaustive experimentation using carefully curated synthetic datasets with varying signal types, signal-to-noise ratios (SNRs), and frequency offsets (FOs), generalized conclusions are drawn regarding how best to use RF TL techniques for domain adaptation and sequential learning. Consistent with trends identified in other modalities, the results show that RF TL performance is highly dependent on the similarity between the source and target domains/tasks. The results also characterize the impacts of channel environment, hardware variations, and domain/task difficulty on RF TL performance, and compare RF TL performance under head re-training and model fine-tuning.
