An Adaptive Device-Edge Co-Inference Framework Based on Soft Actor-Critic


Jan 09, 2022

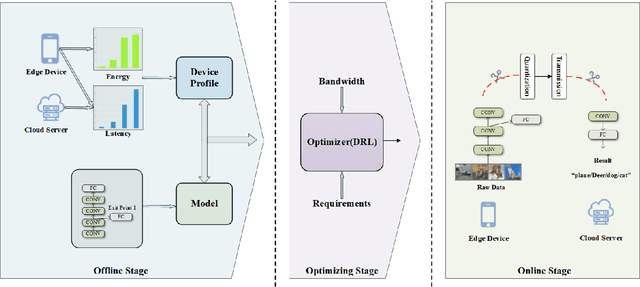

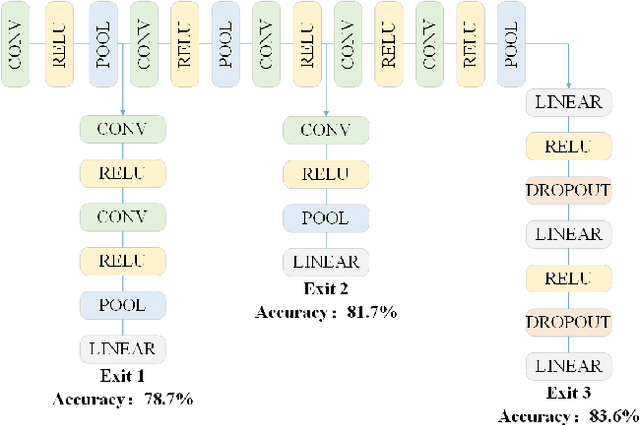

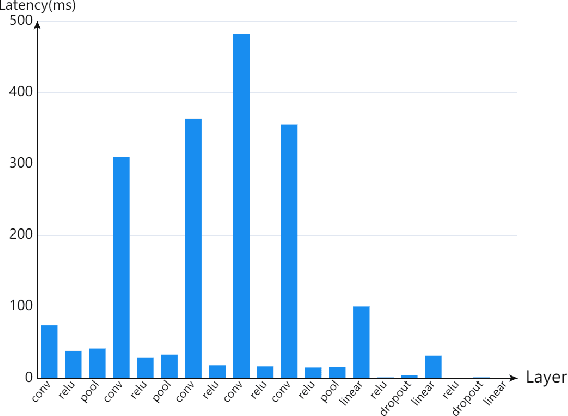

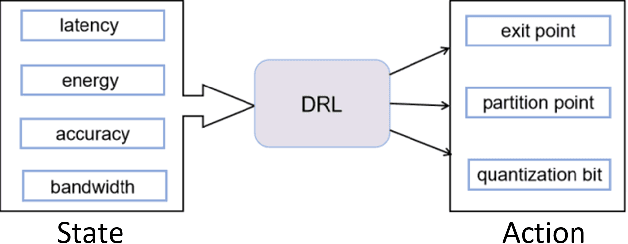

Deep neural networks (DNNs) have become prominent in fields such as computer vision (CV) and natural language processing (NLP) thanks to their superior feature extraction performance. However, their large parameter counts and heavy computation limit execution efficiency, especially on Internet of Things (IoT) devices. Cloud-only or edge-only inference puts heavy pressure on the uplink, while device-only inference imposes a computational load that the device cannot afford. We instead focus on collaborative computation between the device and the edge for DNN models, which can strike a good balance between communication load and inference accuracy. Specifically, we propose a systematic on-demand co-inference framework that exploits a multi-branch structure: a pre-trained AlexNet is right-sized through *early exit* and partitioned at an intermediate DNN layer, and integer quantization is applied to further reduce the number of transmitted bits. We then develop a new deep reinforcement learning (DRL) optimizer, Soft Actor-Critic for discrete actions (SAC-d), which generates the *exit point*, *partition point*, and *compressing bits* through soft policy iteration. With a latency- and accuracy-aware reward design, the optimizer adapts well to complex environments such as dynamic wireless channels and arbitrary CPU processing, and is capable of supporting 5G URLLC. Real-world experiments on a Raspberry Pi 4 and a PC show the superior performance of the proposed solution.
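The three decisions described above (exit point, partition point, and compressing bits) can be illustrated with a minimal Python sketch. It is not the authors' implementation. The min-max quantizer, the linear reward `accuracy - weight * latency`, the `layers_per_exit` mapping, and all function names are assumptions made for illustration, since the abstract does not specify them.

```python
from itertools import product


def quantize(values, bits):
    """Uniform min-max quantization of floats to unsigned `bits`-bit integers.

    Assumed scheme: the abstract only says that integer quantization is used.
    """
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Recover approximate floats on the edge side."""
    return [lo + c * scale for c in codes]


def transmission_latency(num_values, bits, rate_bps):
    """Seconds needed to upload a quantized feature map at `rate_bps` bits/s."""
    return num_values * bits / rate_bps


def reward(accuracy, latency, weight=1.0):
    """Hypothetical latency- and accuracy-aware reward (linear trade-off)."""
    return accuracy - weight * latency


def action_space(layers_per_exit, bit_choices):
    """Enumerate discrete (exit_point, partition_point, bits) actions.

    `layers_per_exit` is a hypothetical map from an exit branch to its layer
    count. Partition point 0 means edge-only and n means device-only.
    """
    actions = []
    for exit_point, n_layers in layers_per_exit.items():
        for partition, bits in product(range(n_layers + 1), bit_choices):
            actions.append((exit_point, partition, bits))
    return actions


if __name__ == "__main__":
    feature = [0.0, 0.25, 0.5, 1.0]
    codes, lo, scale = quantize(feature, bits=8)
    print(codes, dequantize(codes, lo, scale))
    print(transmission_latency(num_values=1000, bits=8, rate_bps=1_000_000))
    print(len(action_space({1: 3, 2: 5}, bit_choices=(2, 4, 8))))
```

A discrete-action optimizer such as SAC-d would pick one tuple from `action_space` per inference request and observe the resulting reward. Fewer bits shorten the upload but increase quantization error, which is the trade-off the reward is meant to capture.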
