Amplifying the Imitation Effect for Reinforcement Learning of UCAV's Mission Execution

Jan 17, 2019

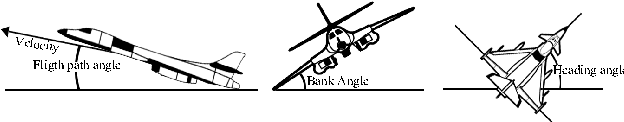

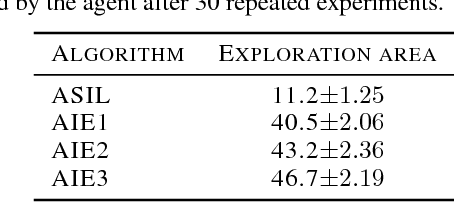

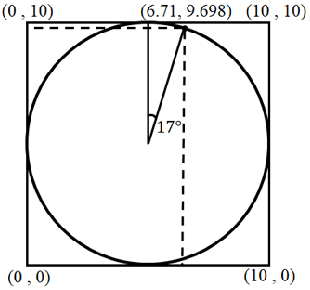

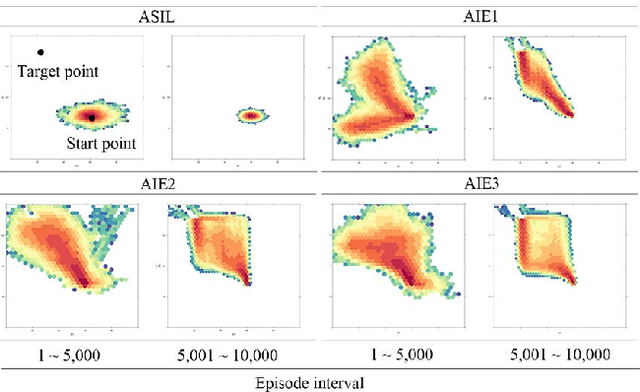

This paper proposes a new reinforcement learning (RL) algorithm that enhances exploration by amplifying the imitation effect (AIE). The algorithm combines self-imitation learning (SIL) with random network distillation (RND). We argue that these two algorithms complement each other and that combining them amplifies the imitation effect for exploration. In addition, the approach adds an intrinsic penalty reward to states that the RL agent visits frequently, and it uses replay memory to learn the feature state when computing the exploration bonus. Together, these mechanisms drive deep exploration and lead the agent to deviate from its currently converged policy. We verified the exploration performance of the algorithm through experiments in a two-dimensional grid environment. We also applied the algorithm to a simulated environment of unmanned combat aerial vehicle (UCAV) mission execution, where the empirical results show that AIE is very effective at finding the UCAV's shortest flight path that avoids enemy missiles.
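The abstract combines two standard building blocks: an RND exploration bonus, whose predictor is trained on states drawn from replay memory, and SIL's clipped advantage. Below is a minimal NumPy sketch of each, written under stated assumptions. The networks are linear rather than deep, grid cells are one-hot encoded, and the names `RNDBonus`, `sil_advantage`, and `train_predictor_from_replay` are hypothetical. This is an illustration of the generic techniques, not the authors' AIE implementation. The intrinsic penalty term is left out because the abstract does not specify its form.

```python
import collections

import numpy as np


class RNDBonus:
    """Random network distillation with linear networks (simplifying assumption)."""

    def __init__(self, obs_dim, feat_dim=16, lr=1.0, seed=0):
        rng = np.random.default_rng(seed)
        # Fixed, randomly initialized target network: never updated.
        self.w_target = rng.normal(size=(obs_dim, feat_dim))
        # Predictor network: trained to reproduce the target's features.
        self.w_pred = np.zeros((obs_dim, feat_dim))
        self.lr = lr

    def bonus(self, obs):
        """Per-state exploration bonus: mean squared prediction error."""
        err = obs @ self.w_pred - obs @ self.w_target
        return np.mean(err ** 2, axis=1)

    def update(self, obs):
        """One gradient-descent step on the batch-mean squared error."""
        err = obs @ self.w_pred - obs @ self.w_target
        grad = 2.0 * obs.T @ err / err.size
        self.w_pred -= self.lr * grad


def sil_advantage(returns, values):
    """SIL imitates only transitions whose return exceeds V(s): (R - V)+."""
    return np.maximum(returns - values, 0.0)


def train_predictor_from_replay(rnd, replay, batch_size, steps, rng):
    """Fit the RND predictor on states sampled uniformly from replay memory."""
    states = np.array(replay)
    for _ in range(steps):
        idx = rng.integers(0, len(states), size=batch_size)
        rnd.update(states[idx])


# Hypothetical 5x5 grid with one-hot states; the agent keeps revisiting cell 0.
rng = np.random.default_rng(1)
n_cells = 25
eye = np.eye(n_cells)
replay = collections.deque(maxlen=1000)
for _ in range(200):
    replay.append(eye[0])

rnd = RNDBonus(obs_dim=n_cells)
before = rnd.bonus(eye[[0, 24]])  # bonus for [visited cell, unvisited cell]
train_predictor_from_replay(rnd, replay, batch_size=32, steps=50, rng=rng)
after = rnd.bonus(eye[[0, 24]])
print("bonus before:", before, "after:", after)
print("SIL advantage:", sil_advantage(np.array([1.0, 0.5]), np.array([0.2, 0.9])))
```

After the predictor is trained on replayed states, the bonus for the repeatedly visited cell collapses toward zero while the unvisited cell keeps its original bonus. This is the novelty signal that steers an RND-based agent toward unexplored states. The SIL term, in turn, contributes a learning signal only when an observed return beats the current value estimate.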