AMBER -- Advanced SegFormer for Multi-Band Image Segmentation: an application to Hyperspectral Imaging

Sep 14, 2024

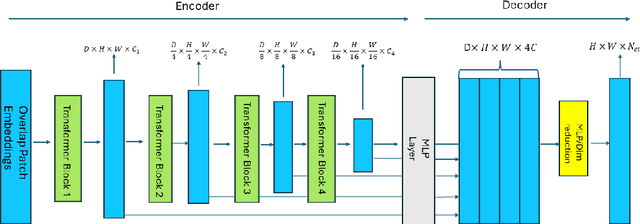

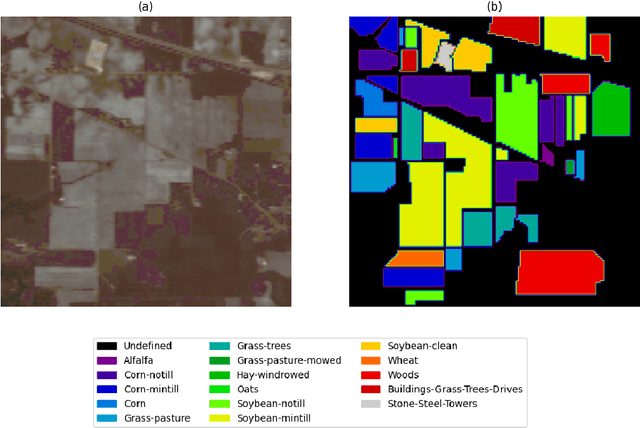

Deep learning has revolutionized the field of hyperspectral image (HSI) analysis, enabling the extraction of complex and hierarchical features. While convolutional neural networks (CNNs) have been the backbone of HSI classification, their limitations in capturing global contextual features have led to the exploration of Vision Transformers (ViTs). This paper introduces AMBER, an advanced SegFormer specifically designed for multi-band image segmentation. AMBER enhances the original SegFormer by incorporating three-dimensional convolutions to handle hyperspectral data. Our experiments, conducted on the Indian Pines, Pavia University, and PRISMA datasets, show that AMBER outperforms traditional CNN-based methods in terms of Overall Accuracy, Kappa coefficient, and Average Accuracy on the first two datasets, and achieves state-of-the-art performance on the PRISMA dataset.
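The three metrics named in the abstract can all be derived from a confusion matrix. The sketch below uses the standard textbook definitions of Overall Accuracy, Average Accuracy (mean per-class recall), and Cohen's Kappa; it is not taken from the AMBER code, and the function name `segmentation_metrics` and the example matrix are hypothetical, chosen only for illustration.

```python
def segmentation_metrics(confusion):
    """Return (OA, AA, kappa) for a square confusion matrix.

    confusion[i][j] is the number of pixels whose true class is i
    and whose predicted class is j (an assumed layout).
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    diag = [confusion[i][i] for i in range(n_classes)]
    row_sums = [sum(row) for row in confusion]  # pixels per true class
    col_sums = [sum(confusion[i][j] for i in range(n_classes))
                for j in range(n_classes)]      # pixels per predicted class

    # Overall Accuracy: fraction of all pixels classified correctly.
    oa = sum(diag) / total

    # Average Accuracy: mean of per-class recall, skipping empty classes.
    recalls = [diag[i] / row_sums[i] for i in range(n_classes) if row_sums[i] > 0]
    aa = sum(recalls) / len(recalls)

    # Cohen's Kappa: agreement corrected for chance agreement p_e.
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / (total ** 2)
    # Kappa is undefined when p_e == 1; this sketch returns 0.0 in that case.
    kappa = (oa - p_e) / (1 - p_e) if p_e != 1 else 0.0

    return oa, aa, kappa


# Made-up two-class example, not data from the paper.
example = [[50, 10],
           [5, 35]]
oa, aa, kappa = segmentation_metrics(example)
print(f"OA={oa:.4f}  AA={aa:.4f}  kappa={kappa:.4f}")
```

Average Accuracy weights every class equally, so it falls more than Overall Accuracy when a rare class is poorly segmented. This is why the two are usually reported together on class-imbalanced scenes.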
