Alternative models: Critical examination of disability definitions in the development of artificial intelligence technologies

Jun 16, 2022

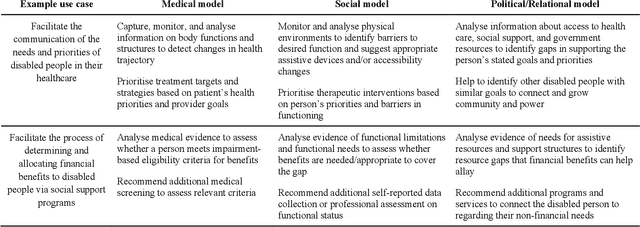

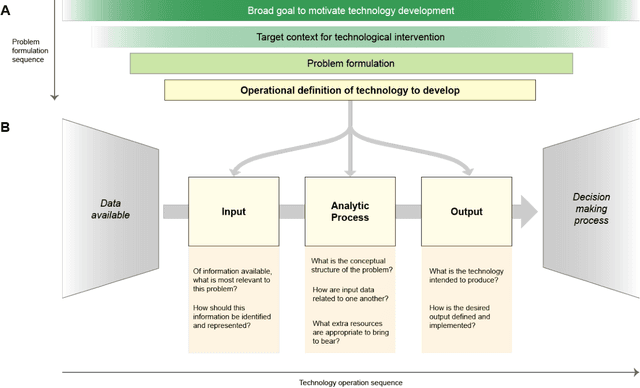

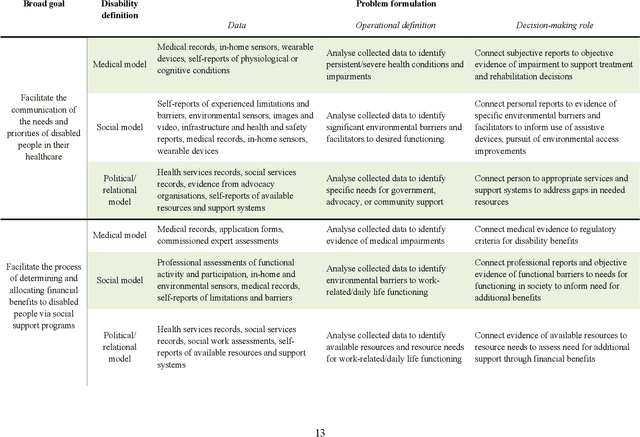

Disabled people are subject to a wide variety of complex decision-making processes in diverse areas such as healthcare, employment, and government policy. These contexts, which are already often opaque to the people they affect and lack adequate representation of disabled perspectives, are rapidly adopting artificial intelligence (AI) technologies for data analytics to inform decision making, creating an increased risk of harm due to inappropriate or inequitable algorithms. This article presents a framework for critically examining AI data analytics technologies through a disability lens and investigates how the definition of disability chosen by the designers of an AI technology affects its impact on disabled subjects of analysis. We consider three conceptual models of disability (the medical model, the social model, and the relational model) and show how AI technologies designed under each of these models differ so significantly as to be incompatible with and contradictory to one another. Through a discussion of common use cases for AI analytics in healthcare and government disability benefits, we illustrate specific considerations and decision points in the technology design process that affect power dynamics and inclusion in these settings and help determine their orientation towards marginalisation or support. The framework we present can serve as a foundation for in-depth critical examination of AI technologies and the development of a design praxis for disability-related AI analytics.
