AI Forensics: Did the Artificial Intelligence System Do It? Why?

May 27, 2020

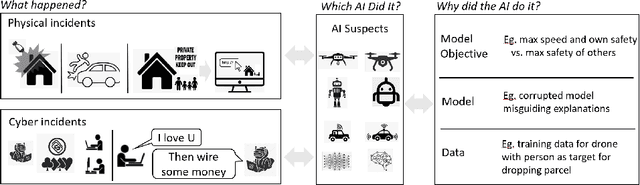

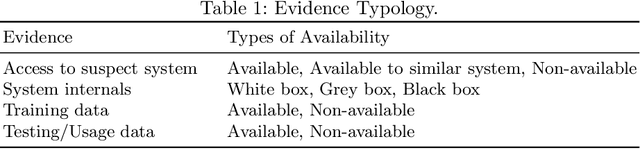

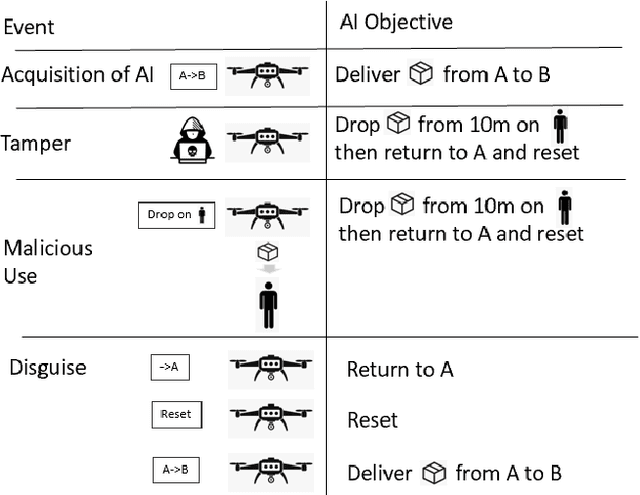

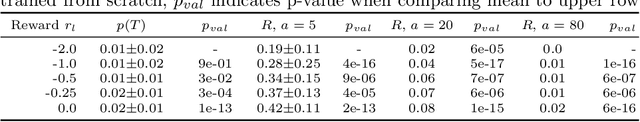

AI systems make decisions that affect our daily lives in an increasingly autonomous manner. Their actions might cause accidents or harm or, more generally, violate regulations, whether intentionally or not. AI systems might therefore be considered suspects for various events, and it is essential to relate particular events to an AI, its owner, and its creator. Given a multitude of AI systems from multiple manufacturers, potentially altered by their owners or changing through self-learning, this seems non-trivial. This paper discusses how to identify the AI systems responsible for incidents as well as their motives, which might be "malicious by design". In addition to a conceptualization, we conduct two case studies based on reinforcement learning and convolutional neural networks to illustrate our proposed methods and challenges. Our cases illustrate that "catching AI systems" often seems far from trivial and requires extensive expertise in machine learning. Legislative measures that mandate the collection of information during the operation of AI systems, as well as means to uniquely identify systems, might help address the problem.
