Aggregating Gradients in Encoded Domain for Federated Learning

Jun 09, 2022

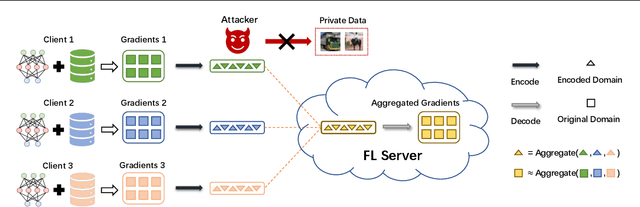

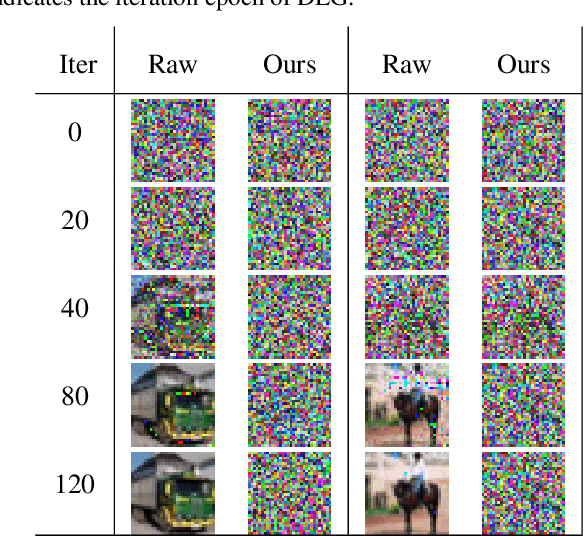

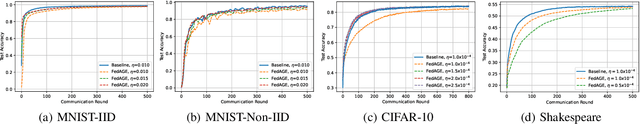

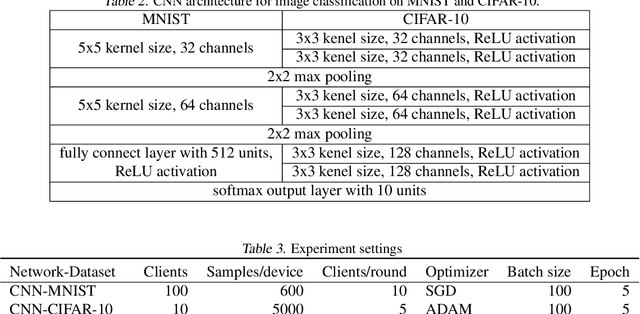

Malicious attackers and an honest-but-curious server can steal private client data from the gradients uploaded in federated learning. Although current protection methods (e.g., additively homomorphic cryptosystems) can guarantee the security of a federated learning system, they incur additional computation and communication costs. To mitigate these costs, we propose the FedAGE framework, which enables the server to aggregate gradients in an encoded domain without accessing the raw gradients of any single client. Thus, FedAGE prevents the curious server from stealing gradients while maintaining the same prediction performance and incurring no additional communication cost. Furthermore, we theoretically prove that the proposed encoding-decoding framework is a Gaussian mechanism for differential privacy. Finally, we evaluate FedAGE under several federated settings, and the results demonstrate the efficacy of the proposed framework.
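For readers unfamiliar with the term, the sketch below illustrates what a Gaussian mechanism looks like when applied to gradient aggregation. It is not FedAGE's encoder-decoder, which the abstract does not specify; it is the textbook construction, assuming per-client L2 clipping and the classic noise calibration that holds for 0 < epsilon < 1. All function names and parameters (`gaussian_sigma`, `clip`, `noisy_average`, `max_norm`) are hypothetical and chosen only for illustration.

```python
import math
import random


def gaussian_sigma(sensitivity, epsilon, delta):
    """Noise scale of the classic Gaussian mechanism (assumes 0 < epsilon < 1)."""
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / epsilon


def clip(grad, max_norm):
    """Rescale a gradient so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in grad]


def noisy_average(client_grads, max_norm, epsilon, delta, rng):
    """Average clipped client gradients after adding Gaussian noise to their sum.

    With every gradient clipped to max_norm, adding or removing one client
    changes the sum by at most max_norm in L2 norm, so max_norm serves as
    the sensitivity of the sum.
    """
    clipped = [clip(g, max_norm) for g in client_grads]
    dim = len(clipped[0])
    sigma = gaussian_sigma(max_norm, epsilon, delta)
    total = [sum(g[i] for g in clipped) + rng.gauss(0.0, sigma) for i in range(dim)]
    return [t / len(clipped) for t in total]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[0.2, -0.1, 0.4], [0.1, 0.3, -0.2], [-0.3, 0.2, 0.1]]
    print(noisy_average(grads, max_norm=1.0, epsilon=0.5, delta=1e-5, rng=rng))
```

In this sketch the noise is added once to the clipped sum, so the released average is the only quantity that carries a privacy guarantee; how FedAGE distributes encoding and noise between clients and server is described in the paper itself.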
