AETomo-Net: A Novel Deep Learning Network for Tomographic SAR Imaging Based on Multi-dimensional Features

Sep 21, 2022

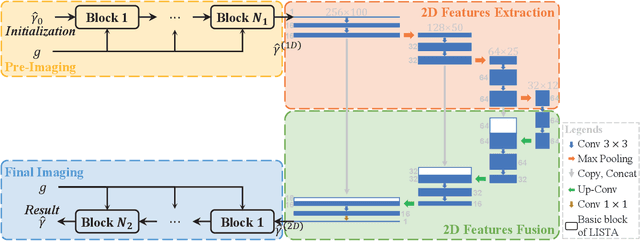

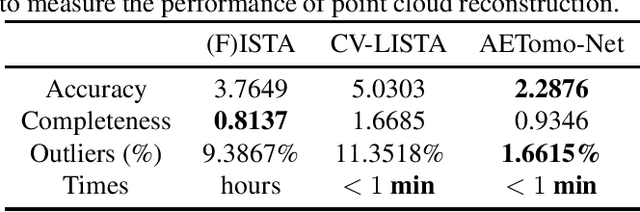

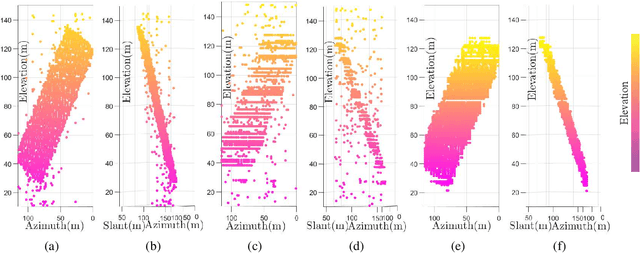

Tomographic synthetic aperture radar (TomoSAR) imaging algorithms based on deep learning can effectively reduce computational cost. Existing approaches reconstruct the elevation profile of each range-azimuth cell independently, as a one-dimensional problem, using a deep-unfolding network. However, because these methods are commonly sensitive to the signal sparsity level, they tend to produce defects such as fractures in continuous surfaces and an excessive number of outliers. To address these drawbacks, this paper proposes a novel imaging network, AETomo-Net, based on multi-dimensional features. By adding a U-Net-like structure, AETomo-Net reconstructs the scene one azimuth-elevation slice at a time and adds 2D feature extraction and fusion capabilities to the original deep-unrolling network. In this way, each azimuth-elevation slice is reconstructed with richer features, which improves the quality of the imaging results. Experiments show that the proposed method effectively remedies the above defects while maintaining imaging accuracy and computation speed compared with the traditional ISTA-based method and CV-LISTA.
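For context, the ISTA baseline named in the abstract can be sketched as follows. This is a minimal illustration of textbook complex-valued ISTA for a single range-azimuth cell, not the AETomo-Net architecture or the authors' code. The names `A` (an elevation steering matrix), `y` (the multi-baseline measurement vector), `lam`, and `n_iter` are assumptions introduced here for illustration. Deep-unfolding networks of the LISTA family typically turn a fixed number of these iterations into layers and learn the matrices and thresholds instead of fixing them.

```python
import numpy as np

def soft_threshold(x, tau):
    """Complex soft-thresholding: shrink each magnitude by tau, keep the phase."""
    mag = np.abs(x)
    # Guard the division so exact zeros do not produce NaN.
    scale = np.maximum(mag - tau, 0.0) / np.maximum(mag, 1e-12)
    return x * scale

def ista(A, y, lam=0.05, n_iter=200):
    """Approximately solve min_x 0.5*||A x - y||^2 + lam*||x||_1 with ISTA.

    A: (num_baselines, num_elevation_bins) complex matrix (hypothetical steering matrix)
    y: (num_baselines,) complex measurements for one range-azimuth cell
    """
    # Lipschitz constant of the data-term gradient: squared spectral norm of A.
    L = np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1], dtype=complex)
    for _ in range(n_iter):
        grad = A.conj().T @ (A @ x - y)              # gradient of the data term
        x = soft_threshold(x - grad / L, lam / L)    # proximal (shrinkage) step
    return x
```

Because each call handles one cell in isolation, neighboring cells share no information, which is the one-dimensional limitation the abstract describes and that slice-wise 2D feature extraction is meant to address.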
