Adversarial Training Generalizes Data-dependent Spectral Norm Regularization

Paper and Code

Jun 17, 2019

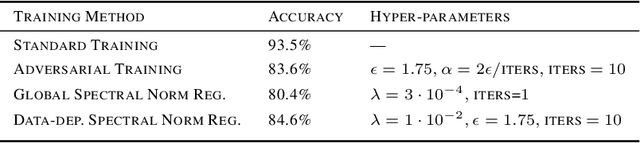

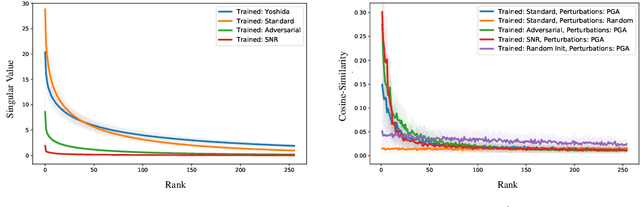

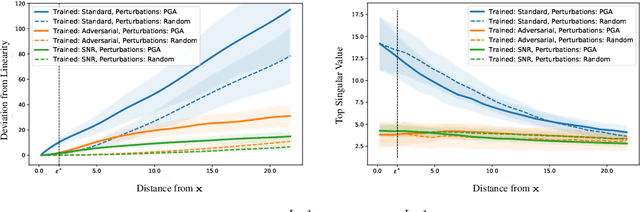

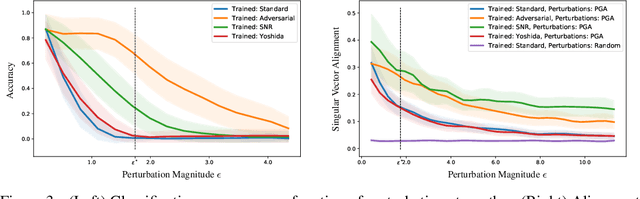

We establish a theoretical link between adversarial training and operator norm regularization for deep neural networks. Specifically, we show that adversarial training is a data-dependent generalization of spectral norm regularization. This intriguing connection provides fundamental insights into the origin of adversarial vulnerability and hints at novel ways to robustify and defend against adversarial attacks. We provide extensive empirical evidence to support our theoretical results.
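As a rough illustration of why adversarial perturbations and the spectral norm are related, consider the simplest possible case: a linear map f(x) = Wx. This is an assumption made here for illustration only; the paper itself addresses deep networks and a data-dependent operator norm. For a linear map, the ℓ2-bounded perturbation that changes the output the most points along the top right singular vector of W, and the size of that change is the perturbation budget times the spectral norm of W. The sketch below estimates both with power iteration. All function names and the example matrix are hypothetical.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix W (given as a list of rows) by a vector x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def transpose(W):
    """Return the transpose of W as a list of rows."""
    return [list(col) for col in zip(*W)]


def norm(x):
    """Euclidean (l2) norm of a vector."""
    return math.sqrt(sum(v * v for v in x))


def top_singular_pair(W, iters=500, seed=0):
    """Power iteration on W^T W.

    Returns an estimate of (spectral norm of W, top right singular vector).
    """
    rng = random.Random(seed)
    Wt = transpose(W)
    v = [rng.gauss(0.0, 1.0) for _ in range(len(W[0]))]
    n = norm(v)
    v = [c / n for c in v]
    for _ in range(iters):
        u = matvec(Wt, matvec(W, v))  # one step of v <- W^T W v
        n = norm(u)
        v = [c / n for c in u]        # renormalize to unit length
    return norm(matvec(W, v)), v


def worst_case_perturbation(W, eps, iters=500):
    """l2-bounded perturbation (norm eps) that maximizes ||W delta||."""
    sigma, v = top_singular_pair(W, iters)
    return [eps * c for c in v], sigma


# Hypothetical example: a diagonal map whose spectral norm is 3 by construction.
W = [[3.0, 0.0], [0.0, 1.0]]
eps = 0.1
delta, sigma = worst_case_perturbation(W, eps)
change = norm(matvec(W, delta))  # output change caused by the perturbation
```

In this linear setting, `change` matches `eps * sigma`, so penalizing the spectral norm and penalizing the worst-case output change amount to the same thing. For a nonlinear network the relevant linear map would depend on the input, which is where the data-dependent aspect described in the abstract comes in.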

View paper on

OpenReview
