Adversarial Sampling and Training for Semi-Supervised Information Retrieval

Paper and Code

Nov 09, 2018

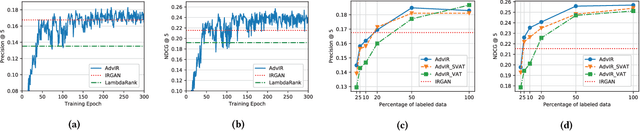

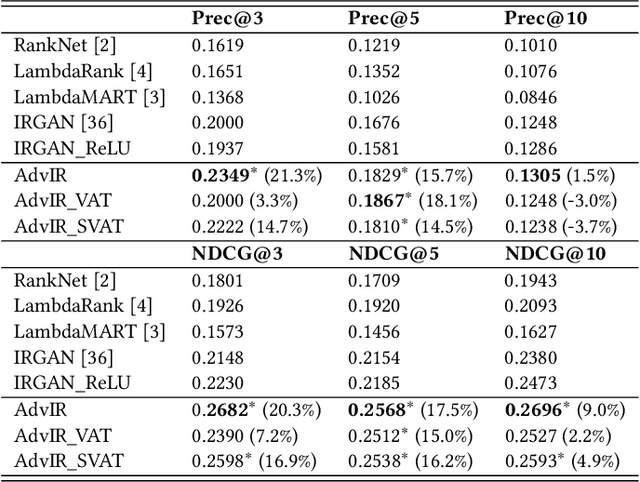

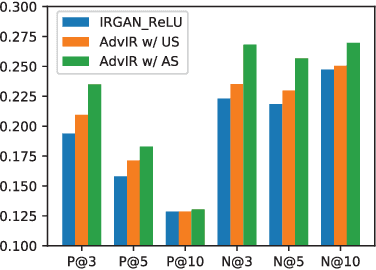

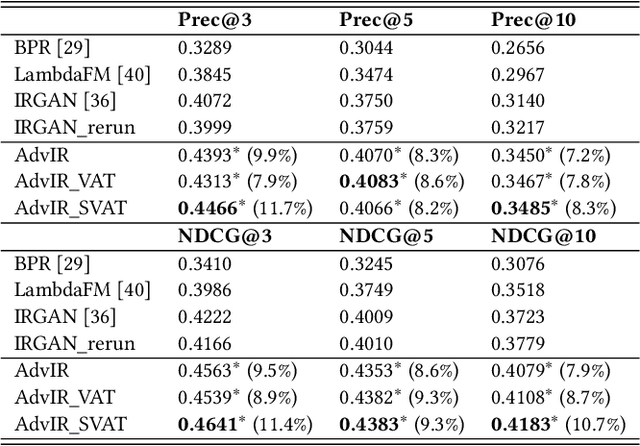

Modern ad-hoc retrieval models learned from implicit feedback generally have two problems. First, there are usually many more non-clicked documents than clicked documents, and many of the non-clicked documents are not informative. Second, modern ad-hoc retrieval models are vulnerable to adversarial examples because of their linear nature. To address both problems at once, we propose adversarial training methods that overcome these weaknesses. Our key idea is to combine adversarial training with adversarial sampling in order to obtain very difficult examples, which are informative and can attack the linear nature of the models. Specifically, we adversarially sample difficult training examples and, based on them, generate adversarial examples that are even more difficult. To make the models robust, the generated adversarial examples and the original training examples are then given to the models for joint optimization. Experiments are performed on benchmark data sets for common ad-hoc retrieval tasks: Web search, item recommendation, and question answering. The proposed methods are compared closely with IRGAN, a recent related approach that employs adversarial training. Experimental results indicate that the proposed methods significantly outperform strong baselines, especially for high-ranked documents, and that they outperform IRGAN in NDCG@5 using only 5% of the labeled data for the Web search task.
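The three steps named in the abstract (adversarial sampling, adversarial example generation, joint optimization) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: a linear scorer `w · x`, a pairwise logistic loss, softmax sampling of non-clicked documents by their current score, and a fast-gradient-sign perturbation of size `epsilon`. All function names are hypothetical.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def pairwise_loss(w, pos, neg):
    # Logistic loss on the score margin between a clicked and a non-clicked document.
    margin = dot(w, pos) - dot(w, neg)
    return math.log1p(math.exp(-margin))


def sample_hard_negative(w, candidates, rng, temperature=1.0):
    # Assumed sampling rule: non-clicked documents that the current model
    # scores highly are drawn more often (softmax over scores).
    scores = [dot(w, c) / temperature for c in candidates]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    return rng.choices(candidates, weights=weights, k=1)[0]


def perturb(w, neg, epsilon):
    # Assumed fast-gradient-sign step. For the loss above, the gradient with
    # respect to the negative's features is sigmoid(-margin) * w, so its sign is sign(w).
    return [x + epsilon * ((wi > 0) - (wi < 0)) for x, wi in zip(neg, w)]


def train_step(w, pos, candidates, rng, epsilon=0.1, lr=0.1):
    # Joint optimization: one gradient step on the sampled negative
    # and on its adversarially perturbed copy (treated as a constant).
    neg = sample_hard_negative(w, candidates, rng)
    adv = perturb(w, neg, epsilon)
    grad = [0.0] * len(w)
    for n in (neg, adv):
        coeff = -sigmoid(-(dot(w, pos) - dot(w, n)))
        for i in range(len(w)):
            grad[i] += coeff * (pos[i] - n[i])
    return [wi - lr * gi for wi, gi in zip(w, grad)]


if __name__ == "__main__":
    rng = random.Random(0)
    clicked = [1.0, 0.0]
    non_clicked = [[0.0, 1.0], [0.5, 0.5]]
    w = [0.0, 0.0]
    for _ in range(50):
        w = train_step(w, clicked, non_clicked, rng)
    print(w, [pairwise_loss(w, clicked, n) for n in non_clicked])
```

With a linear scorer, the perturbation raises the negative's score by `epsilon` times the L1 norm of `w`, which is one way to see why linear models are easy to attack and why training on the perturbed copies may help robustness.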
