Adversarial Noise Layer: Regularize Neural Network By Adding Noise

Oct 30, 2018

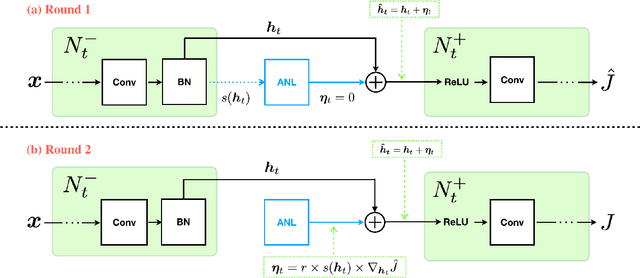

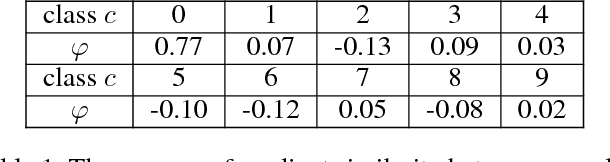

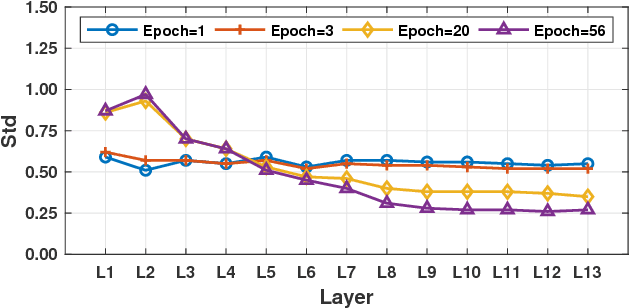

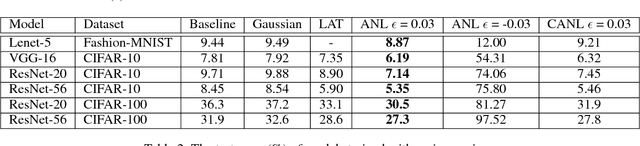

In this paper, we introduce a novel regularization method called Adversarial Noise Layer (ANL) and its efficient version, Class Adversarial Noise Layer (CANL), which significantly improve the generalization ability of CNNs by adding carefully crafted noise to the intermediate layer activations. ANL and CANL are easy to implement and can be integrated with most mainstream CNN-based models. We compare the effects of different types of noise and visually demonstrate that our proposed adversarial noise guides CNN models to extract cleaner feature maps, which further reduces the risk of over-fitting. We also conclude that models trained with ANL or CANL are more robust to adversarial examples generated by FGSM than models trained with traditional adversarial training approaches.
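The abstract does not spell out how the noise is crafted, so the following is only a minimal sketch of the general idea of adding adversarial noise to an intermediate activation. It assumes an FGSM-style rule (noise equal to `epsilon` times the sign of the loss gradient with respect to the hidden activation) and a single linear output layer; the function names, the weights `W_out`, and the value of `epsilon` are all hypothetical and are not taken from the paper.

```python
import math

def softmax(z):
    # Numerically stable softmax over a list of logits.
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def linear(W, x):
    # Matrix-vector product; W is a list of rows.
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def cross_entropy(logits, label):
    return -math.log(softmax(logits)[label])

def sign(v):
    return (v > 0) - (v < 0)

def adversarial_noise(h, W_out, label, epsilon):
    """Assumed FGSM-style noise on a hidden activation h:
    epsilon * sign(dLoss/dh), with Loss = cross-entropy of W_out @ h."""
    p = softmax(linear(W_out, h))
    # Gradient of cross-entropy w.r.t. the logits is (p - one_hot(label)).
    dlogits = [p_k - (1.0 if k == label else 0.0) for k, p_k in enumerate(p)]
    # Backpropagate through the linear layer: grad_h = W_out^T @ dlogits.
    grad_h = [sum(W_out[k][j] * dlogits[k] for k in range(len(W_out)))
              for j in range(len(h))]
    return [epsilon * sign(g) for g in grad_h]

# Hypothetical hidden activation and output weights (illustration only).
h = [0.5, 1.2, 0.0]
W_out = [[0.3, -0.8, 0.5],
         [-0.6, 0.4, 0.1]]
label = 0

noise = adversarial_noise(h, W_out, label, epsilon=0.1)
h_noisy = [a + n for a, n in zip(h, noise)]

clean_loss = cross_entropy(linear(W_out, h), label)
noisy_loss = cross_entropy(linear(W_out, h_noisy), label)
print(clean_loss, noisy_loss)
```

In this sketch the perturbed activation yields a higher loss than the clean one, which is what makes the noise "adversarial". In a training loop, the noisy activation would be passed forward in place of the clean one, so the layers after it have to learn to cope with the perturbation.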
