Adversarial Evaluation of Autonomous Vehicles in Lane-Change Scenarios


Apr 14, 2020

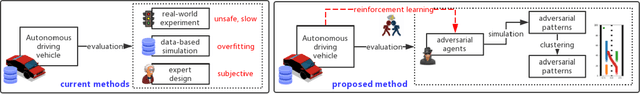

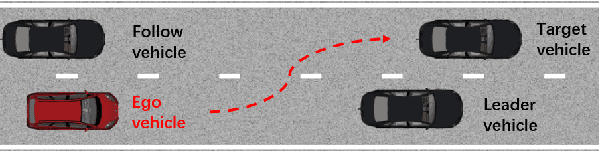

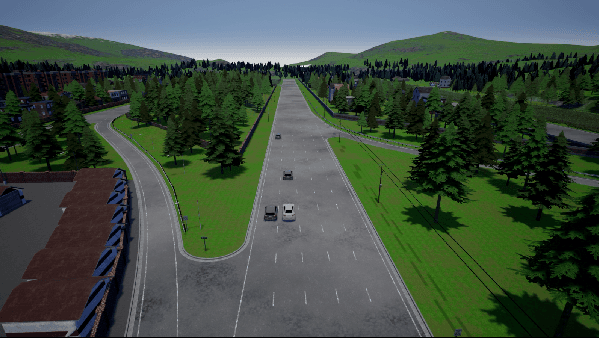

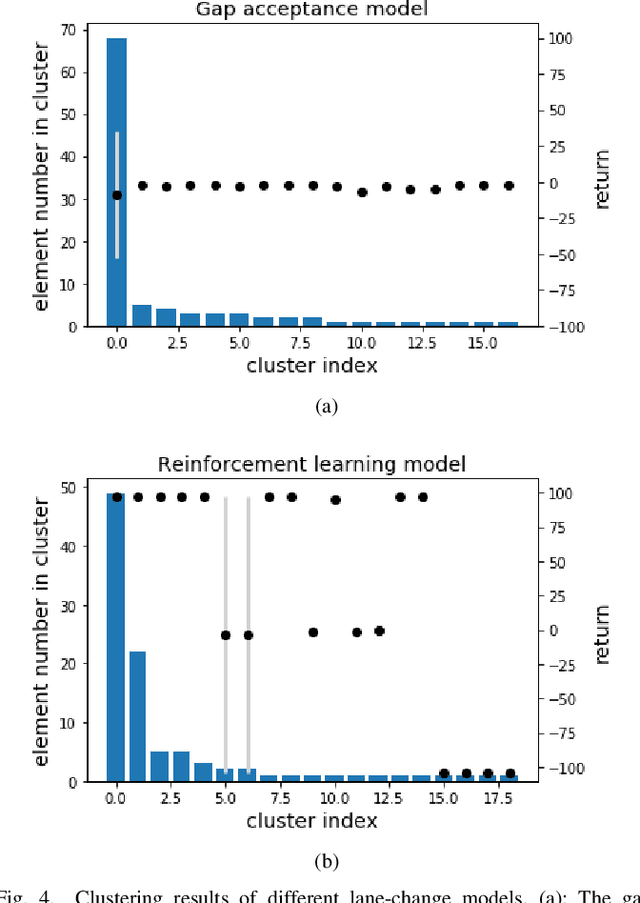

Autonomous vehicles must be comprehensively evaluated before being deployed in cities and on highways. Current evaluation procedures neither target the weaknesses of the vehicle under test nor evolve over time, so they can hardly generate adversarial environments and pose insufficient challenges to autonomous vehicles. To overcome these shortcomings of static evaluation methods, this paper proposes a novel method that generates adversarial environments with deep reinforcement learning and clusters them with a nonparametric Bayesian method. Lane-change, a representative task of autonomous driving, is used to demonstrate the superiority of the proposed method. First, two lane-change models are developed separately, one with a rule-based method and one with a learning-based method, to serve as the models under evaluation and comparison. Next, adversarial environments are generated by training the surrounding interactive vehicles with deep reinforcement learning to obtain locally optimal ensembles. Then, a nonparametric Bayesian approach is used to cluster the adversarial policies of the interactive vehicles. Finally, the adversarial environment patterns are illustrated, and the performance of the two lane-change models is evaluated and compared. The simulation results indicate that both models perform significantly worse in adversarial environments than in naturalistic environments, and that many weaknesses are successfully extracted within a few tests.
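To make the clustering step more concrete, the sketch below groups adversarial policies without fixing the number of clusters in advance. The abstract does not say which nonparametric Bayesian model is used, so this example substitutes DP-means, a hard-assignment simplification derived from Dirichlet process mixtures, and should not be read as the paper's method. The function name `dp_means`, the penalty `lam`, and the two-dimensional policy feature vectors are all hypothetical and chosen only for illustration.

```python
from math import dist


def dp_means(points, lam, max_iter=100):
    """Cluster points with DP-means (an assumed stand-in, not the paper's model).

    lam is the squared-distance threshold above which a point opens a new
    cluster, so the number of clusters is inferred rather than fixed.
    """
    n = len(points)
    # Start with a single cluster centered on the global mean.
    centers = [tuple(sum(x) / n for x in zip(*points))]
    labels = [0] * n
    for _ in range(max_iter):
        changed = False
        for i, p in enumerate(points):
            d2 = [dist(p, c) ** 2 for c in centers]
            k = min(range(len(centers)), key=d2.__getitem__)
            if d2[k] > lam:
                # Too far from every existing center: open a new cluster here.
                centers.append(tuple(p))
                k = len(centers) - 1
            if labels[i] != k:
                labels[i] = k
                changed = True
        # Drop emptied clusters, relabel compactly, and recompute the means.
        used = sorted(set(labels))
        remap = {old: new for new, old in enumerate(used)}
        labels = [remap[label] for label in labels]
        centers = []
        for k in range(len(used)):
            members = [p for p, label in zip(points, labels) if label == k]
            centers.append(tuple(sum(x) / len(members) for x in zip(*members)))
        if not changed:
            break
    return labels, centers


# Hypothetical feature vectors summarizing six adversarial policies.
policies = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
            (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
labels, centers = dp_means(policies, lam=1.0)
print(labels, len(centers))
```

A larger `lam` merges policies into fewer patterns, while a smaller one splits them into more; in a full Bayesian treatment this trade-off would instead be governed by the concentration parameter of the prior.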
