Adversarial Eigen Attack on Black-Box Models

Aug 27, 2020

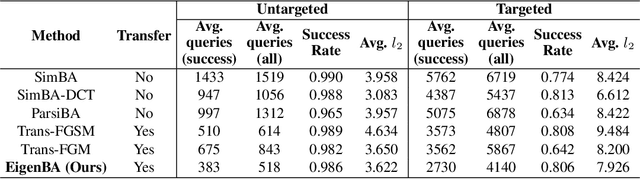

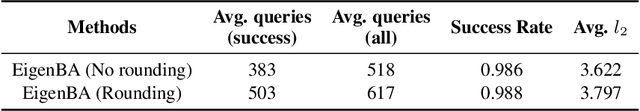

Black-box adversarial attacks have attracted considerable research interest because of their practical relevance to AI safety. Compared with the white-box setting, the black-box setting is harder: less information about the attacked model is available, and the attacker is constrained by a query budget. A common way to improve attack efficiency is to draw on a pre-trained, transferable white-box model. In this paper, we propose a novel setting for transferable black-box attacks: the attacker may use external information from a pre-trained model whose network parameters are available, but, unlike in previous studies, no additional training data may be used to change or fine-tune that model. To address this setting, we propose a new algorithm, EigenBA. By leveraging the Jacobian matrix of the pre-trained white-box model, our method aims to extract more gradient information about the black-box model and so improve attack efficiency, while keeping the perturbation to the original image small. We show that the optimal perturbations are closely related to the right singular vectors of this Jacobian matrix. Experiments on ImageNet and CIFAR-10 show that even a fixed (unlearnable) pre-trained white-box model can significantly boost the efficiency of a black-box attack, and that our proposed method improves it further.
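The link between small perturbations and right singular vectors can be illustrated with a standard linear-algebra fact: for a fixed Jacobian J, the unit-norm perturbation that changes the linearized output J·δ the most is the top right singular vector of J, and the size of that change is the largest singular value. The sketch below is only an illustration of this fact and not the authors' EigenBA implementation. The 2×3 matrix `J` is a made-up toy Jacobian, and the helper names (`matvec`, `rmatvec`, `top_right_singular_vector`) are hypothetical. It uses plain power iteration on JᵀJ so that it runs without third-party libraries.

```python
import math
import random


def matvec(J, v):
    """Compute J @ v for a matrix stored as a list of rows."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in J]


def rmatvec(J, u):
    """Compute J^T @ u."""
    return [sum(J[i][j] * u[i] for i in range(len(J))) for j in range(len(J[0]))]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def top_right_singular_vector(J, iters=500, seed=0):
    """Power iteration on J^T J; returns (v1, sigma1)."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(len(J[0]))]
    n = norm(v)
    v = [x / n for x in v]
    for _ in range(iters):
        w = rmatvec(J, matvec(J, v))  # one application of J^T J
        n = norm(w)
        v = [x / n for x in w]
    return v, norm(matvec(J, v))


# Toy Jacobian (an assumption for illustration): 3 input dims, 2 output dims.
J = [[3.0, 0.0, 0.0],
     [0.0, 1.0, 0.5]]

v1, sigma1 = top_right_singular_vector(J)

# Compare against many random unit-norm perturbations.
rng = random.Random(1)
best_random = 0.0
for _ in range(1000):
    d = [rng.gauss(0.0, 1.0) for _ in range(3)]
    n = norm(d)
    d = [x / n for x in d]
    best_random = max(best_random, norm(matvec(J, d)))

print("largest singular value:", sigma1)
print("best random direction gain:", best_random)
```

No random unit direction produces a larger output change than `v1`, which is why, under a linear approximation, probing along right singular vectors gives the most output change per unit of perturbation norm.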
