Adversarial $\alpha$-divergence Minimization for Bayesian Approximate Inference


Sep 18, 2019

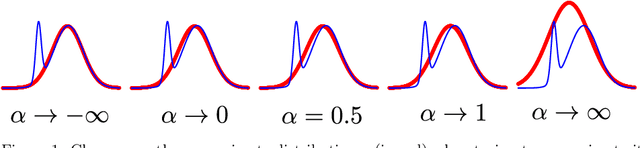

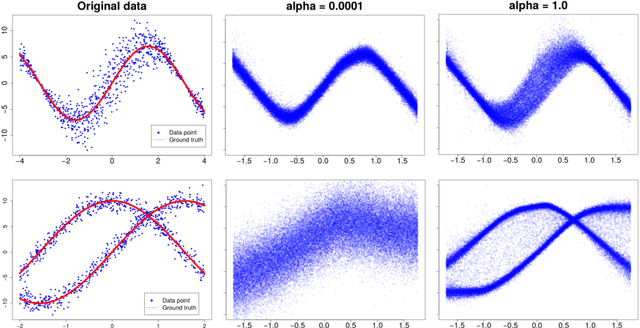

Neural networks are popular models for regression. They are often trained via back-propagation to find a value of the weights that correctly predicts the observed data. Although back-propagation has shown good performance in many applications, it cannot easily output an estimate of the uncertainty in the predictions made. Measuring the uncertainty in the predictions of machine learning models is critical in many applications. Uncertainty estimates can be obtained by following a Bayesian approach, in which a posterior distribution over the model parameters is computed. The posterior distribution summarizes which parameter values are compatible with the data. Typically, this posterior distribution is intractable and has to be approximated. Several approaches have been considered for solving this problem. We propose here a general method for approximate Bayesian inference that is based on minimizing $\alpha$-divergences and allows for flexible approximate distributions. The method is evaluated in extensive experiments in the context of Bayesian neural networks for regression. The results show that it often gives better performance in terms of the test log-likelihood and sometimes in terms of the squared error.
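For reference, the abstract does not state which form of the $\alpha$-divergence is minimized. A commonly used definition (Amari's form, assumed here for illustration) between the exact posterior $p$ and an approximation $q$ over parameters $\theta$ is sketched below; the symbols $p$, $q$, and $\theta$ are notation introduced for this sketch and do not come from the text above.

```latex
% Amari's alpha-divergence for normalized densities p and q (assumed form).
D_{\alpha}\left[p \,\|\, q\right]
  = \frac{1}{\alpha(1-\alpha)}
    \left( 1 - \int p(\theta)^{\alpha}\, q(\theta)^{1-\alpha}\, d\theta \right),
  \qquad \alpha \in \mathbb{R} \setminus \{0, 1\}

% Limiting cases recover the two Kullback-Leibler divergences.
\lim_{\alpha \to 1} D_{\alpha}\left[p \,\|\, q\right] = \mathrm{KL}\left[p \,\|\, q\right],
\qquad
\lim_{\alpha \to 0} D_{\alpha}\left[p \,\|\, q\right] = \mathrm{KL}\left[q \,\|\, p\right]
```

Under this definition, $\alpha \to 0$ corresponds to the objective used in standard variational inference, and $\alpha \to 1$ to the one targeted by expectation propagation, so varying $\alpha$ interpolates between the two.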
