Adversarial Conversational Shaping for Intelligent Agents

Paper and Code

Jul 20, 2023

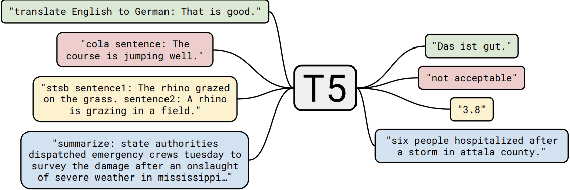

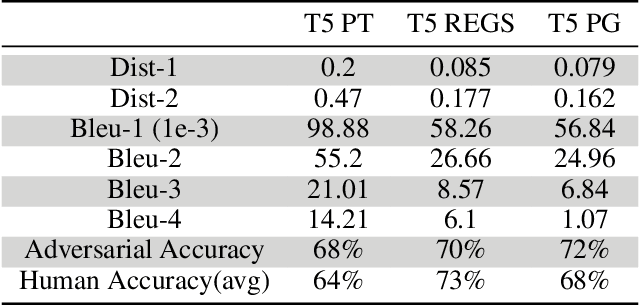

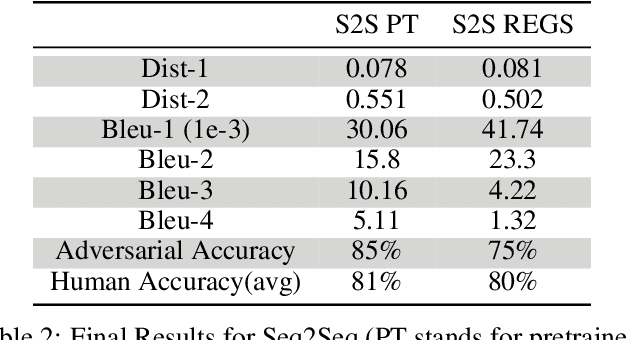

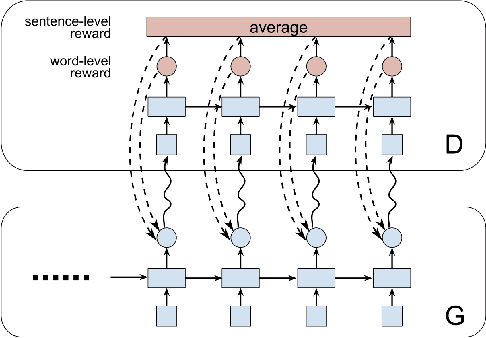

The recent emergence of deep learning methods has enabled the research community to achieve state-of-the art results in several domains including natural language processing. However, the current robocall system remains unstable and inaccurate: text generator and chat-bots can be tedious and misunderstand human-like dialogue. In this work, we study the performance of two models able to enhance an intelligent conversational agent through adversarial conversational shaping: a generative adversarial network with policy gradient (GANPG) and a generative adversarial network with reward for every generation step (REGS) based on the REGS model presented in Li et al. [18] . This model is able to assign rewards to both partially and fully generated text sequences. We discuss performance with different training details : seq2seq [ 36] and transformers [37 ] in a reinforcement learning framework.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge