Adiabatic Quantum Linear Regression

Paper and Code

Aug 05, 2020

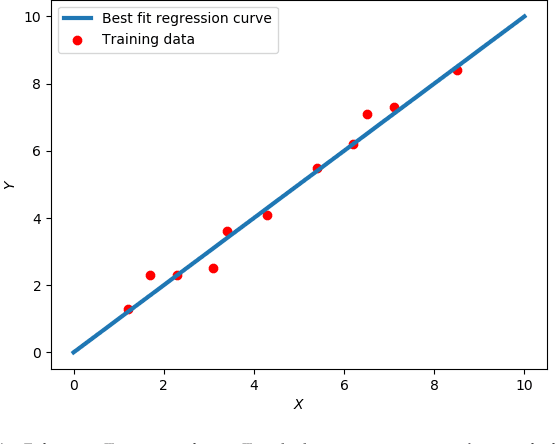

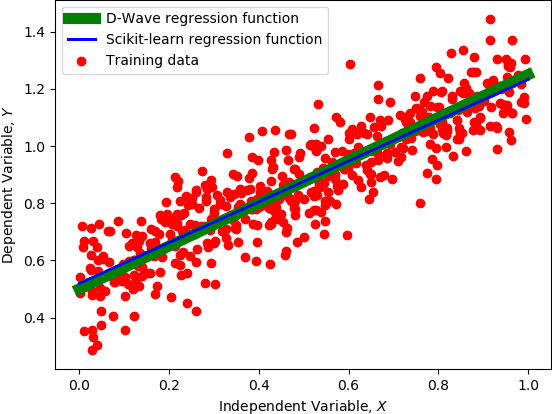

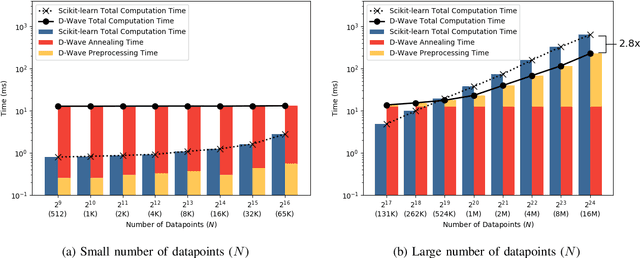

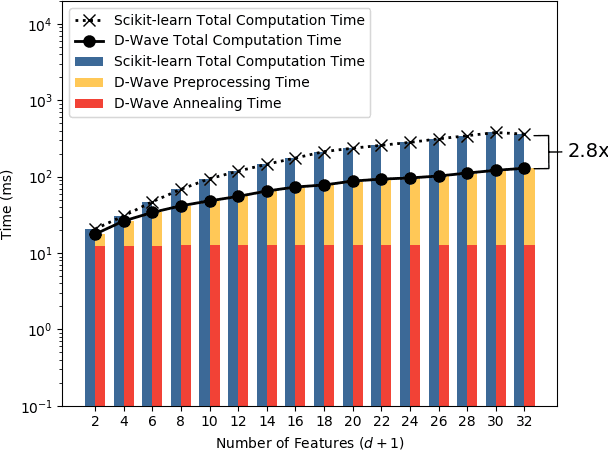

A major challenge in machine learning is the computational expense of training these models. Model training can be viewed as a form of optimization used to fit a machine learning model to a set of data, which can take up significant amount of time on classical computers. Adiabatic quantum computers have been shown to excel at solving optimization problems, and therefore, we believe, present a promising alternative to improve machine learning training times. In this paper, we present an adiabatic quantum computing approach for training a linear regression model. In order to do this, we formulate the regression problem as a quadratic unconstrained binary optimization (QUBO) problem. We analyze our quantum approach theoretically, test it on the D-Wave 2000Q adiabatic quantum computer and compare its performance to a classical approach that uses the Scikit-learn library in Python. Our analysis shows that the quantum approach attains up to 2.8x speedup over the classical approach on larger datasets, and performs at par with the classical approach on the regression error metric.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge