Adaptive Structural Hyper-Parameter Configuration by Q-Learning


Mar 02, 2020

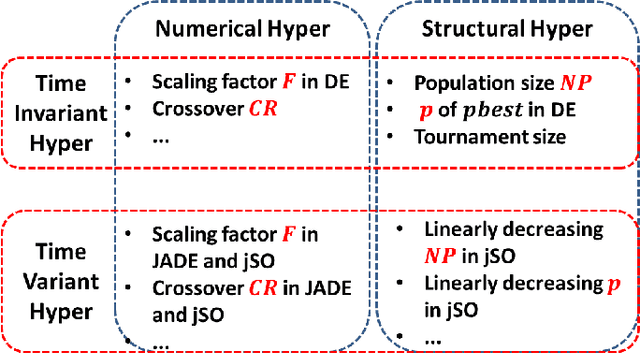

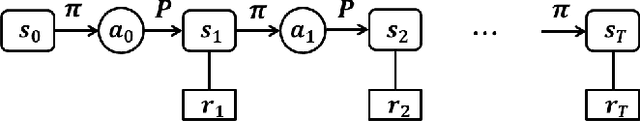

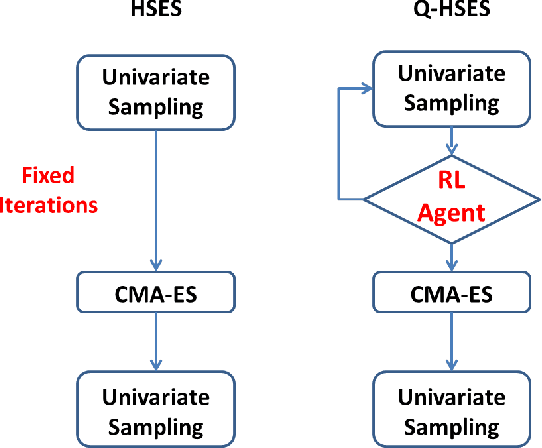

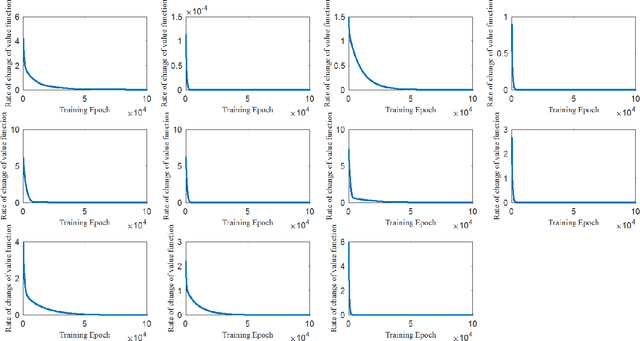

Tuning hyper-parameters for evolutionary algorithms is an important issue in computational intelligence. The performance of an evolutionary algorithm depends not only on the design of its operation strategy but also on its hyper-parameters. Hyper-parameters can be categorized along two dimensions: structural versus numerical, and time-invariant versus time-variant. In existing studies, structural hyper-parameters are usually either tuned in advance (time-invariant) or adjusted by hand-crafted schedules (time-variant). In this paper, we make the first attempt to model the tuning of structural hyper-parameters as a reinforcement learning problem, and propose using Q-learning to tune the structural hyper-parameter that controls computational resource allocation in the CEC 2018 winner algorithm. Experimental results compare favorably with the winner algorithm on the CEC 2018 test functions.
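To make the idea of treating a structural choice as a reinforcement learning problem concrete, here is a minimal tabular Q-learning sketch. It is not the paper's implementation: the abstract does not define the states, actions, or reward, so everything here is an assumption for illustration. States stand for hypothetical search stages, actions for hypothetical structural options (for example, which component receives the next share of the evaluation budget), and the reward is a toy signal that favors option `s % n_actions` in stage `s`.

```python
import random


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.5):
    """One tabular Q-learning step:
    Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))."""
    best_next = max(q[next_state])
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
    return q[state][action]


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy choice among the structural options of one state."""
    if rng.random() < epsilon:
        return rng.randrange(len(q[state]))  # explore
    row = q[state]
    return max(range(len(row)), key=row.__getitem__)  # exploit


def train(n_states=4, n_actions=3, episodes=2000, epsilon=0.3, seed=0):
    """Learn which option to pick in each stage of a toy search process."""
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(episodes):
        for s in range(n_states):
            a = choose_action(q, s, epsilon, rng)
            # Hypothetical reward: 1 if the option suits this stage, else 0.
            # In a real optimizer this might be the fitness improvement
            # observed after applying the chosen option (an assumption).
            r = 1.0 if a == s % n_actions else 0.0
            next_s = min(s + 1, n_states - 1)  # last stage loops to itself
            q_update(q, s, a, r, next_s)
    return q


if __name__ == "__main__":
    table = train()
    policy = [max(range(len(row)), key=row.__getitem__) for row in table]
    print("greedy option per stage:", policy)
```

Because the choice is made per stage from learned values, the resulting schedule is time-variant without being hand-crafted, which is the contrast the abstract draws with existing approaches.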
