Adaptive Private-K-Selection with Adaptive K and Application to Multi-label PATE

Paper and Code

Mar 30, 2022

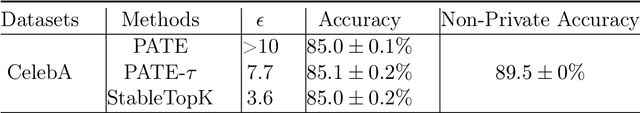

We provide an end-to-end Renyi DP based-framework for differentially private top-$k$ selection. Unlike previous approaches, which require a data-independent choice on $k$, we propose to privately release a data-dependent choice of $k$ such that the gap between $k$-th and the $(k+1)$st "quality" is large. This is achieved by a novel application of the Report-Noisy-Max. Not only does this eliminate one hyperparameter, the adaptive choice of $k$ also certifies the stability of the top-$k$ indices in the unordered set so we can release them using a variant of propose-test-release (PTR) without adding noise. We show that our construction improves the privacy-utility trade-offs compared to the previous top-$k$ selection algorithms theoretically and empirically. Additionally, we apply our algorithm to "Private Aggregation of Teacher Ensembles (PATE)" in multi-label classification tasks with a large number of labels and show that it leads to significant performance gains.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge