Adaptive Image Inpainting

Paper and Code

Jan 01, 2022

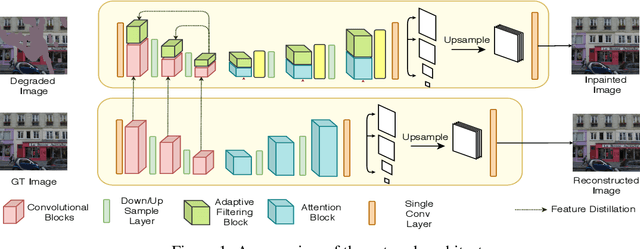

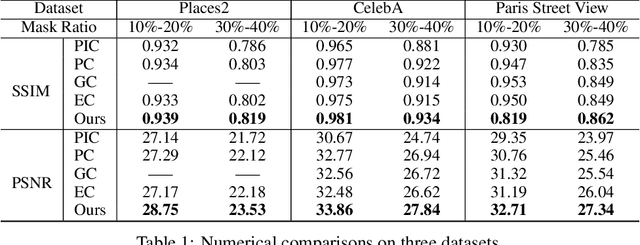

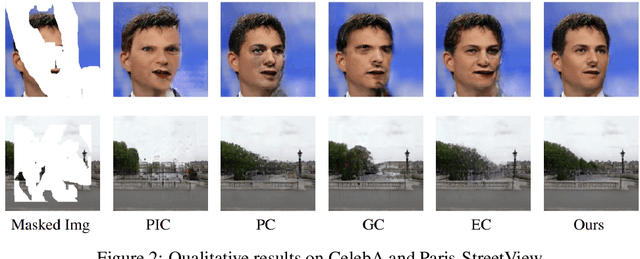

Deep neural networks have recently brought significant improvements to image inpainting. However, many of these methods still produce distorted structures or blurry textures that are inconsistent with the surrounding areas. The problem is rooted in the encoder layers' ineffectiveness in building a complete and faithful embedding of the missing regions. To address this, two-stage approaches deploy two separate networks for coarse and fine estimates of the inpainted image, while other approaches use handcrafted features such as edges or contours to guide the reconstruction process. These methods suffer from high computational overhead owing to multiple generator networks, the limited capability of handcrafted features, and sub-optimal use of the information present in the ground truth. Motivated by these observations, we propose a distillation-based approach for inpainting that provides direct feature-level supervision to the encoder layers in an adaptive manner. We deploy cross- and self-distillation techniques and discuss the need for a dedicated completion block in the encoder to achieve the distillation target. We conduct extensive evaluations on multiple datasets to validate our method.
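To make the idea of feature-level supervision concrete, the following is a minimal sketch of a weighted feature-matching loss, not the paper's actual formulation. It assumes that encoder features from the masked input ("student") are pulled toward features computed from the ground-truth image ("teacher"), and that the adaptivity can be modelled as a per-element weight. The function names, the flattened-list representation, and the squared-error form are all illustrative assumptions.

```python
from typing import Sequence


def weighted_feature_loss(
    student: Sequence[float],
    teacher: Sequence[float],
    weights: Sequence[float],
) -> float:
    """Weighted mean squared difference between two flattened feature maps.

    Hypothetical helper: `weights` stands in for an adaptive importance map
    (an assumption, not the paper's definition).
    """
    if not (len(student) == len(teacher) == len(weights)):
        raise ValueError("student, teacher and weights must have equal length")
    total_weight = sum(weights)
    if total_weight == 0:
        return 0.0  # nothing is supervised at this layer
    weighted_sq_error = sum(
        w * (s - t) ** 2 for s, t, w in zip(student, teacher, weights)
    )
    return weighted_sq_error / total_weight


def distillation_loss(
    student_layers: Sequence[Sequence[float]],
    teacher_layers: Sequence[Sequence[float]],
    weight_layers: Sequence[Sequence[float]],
) -> float:
    """Sum the weighted feature loss over several encoder layers."""
    return sum(
        weighted_feature_loss(s, t, w)
        for s, t, w in zip(student_layers, teacher_layers, weight_layers)
    )
```

In this sketch, setting a weight to zero removes that feature location from the supervision, which is one simple way to picture an adaptive target; the paper's own weighting scheme may differ.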
