Adaptive Automotive Radar data Acquisition


Oct 05, 2020

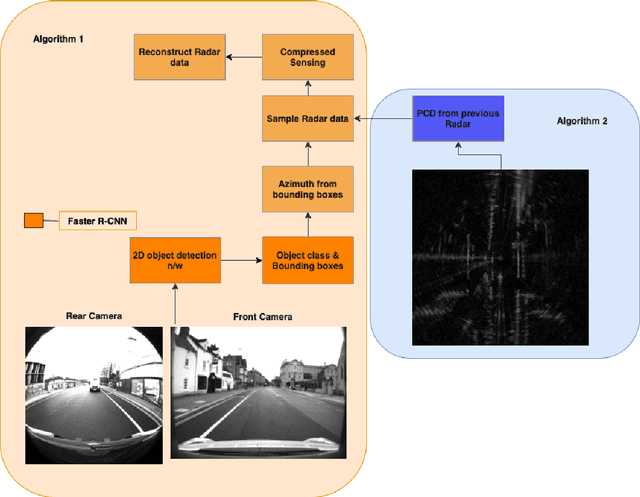

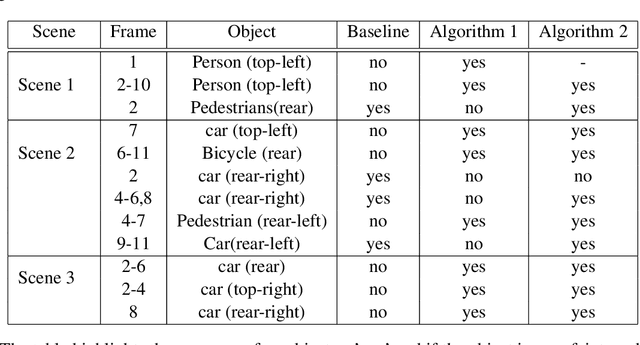

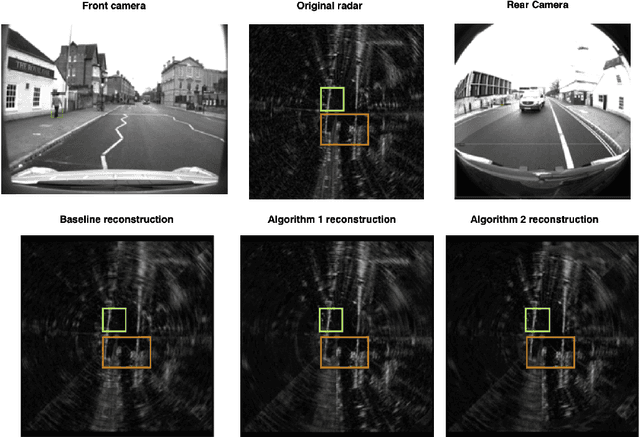

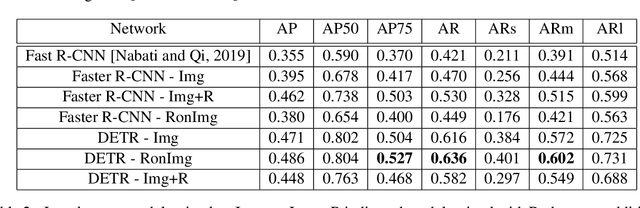

In autonomous driving, it is vital to acquire and efficiently process data from multiple sensors to obtain a complete and robust view of the surroundings. Many studies have shown the value of radar data alongside images, since radar is robust to weather conditions. We develop a novel algorithm that selects radar return regions to be sampled at a higher rate, based on previously reconstructed radar frames and image data. Our approach uses adaptive block-based Compressed Sensing (CS) to dynamically allocate higher sampling rates to "important" blocks while keeping the overall sampling budget per frame fixed. This improves on block-based CS, which divides the radar frame into blocks so that computation can be parallelized. We use the Faster R-CNN object detection network to identify these important blocks from previous radar and image information, which mitigates the potential loss of information about an object missed by the image or by the object detection network. We also develop an end-to-end transformer-based 2D object detection network using the NuScenes radar and image data. Finally, we compare the performance of our algorithm against that of standard CS on the Oxford Radar RobotCar dataset.
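The budget-preserving allocation described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `allocate_block_rates`, the two-level rate scheme (one rate for important blocks, one for all others), and the example numbers are assumptions made here for illustration. The only property taken from the abstract is that important blocks receive a higher sampling rate while the average rate over the frame stays at the overall budget.

```python
import numpy as np


def allocate_block_rates(important, overall_rate, important_rate):
    """Assign a per-block sampling rate under a fixed frame budget.

    Hypothetical helper: `important` is a boolean grid marking the
    blocks flagged as important (e.g. by an object detector),
    `overall_rate` is the target mean sampling rate for the frame, and
    `important_rate` is the rate given to flagged blocks. The remaining
    budget is spread evenly over the unflagged blocks.
    """
    important = np.asarray(important, dtype=bool)
    if not (0.0 < overall_rate <= 1.0 and 0.0 < important_rate <= 1.0):
        raise ValueError("rates must lie in (0, 1]")

    n_total = important.size
    n_important = int(important.sum())

    # With no flagged blocks (or all blocks flagged) there is nothing to
    # trade off, so fall back to uniform block-based sampling.
    if n_important == 0 or n_important == n_total:
        return np.full(important.shape, overall_rate, dtype=float)

    # Budget left after serving the important blocks, expressed in
    # "block-rate units" (sum of rates over blocks).
    remaining = overall_rate * n_total - important_rate * n_important
    other_rate = remaining / (n_total - n_important)
    if other_rate < 0.0 or other_rate > 1.0:
        raise ValueError("requested rates are infeasible for this budget")

    return np.where(important, important_rate, other_rate)


# Example: a 4x4 grid of blocks with two flagged blocks, a 10% overall
# budget, and 30% sampling for the flagged blocks (illustrative values).
mask = np.zeros((4, 4), dtype=bool)
mask[1, 2] = True
mask[2, 2] = True
rates = allocate_block_rates(mask, overall_rate=0.10, important_rate=0.30)
```

In this sketch the mean of `rates` equals the overall budget by construction, so raising the rate on flagged blocks lowers it everywhere else. A real system would also need to turn each rate into a measurement matrix per block and reconstruct the frame, which is outside the scope of this example.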
