Adapting Resilient Propagation for Deep Learning


Sep 16, 2015

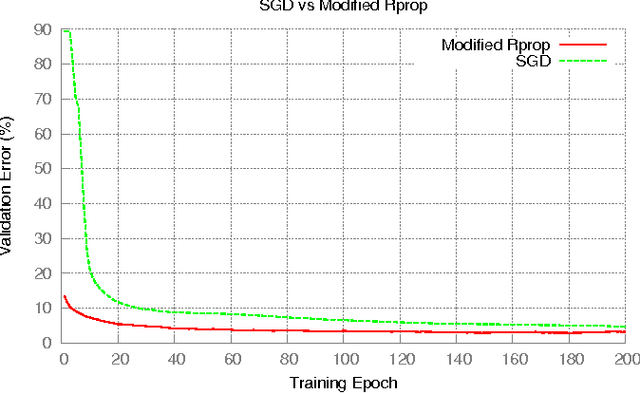

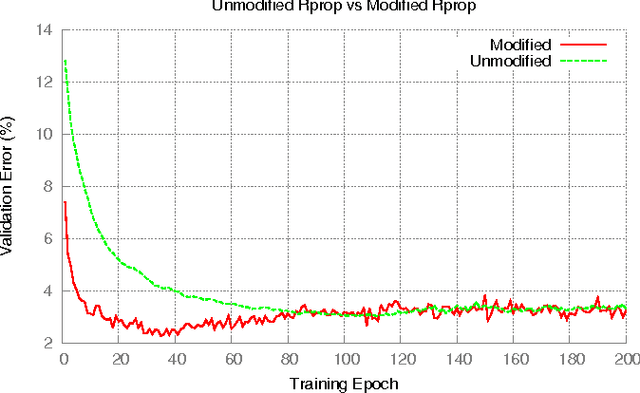

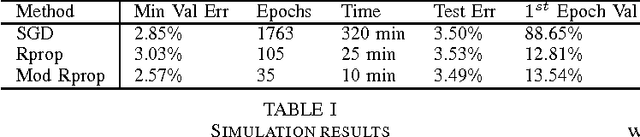

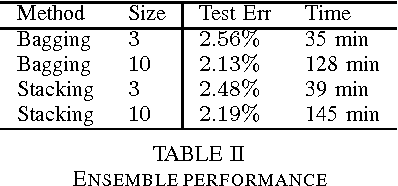

The Resilient Propagation (Rprop) algorithm has been very popular for backpropagation training of multilayer feed-forward neural networks in various applications. Standard Rprop, however, encounters difficulties in the context of deep neural networks, as typically happens with gradient-based learning algorithms. In this paper, we propose a modification of Rprop that combines standard Rprop steps with a special dropout technique. We apply the method to train deep neural networks as standalone components and in ensemble formulations. Results on the MNIST dataset show that the proposed modification alleviates standard Rprop's problems, demonstrating improved learning speed and accuracy.
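For readers unfamiliar with the "standard Rprop steps" the abstract refers to, the following is a minimal sketch of a sign-based Rprop update in plain Python. It illustrates the commonly described iRprop- variant (no weight backtracking), not the modified algorithm proposed in the paper; the dropout component is not shown because the abstract does not specify it. The function names `rprop_step` and `minimize_quadratic`, the toy quadratic objective, and the hyperparameter values (`eta_plus=1.2`, `eta_minus=0.5`, step bounds) are assumptions chosen for illustration.

```python
def rprop_step(weights, grads, prev_grads, steps,
               eta_plus=1.2, eta_minus=0.5,
               step_min=1e-6, step_max=50.0):
    """One in-place iRprop- update: only the sign of each gradient is used."""
    for i, g in enumerate(grads):
        agreement = g * prev_grads[i]
        if agreement > 0:
            # Same sign as last time: grow the per-weight step size.
            steps[i] = min(steps[i] * eta_plus, step_max)
        elif agreement < 0:
            # Sign flipped (a minimum was overshot): shrink the step
            # and skip the update for this weight on this iteration.
            steps[i] = max(steps[i] * eta_minus, step_min)
            g = 0.0
        if g > 0:
            weights[i] -= steps[i]
        elif g < 0:
            weights[i] += steps[i]
        prev_grads[i] = g
    return weights


def minimize_quadratic(target, iters=200, initial_step=0.1):
    """Toy driver (hypothetical): minimize sum((w_i - t_i)^2) with rprop_step."""
    weights = [0.0] * len(target)
    prev_grads = [0.0] * len(target)
    steps = [initial_step] * len(target)
    for _ in range(iters):
        grads = [2.0 * (w - t) for w, t in zip(weights, target)]
        rprop_step(weights, grads, prev_grads, steps)
    return weights


if __name__ == "__main__":
    print(minimize_quadratic([3.0, -2.0]))
```

Because the update depends only on gradient signs and per-weight step sizes, it is insensitive to gradient magnitude, which is the property usually cited as Rprop's main appeal.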

* Published in the proceedings of the UK Workshop on Computational Intelligence 2015 (UKCI)
