Adaptable Text Matching via Meta-Weight Regulator

Paper and Code

Apr 27, 2022

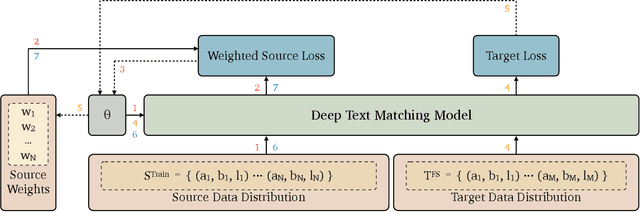

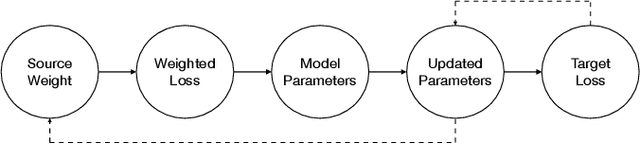

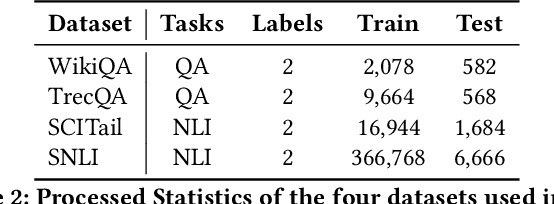

Neural text matching models have been used in a range of applications such as question answering and natural language inference, and have yielded a good performance. However, these neural models are of a limited adaptability, resulting in a decline in performance when encountering test examples from a different dataset or even a different task. The adaptability is particularly important in the few-shot setting: in many cases, there is only a limited amount of labeled data available for a target dataset or task, while we may have access to a richly labeled source dataset or task. However, adapting a model trained on the abundant source data to a few-shot target dataset or task is challenging. To tackle this challenge, we propose a Meta-Weight Regulator (MWR), which is a meta-learning approach that learns to assign weights to the source examples based on their relevance to the target loss. Specifically, MWR first trains the model on the uniformly weighted source examples, and measures the efficacy of the model on the target examples via a loss function. By iteratively performing a (meta) gradient descent, high-order gradients are propagated to the source examples. These gradients are then used to update the weights of source examples, in a way that is relevant to the target performance. As MWR is model-agnostic, it can be applied to any backbone neural model. Extensive experiments are conducted with various backbone text matching models, on four widely used datasets and two tasks. The results demonstrate that our proposed approach significantly outperforms a number of existing adaptation methods and effectively improves the cross-dataset and cross-task adaptability of the neural text matching models in the few-shot setting.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge