Active Learning with Multiple Kernels

May 07, 2020

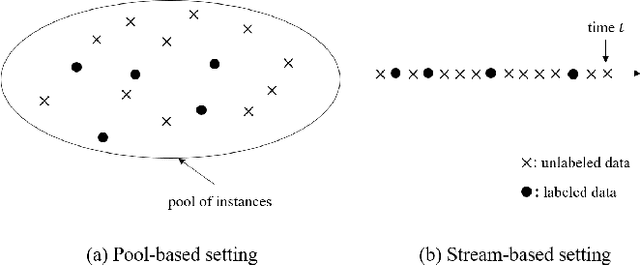

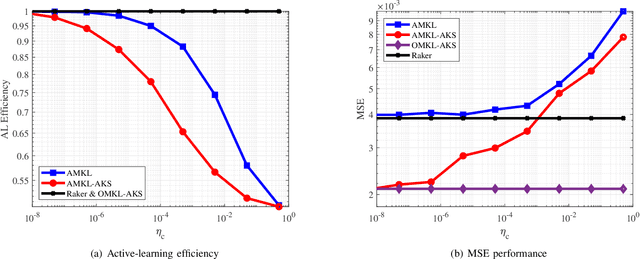

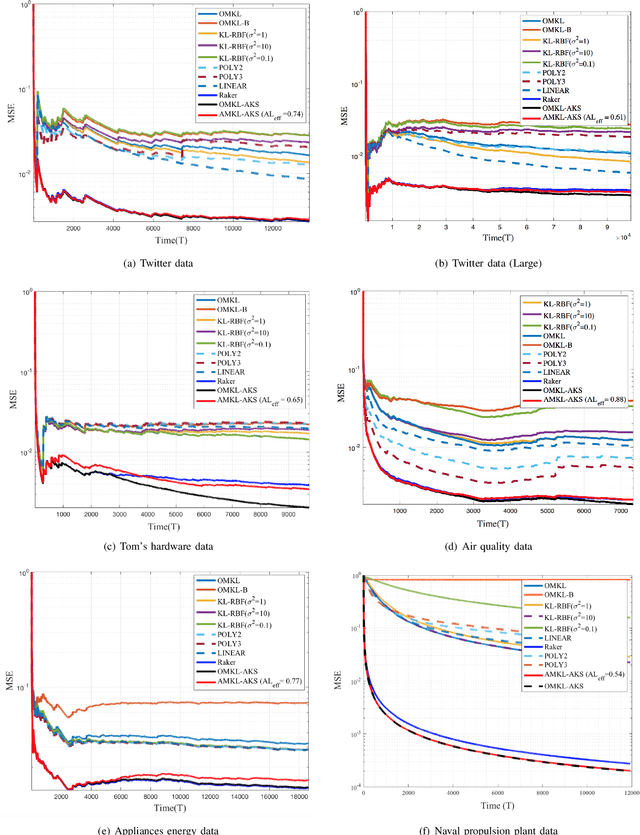

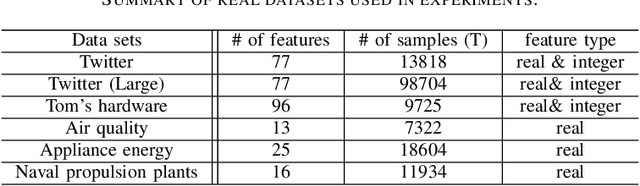

Online multiple kernel learning (OMKL) has shown attractive performance in nonlinear function learning tasks. Its major drawback, known as the curse of dimensionality, has recently been alleviated by leveraging a random feature approximation. In this paper, we introduce a new research problem, termed (stream-based) active multiple kernel learning (AMKL), in which a learner may request labels for selected data from an oracle according to a selection criterion. This is necessary in many real-world applications, where acquiring true labels is costly or time-consuming. We prove that AMKL achieves an optimal sublinear regret, implying that the proposed selection criterion indeed avoids unnecessary label requests. Furthermore, we propose AMKL with adaptive kernel selection (AMKL-AKS), in which irrelevant kernels can be excluded from the kernel dictionary 'on the fly'. This approach can improve both the efficiency of active learning and the accuracy of the function approximation. Numerical tests on various real datasets demonstrate that AMKL-AKS performs similarly to or better than the best-known OMKL while using fewer labeled data.
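To make the setting concrete, the following is a minimal sketch of stream-based active learning over several kernels with random Fourier features. It is not the paper's algorithm: the abstract does not state the selection criterion, so the disagreement-based rule used here, along with the names `make_rff` and `active_mkl` and all parameter values (`bandwidths`, `lr`, `eta`, `threshold`, `warmup`), are assumptions chosen purely for illustration. Adaptive kernel selection is not modeled.

```python
import math
import random


def make_rff(dim, n_features, bandwidth, rng):
    """Random Fourier feature map approximating a Gaussian kernel."""
    W = [[rng.gauss(0.0, 1.0 / bandwidth) for _ in range(dim)]
         for _ in range(n_features)]
    b = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    scale = math.sqrt(2.0 / n_features)

    def phi(x):
        return [scale * math.cos(sum(w * xi for w, xi in zip(row, x)) + bj)
                for row, bj in zip(W, b)]

    return phi


def dot(u, v):
    return sum(a * c for a, c in zip(u, v))


def active_mkl(X, y, bandwidths=(0.1, 1.0, 10.0), n_features=50,
               lr=0.25, eta=1.0, threshold=0.01, warmup=5, seed=0):
    """Process the stream (X, y); y[t] is read only when a label is requested."""
    rng = random.Random(seed)
    dim = len(X[0])
    maps = [make_rff(dim, n_features, s, rng) for s in bandwidths]
    thetas = [[0.0] * n_features for _ in maps]   # one linear model per kernel
    weights = [1.0 / len(maps)] * len(maps)       # combination weights
    preds, n_queries = [], 0

    for t, x in enumerate(X):
        feats = [m(x) for m in maps]
        per_kernel = [dot(th, f) for th, f in zip(thetas, feats)]
        y_hat = dot(weights, per_kernel)
        preds.append(y_hat)

        # Hypothetical criterion (an assumption, not the paper's rule):
        # query when the kernels' predictions disagree strongly.
        disagreement = sum(w * (p - y_hat) ** 2
                           for w, p in zip(weights, per_kernel))
        if n_queries < warmup or disagreement > threshold:
            label = y[t]                          # label request to the oracle
            n_queries += 1
            for i, f in enumerate(feats):
                err = per_kernel[i] - label
                # Online gradient step on the squared loss.
                thetas[i] = [th - lr * 2.0 * err * fj
                             for th, fj in zip(thetas[i], f)]
                # Multiplicative weight update; loss capped to avoid underflow.
                weights[i] *= math.exp(-eta * min(err ** 2, 50.0))
            total = sum(weights)
            weights = [w / total for w in weights]

    return preds, weights, n_queries
```

A possible use on a synthetic one-dimensional stream:

```python
rng = random.Random(1)
X = [[rng.uniform(-1.0, 1.0)] for _ in range(200)]
y = [math.sin(3.0 * x[0]) for x in X]
preds, weights, n_queries = active_mkl(X, y)
print(n_queries, weights)
```

Comparing `n_queries` with the stream length shows how many labels the rule skipped, and `weights` shows which bandwidths the combiner came to favor.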
