Active Learning and CSI acquisition for mmWave Initial Alignment


Dec 19, 2018

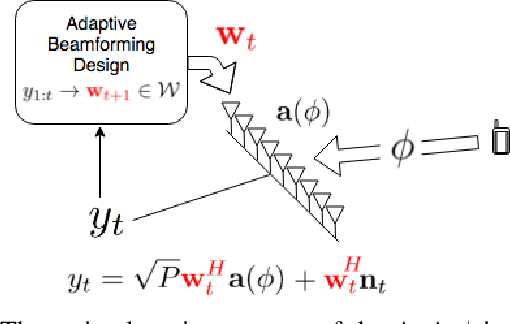

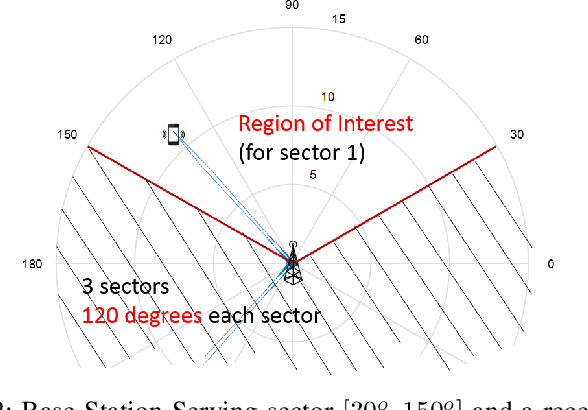

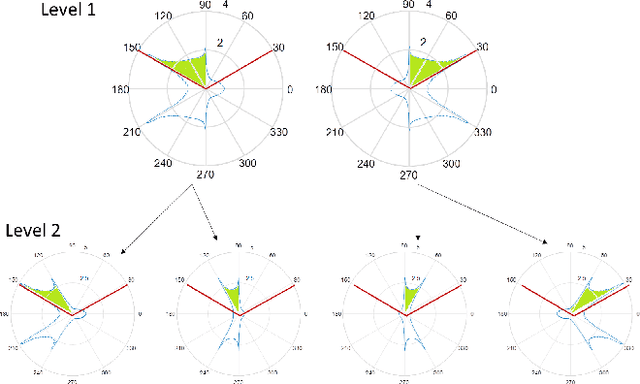

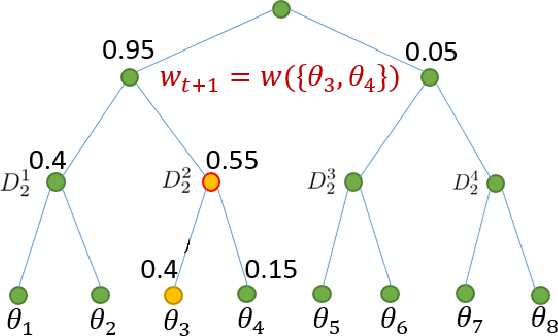

Millimeter wave (mmWave) communication with large antenna arrays is a promising technique for enabling extremely high data rates, thanks to the large available bandwidth. Given knowledge of an optimal directional beamforming vector, large antenna arrays have been shown to overcome the severe signal attenuation in mmWave. However, the fundamental limits and achievable performance of learning an optimal beamforming vector remain open questions. This paper considers the problem of adaptive and sequential optimization of the beamforming vectors during the initial access phase of communication. With a single-path channel model, the problem reduces to actively learning the Angle-of-Arrival (AoA) of the signal sent from the user to the Base Station (BS). Drawing on recent results in the design of a hierarchical beamforming codebook [1], sequential measurement-dependent noisy search [2], and active learning from an imperfect labeler [3], an adaptive and sequential alignment algorithm is proposed. For any given resolution and error probability of the estimated AoA, an upper bound on the expected search time of the proposed algorithm is derived via the Extrinsic Jensen-Shannon Divergence. The upper bound demonstrates that the search time of the proposed algorithm asymptotically matches the performance of noiseless bisection search up to a constant factor characterizing the AoA acquisition rate. Furthermore, the error probability of the acquired AoA decays exponentially fast with the search time, with an exponent that is a decreasing function of the acquisition rate. In numerical comparisons with prior work, the proposed algorithm yields a significant improvement in the system communication rate. Most notably, in the relevant regime of low (−10 dB to 5 dB) raw SNR, this establishes the first practically viable solution for initial access and, hence, the first demonstration of stand-alone mmWave communication.
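The adaptive search described in the abstract can be illustrated with a toy sketch. It is not the paper's algorithm. It assumes the AoA lies in one of `2**levels` equal angular bins, that each beam of the hierarchical codebook is an ideal contiguous interval of bins, and that each measurement is a one-bit "signal in beam?" answer flipped with probability `flip_prob`. That binary noise model is a simplifying stand-in for the actual received-signal model. The names `select_beam` and `align` are hypothetical.

```python
import random


def select_beam(post):
    """Pick a codebook beam (bin interval [lo, hi)) with posterior mass near 1/2."""
    lo, hi, mass = 0, len(post), 1.0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        left, right = sum(post[lo:mid]), sum(post[mid:hi])
        # Follow the heavier child of the current node in the binary beam tree.
        c_lo, c_hi, c_mass = (lo, mid, left) if left >= right else (mid, hi, right)
        if c_mass > 0.5:
            lo, hi, mass = c_lo, c_hi, c_mass
            continue
        # Parent mass is above 1/2 and child mass is at most 1/2:
        # use whichever of the two is closer to 1/2.
        if abs(c_mass - 0.5) <= abs(mass - 0.5):
            return c_lo, c_hi
        return lo, hi
    return lo, hi  # a single bin already holds more than half the mass


def align(true_bin, levels=6, flip_prob=0.1, eps=1e-6, max_steps=10_000, seed=0):
    """Return (estimated AoA bin, number of measurements used)."""
    rng = random.Random(seed)
    n = 2 ** levels
    post = [1.0 / n] * n  # uniform prior over AoA bins
    steps = 0
    while max(post) < 1.0 - eps and steps < max_steps:
        lo, hi = select_beam(post)
        # Assumed measurement model: noisy one-bit answer to "is the AoA in the beam?"
        y = lo <= true_bin < hi
        if rng.random() < flip_prob:
            y = not y
        # Bayes update: bins consistent with y are weighted by 1 - flip_prob.
        post = [
            p * ((1.0 - flip_prob) if ((lo <= i < hi) == y) else flip_prob)
            for i, p in enumerate(post)
        ]
        total = sum(post)
        post = [p / total for p in post]
        steps += 1
    est = max(range(n), key=post.__getitem__)
    return est, steps


if __name__ == "__main__":
    print(align(true_bin=37, flip_prob=0.0))  # noiseless case
    print(align(true_bin=37, flip_prob=0.1))  # noisy case
```

With `flip_prob=0.0` the sketch reduces to plain bisection and stops after exactly `levels` measurements. With noise it needs more measurements to reach the same confidence `1 - eps`. This mirrors, in a much simplified setting, the abstract's comparison between the proposed search and noiseless bisection.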
