Active-LATHE: An Active Learning Algorithm for Boosting the Error Exponent for Learning Homogeneous Ising Trees


Oct 28, 2021

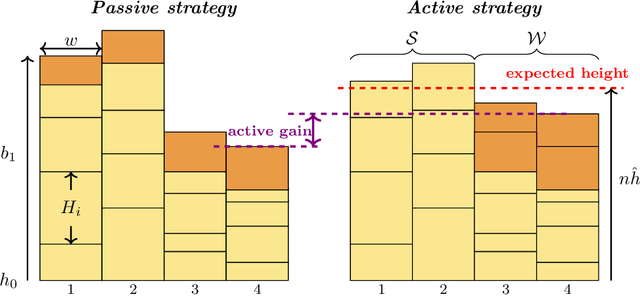

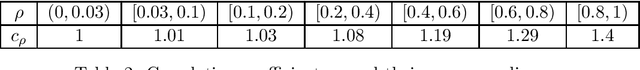

The Chow-Liu algorithm (IEEE Trans. Inform. Theory, 1968) has been a mainstay for learning tree-structured graphical models from i.i.d. sampled data vectors. Its theoretical properties have been well studied and are well understood. In this paper, we focus on a class of trees that is arguably even more fundamental, namely *homogeneous* trees, in which each pair of nodes that forms an edge has the same correlation $\rho$. We ask whether we can further reduce the error probability of learning the structure of the homogeneous tree model when *active learning* or *active sampling of nodes or variables* is allowed. Our figure of merit is the *error exponent*, which quantifies the exponential rate of decay of the error probability as the number of data samples increases. At first sight, an improvement in the error exponent seems impossible, as all the edges are statistically identical. We design and analyze an algorithm, Active Learning Algorithm for Trees with Homogeneous Edge (Active-LATHE), which surprisingly boosts the error exponent by at least 40% when $\rho$ is at least $0.8$. For all other values of $\rho$, we also observe commensurate, but more modest, improvements in the error exponent. Our analysis hinges on judiciously exploiting the minute but detectable statistical variation of the samples to allocate more data to parts of the graph in which we are less confident of being correct.
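
For orientation, the passive baseline mentioned above can be sketched in a few lines. This is not Active-LATHE, and it is not taken from the paper's code; it is a minimal illustration under stated assumptions: spins take values in {-1, +1}, there is no external field, and the absolute empirical correlation is used as the edge weight in place of the empirical mutual information that Chow-Liu formally uses (a common simplification for this model). The function names `sample_homogeneous_tree` and `chow_liu_ising` are hypothetical.

```python
import numpy as np

def sample_homogeneous_tree(edges, p, rho, n, rng):
    """Draw n samples from a zero-field Ising tree with edge correlation rho.

    Assumes node 0 is the root and `edges` lists (parent, child) pairs in an
    order where each parent has already been sampled.
    """
    x = np.empty((n, p), dtype=int)
    x[:, 0] = rng.choice([-1, 1], size=n)
    for parent, child in edges:
        # The child copies its parent with probability (1 + rho) / 2,
        # which gives E[x_parent * x_child] = rho on every edge.
        agree = rng.random(n) < (1 + rho) / 2
        x[:, child] = np.where(agree, x[:, parent], -x[:, parent])
    return x

def chow_liu_ising(x):
    """Maximum-weight spanning tree on |empirical correlation| (Kruskal)."""
    n, p = x.shape
    corr = np.abs(x.T @ x) / n
    pairs = sorted(
        ((corr[i, j], i, j) for i in range(p) for j in range(i + 1, p)),
        reverse=True,
    )
    root = list(range(p))  # union-find forest

    def find(u):
        while root[u] != u:
            root[u] = root[root[u]]  # path halving
            u = root[u]
        return u

    tree = set()
    for _, i, j in pairs:
        ri, rj = find(i), find(j)
        if ri != rj:  # adding (i, j) does not create a cycle
            root[ri] = rj
            tree.add((i, j))
    return tree

# Usage: a 5-node chain with rho = 0.8, learned from passively drawn samples.
rng = np.random.default_rng(0)
true_edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
samples = sample_homogeneous_tree(true_edges, p=5, rho=0.8, n=5000, rng=rng)
estimated = chow_liu_ising(samples)
```

In this passive setting every node is observed in every sample. The active setting described in the abstract instead chooses which nodes to sample, so that more data goes to the parts of the tree where the estimate is least certain.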
