Acquiring Common Sense Spatial Knowledge through Implicit Spatial Templates

Nov 21, 2017

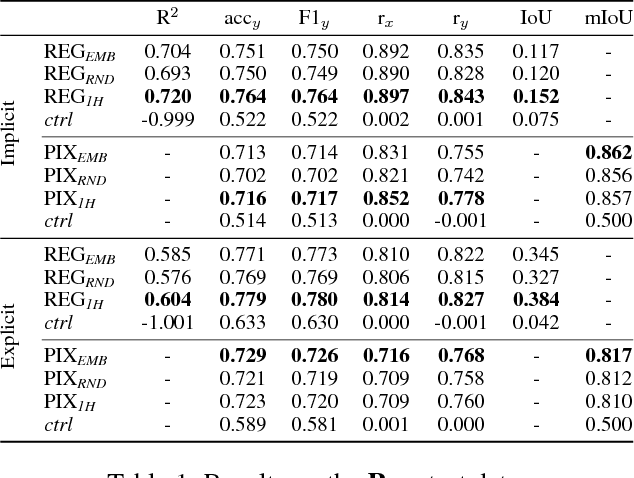

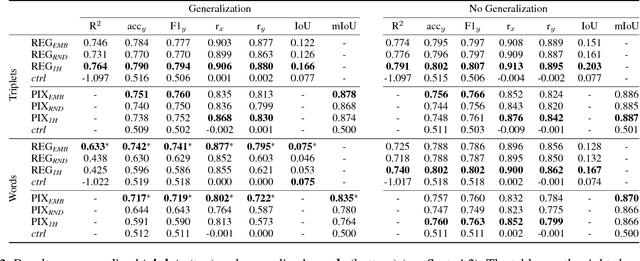

Spatial understanding is a fundamental problem with wide-reaching real-world applications. The representation of spatial knowledge is often modeled with spatial templates, i.e., regions of acceptability of two objects under an explicit spatial relationship (e.g., "on", "below"). In contrast with prior work that restricts spatial templates to explicit spatial prepositions (e.g., "glass on table"), here we extend this concept to implicit spatial language, i.e., those relationships (generally actions) for which the spatial arrangement of the objects is only implied (e.g., "man riding horse"). Unlike explicit relationships, predicting spatial arrangements from implicit spatial language requires significant common sense spatial understanding. We introduce the task of predicting spatial templates for two objects under a relationship, which can be seen as a spatial question-answering task with a continuous 2D output ("where is the man w.r.t. a horse when the man is walking the horse?"). We present two simple neural-based models that leverage annotated images and structured text to learn this task. The good performance of these models reveals that spatial locations are to a large extent predictable from implicit spatial language. Crucially, the models attain similar performance in a challenging generalized setting, where the object-relation-object combinations (e.g., "man walking dog") have never been seen before. Next, we go one step further by presenting the models with unseen objects (e.g., "dog"). In this scenario, we show that leveraging word embeddings enables the models to output accurate spatial predictions, proving that the models acquire solid common sense spatial knowledge allowing for such generalization.
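To make the input and output of the task concrete, here is a minimal sketch of how a (subject, relation, object) triple could be mapped to a continuous 2D location. It is not the models from the paper: the random vectors stand in for pretrained word embeddings, the single untrained linear layer stands in for a learned network, and all names (`make_embeddings`, `encode_triple`, `predict_location`) are hypothetical.

```python
import random

EMBED_DIM = 4  # toy size (assumption); real word embeddings are much larger


def make_embeddings(vocab, dim=EMBED_DIM, seed=0):
    """Give each word a fixed random vector, a stand-in for pretrained embeddings."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-1, 1) for _ in range(dim)] for word in vocab}


def encode_triple(embeddings, subject, relation, obj):
    """Concatenate the three word vectors into one input vector."""
    return embeddings[subject] + embeddings[relation] + embeddings[obj]


def predict_location(weights, bias, features):
    """Apply a linear map from the input vector to a 2D (x, y) output."""
    return tuple(
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, bias)
    )


vocab = ["man", "riding", "walking", "horse", "dog"]
embeddings = make_embeddings(vocab)

# Untrained weights (assumption): in practice these would be fit on
# annotated images that give the objects' relative positions.
rng = random.Random(1)
weights = [[rng.uniform(-0.1, 0.1) for _ in range(3 * EMBED_DIM)] for _ in range(2)]
bias = [0.0, 0.0]

features = encode_triple(embeddings, "man", "riding", "horse")
x, y = predict_location(weights, bias, features)
```

Because the input is built from word vectors rather than from an index per triple, the same function accepts a combination such as `("man", "walking", "dog")` even if that triple never appeared during training, which is the mechanism that makes the generalized setting possible in principle.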
