Accelerating Trajectory Generation for Quadrotors Using Transformers

Paper and Code

Mar 27, 2023

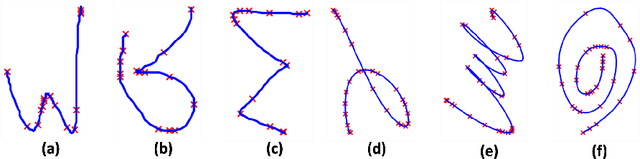

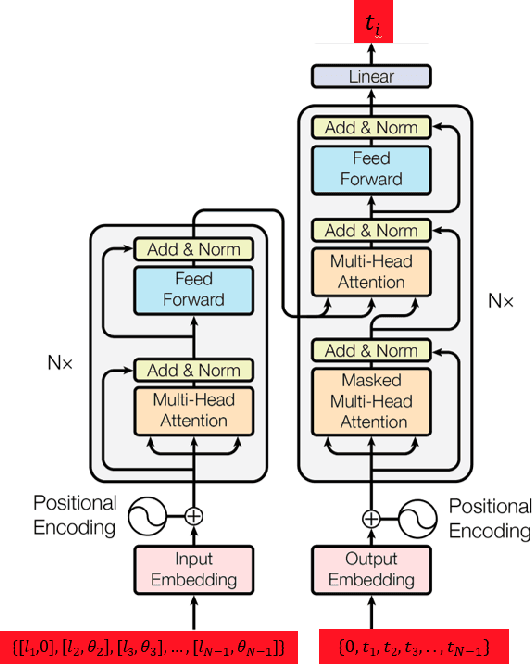

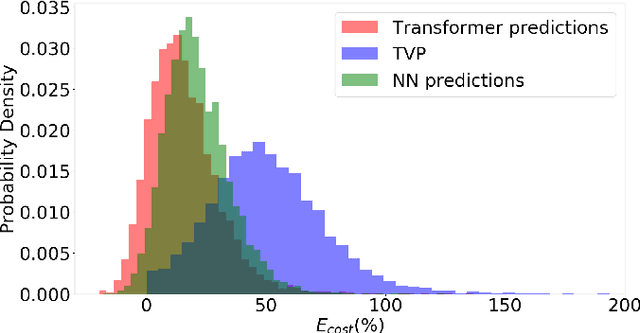

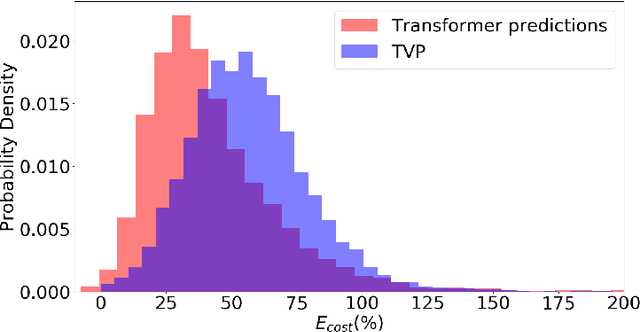

In this work, we address the computation time of trajectory generation for quadrotors. Most trajectory generation methods for quadrotor waypoint navigation, such as minimum snap/jerk and minimum-time methods, are structured as bi-level optimizations. The first level allocates time across the input waypoints, and the second level minimizes the snap/jerk of the trajectory under that time allocation. Such an optimization can be computationally expensive to solve. In our approach, we treat trajectory generation as a supervised learning problem between a sequential set of inputs and outputs. We adapt a transformer model to learn the optimal time allocations for a given set of input waypoints, thus reducing the problem to a single-step optimization. We demonstrate the performance of the transformer model by training it to predict the time allocations for a minimum snap trajectory generator. Compared to a feedforward network (FFN), the trained transformer model predicts accurate time allocations with fewer data samples and a smaller model size, demonstrating that it can model the sequential nature of the waypoint navigation problem.
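
To make the bi-level structure concrete, the following is a minimal Python sketch under strong simplifying assumptions; it is not the formulation or code used in this work. It assumes the vehicle comes to rest at every waypoint (zero velocity, acceleration, and jerk) and moves in a straight line between waypoints, in which case the minimum snap cost of a segment of length d flown in time T has the closed form 100800 d²/T⁷. It also assumes a fixed total flight time. The function names (`inner_snap_cost`, `allocate_times`) and the finite-difference gradient descent are illustrative choices only.

```python
import math

# Assumption: rest-to-rest segments, so the minimum snap cost of one segment
# of length d flown in time T is 100800 * d**2 / T**7.
SNAP_COEFF = 100800.0


def segment_lengths(waypoints):
    """Euclidean distance between consecutive waypoints."""
    return [math.dist(a, b) for a, b in zip(waypoints, waypoints[1:])]


def inner_snap_cost(lengths, times):
    """Inner level: total snap cost for a FIXED time allocation."""
    return sum(SNAP_COEFF * d * d / t ** 7 for d, t in zip(lengths, times))


def times_from_logits(logits, total_time):
    """Softmax parameterization: times stay positive and sum to total_time."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [total_time * e / s for e in exps]


def allocate_times(waypoints, total_time, steps=300, lr=0.03, eps=1e-5):
    """Outer level: gradient descent on the time allocation.

    Every gradient estimate calls the inner level once per segment,
    which is what makes the bi-level scheme expensive.
    Returns the segment times and the number of inner-level evaluations.
    """
    lengths = segment_lengths(waypoints)
    logits = [0.0] * len(lengths)
    inner_calls = 0

    def log_cost(z):
        nonlocal inner_calls
        inner_calls += 1
        return math.log(inner_snap_cost(lengths, times_from_logits(z, total_time)))

    for _ in range(steps):
        base = log_cost(logits)
        grad = []
        for j in range(len(logits)):
            bumped = list(logits)
            bumped[j] += eps  # forward finite difference on one logit
            grad.append((log_cost(bumped) - base) / eps)
        logits = [z - lr * g for z, g in zip(logits, grad)]

    return times_from_logits(logits, total_time), inner_calls


if __name__ == "__main__":
    wps = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 16.0, 0.0)]
    times, calls = allocate_times(wps, total_time=3.0)
    print("segment times:", [round(t, 3) for t in times])
    print("inner-level evaluations:", calls)
```

Even in this toy setting, the outer loop evaluates the inner level hundreds of times. A learned predictor that maps waypoints directly to segment times would replace the whole of `allocate_times` with one forward pass, leaving only a single inner solve.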
