Accelerating Multi-Model Inference by Merging DNNs of Different Weights

Paper and Code

Sep 28, 2020

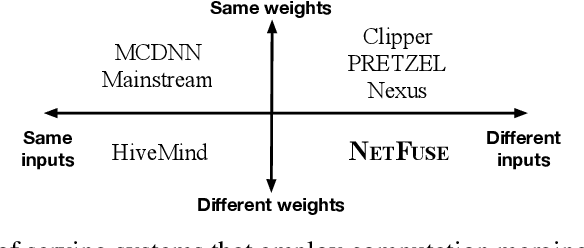

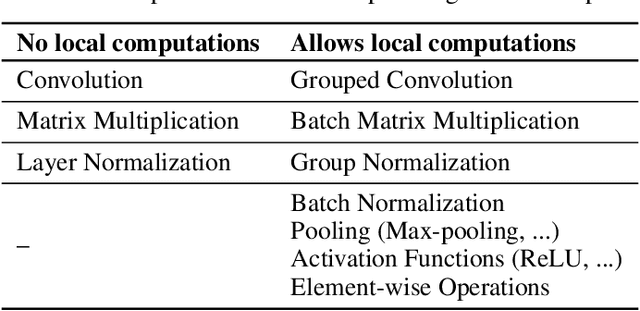

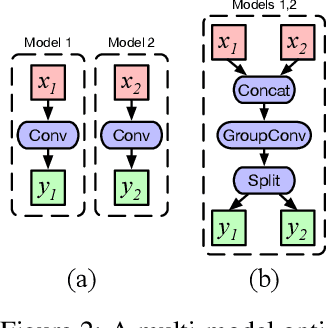

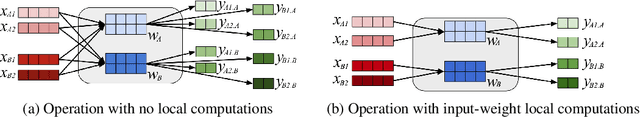

Standardized DNN models that have been proved to perform well on machine learning tasks are widely used and often adopted as-is to solve downstream tasks, forming the transfer learning paradigm. However, when serving multiple instances of such DNN models from a cluster of GPU servers, existing techniques to improve GPU utilization such as batching are inapplicable because models often do not share weights due to fine-tuning. We propose NetFuse, a technique of merging multiple DNN models that share the same architecture but have different weights and different inputs. NetFuse is made possible by replacing operations with more general counterparts that allow a set of weights to be associated with only a certain set of inputs. Experiments on ResNet-50, ResNeXt-50, BERT, and XLNet show that NetFuse can speed up DNN inference time up to 3.6x on a NVIDIA V100 GPU, and up to 3.0x on a TITAN Xp GPU when merging 32 model instances, while only using up a small additional amount of GPU memory.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge