A Unified Framework for Lifelong Learning in Deep Neural Networks

Nov 28, 2019

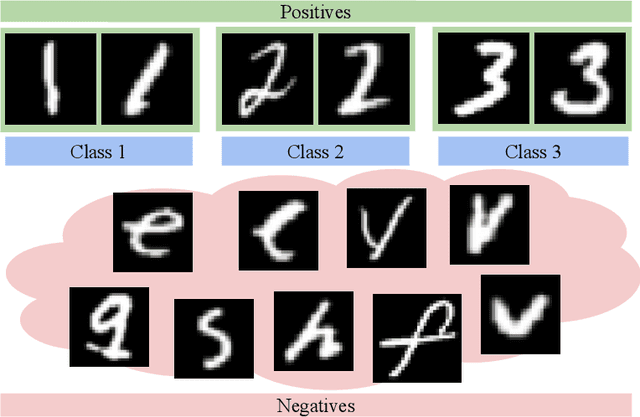

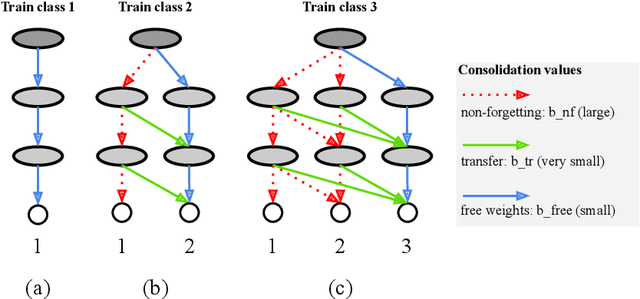

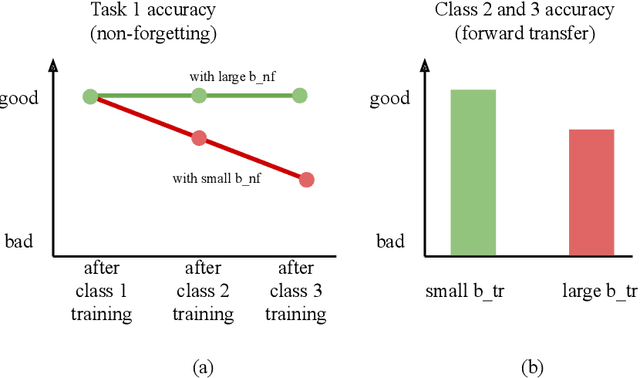

Humans can learn a variety of concepts and skills incrementally over the course of their lives while exhibiting an array of desirable properties, such as non-forgetting, concept rehearsal, forward transfer and backward transfer of knowledge, few-shot learning, and selective forgetting. Previous approaches to lifelong machine learning can only demonstrate subsets of these properties, often by combining multiple complex mechanisms. In this Perspective, we propose a powerful unified framework that can demonstrate all of the properties by utilizing a small number of weight consolidation parameters in deep neural networks. In addition, we are able to draw many parallels between the behaviours and mechanisms of our proposed framework and those surrounding human learning, such as memory loss or sleep deprivation. This Perspective serves as a conduit for two-way inspiration to further understand lifelong learning in machines and humans.
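To make the idea of weight consolidation parameters concrete, the sketch below shows one common form of consolidation: a per-weight quadratic penalty that pulls each weight toward a stored anchor value. This is an illustrative assumption, not the framework's actual formulation, which the abstract does not specify. The names `consolidation_penalty`, `consolidated_step`, `anchors`, and `strengths` are hypothetical. A strength of zero leaves a weight free to change, while a larger strength keeps it near its anchor, loosely mirroring the contrast between forgetting and non-forgetting.

```python
# Minimal sketch, assuming a per-weight quadratic consolidation penalty.
# All names here are hypothetical and chosen for illustration only.

def consolidation_penalty(weights, anchors, strengths):
    """Return sum_i b_i * (w_i - a_i)^2 over all weights."""
    return sum(b * (w - a) ** 2 for w, a, b in zip(weights, anchors, strengths))


def consolidated_step(weights, task_grads, anchors, strengths, lr=0.1):
    """One gradient-descent step on (task loss + consolidation penalty).

    The gradient of b * (w - a)^2 with respect to w is 2 * b * (w - a).
    """
    return [
        w - lr * (g + 2.0 * b * (w - a))
        for w, g, a, b in zip(weights, task_grads, anchors, strengths)
    ]


# Toy run: a constant task gradient pushes both weights downward.
# Weight 0 is unconsolidated (strength 0); weight 1 is consolidated (strength 4).
anchors = [1.0, 1.0]
strengths = [0.0, 4.0]
weights = list(anchors)
for _ in range(50):
    weights = consolidated_step(weights, [1.0, 1.0], anchors, strengths)
```

In this toy run the unconsolidated weight drifts steadily away from its anchor, while the consolidated weight settles where the task gradient and the pull toward the anchor balance, close to its original value.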
