A theoretical treatment of conditional independence testing under Model-X


May 12, 2020

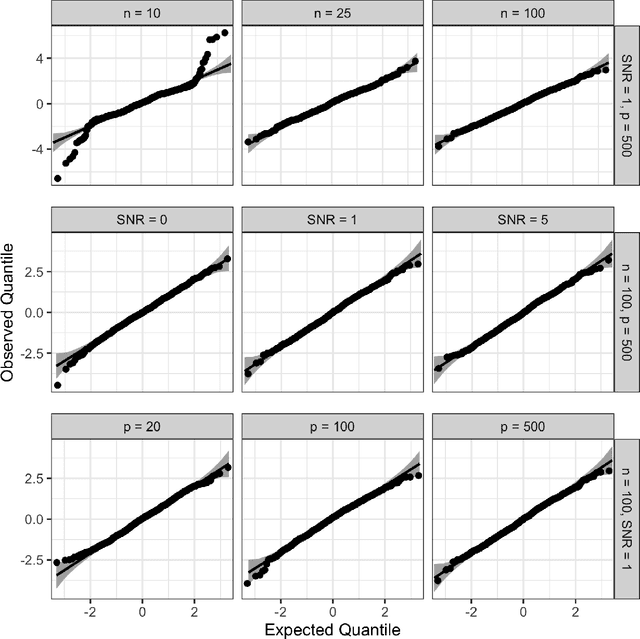

For testing conditional independence (CI) of a response $Y$ and a predictor $X$ given covariates $Z$, the recently introduced model-X (MX) framework has been the subject of active methodological research, especially in the context of MX knockoffs and their successful application to genome-wide association studies. In this paper, we build a theoretical foundation for the MX CI problem, yielding quantitative explanations for empirically observed phenomena and novel insights to guide the design of MX methodology. We focus our analysis on the conditional randomization test (CRT), whose validity conditional on $Y,Z$ allows us to view it as a test of a point null hypothesis involving the conditional distribution of $X$. We use the Neyman-Pearson lemma to derive an intuitive most-powerful CRT statistic against a point alternative, as well as an analogous result for MX knockoffs. We define MX analogs of $t$- and $F$-tests and derive their power against local semiparametric alternatives using Le Cam's local asymptotic normality theory, explicitly capturing the prediction error of the underlying machine learning procedure. Importantly, all our results hold conditionally on $Y,Z$, almost surely in $Y,Z$. Finally, we define nonparametric notions of effect size and derive consistent estimators inspired by semiparametric statistics. Thus, this work forms explicit, and previously underexplored, bridges from MX to both classical statistics (testing) and modern causal inference (estimation).
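To make the CRT concrete, here is a minimal Python sketch of the generic resampling procedure: hold $Y,Z$ fixed, redraw $X$ from its conditional distribution given $Z$, and compare the observed statistic with the resampled ones. Everything specific in it is an assumption for illustration and is not taken from the paper: the Gaussian model $X \mid Z \sim N(0.5Z, 1)$, the covariance-type statistic, the simulated data, and the names `crt_pvalue`, `sample_x_given_z`, and `statistic`. In particular, the statistic is not the most-powerful statistic the abstract refers to.

```python
import numpy as np


def crt_pvalue(x, y, z, sample_x_given_z, statistic, n_resamples=500, seed=0):
    """Monte Carlo CRT p-value, computed conditionally on (y, z)."""
    rng = np.random.default_rng(seed)
    t_obs = statistic(x, y, z)
    # Resample X from its (assumed known) law given Z; y and z stay fixed.
    t_null = np.array([
        statistic(sample_x_given_z(z, rng), y, z) for _ in range(n_resamples)
    ])
    # The +1 terms count the observed statistic among the resamples, which
    # yields a finite-sample valid p-value when the law of X | Z is correct.
    return (1 + np.sum(t_null >= t_obs)) / (1 + n_resamples)


# Hypothetical model-X assumption: X | Z ~ N(0.5 * Z, 1).
def sample_x_given_z(z, rng):
    return 0.5 * z + rng.standard_normal(z.shape[0])


# Hypothetical statistic: |sum_i (X_i - E[X_i | Z_i]) * Y_i|.
def statistic(x, y, z):
    return abs(np.dot(x - 0.5 * z, y))


# Simulated data (illustrative only).
rng = np.random.default_rng(1)
n = 300
z = rng.standard_normal(n)
x = sample_x_given_z(z, rng)
y_null = z + rng.standard_normal(n)            # Y independent of X given Z
y_alt = z + 1.0 * x + rng.standard_normal(n)   # Y depends on X given Z

p_null = crt_pvalue(x, y_null, z, sample_x_given_z, statistic)
p_alt = crt_pvalue(x, y_alt, z, sample_x_given_z, statistic)
print(p_null, p_alt)
```

Because only $X$ is resampled, the statistic may use any function of $Y$ and $Z$, including a fitted machine learning model, without affecting validity; the choice of statistic affects only power.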
