A Survey on Surrogate-assisted Efficient Neural Architecture Search


Jun 03, 2022

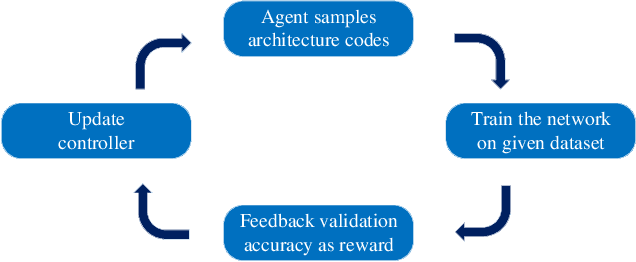

Neural architecture search (NAS) has recently become increasingly popular in the deep learning community, mainly because it allows interested users without rich expertise to benefit from the success of deep neural networks (DNNs). However, NAS remains laborious and time-consuming: the search process requires a large number of performance estimations, and training DNNs is computationally intensive. Improving efficiency is therefore essential to the design of NAS. This paper begins with a brief introduction to the general framework of NAS. Then, methods for evaluating network candidates under proxy metrics are systematically discussed. This is followed by a description of surrogate-assisted NAS, which is divided into three categories: Bayesian optimization for NAS, surrogate-assisted evolutionary algorithms for NAS, and multi-objective optimization (MOP) for NAS. Finally, remaining challenges and open research questions are discussed, and promising research topics in this emerging field are suggested.
