A Survey of Embodied AI: From Simulators to Research Tasks

Paper and Code

Mar 14, 2021

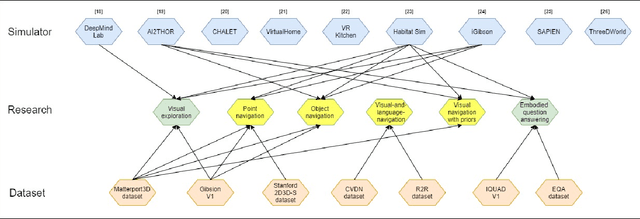

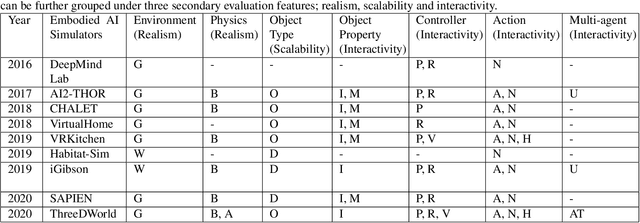

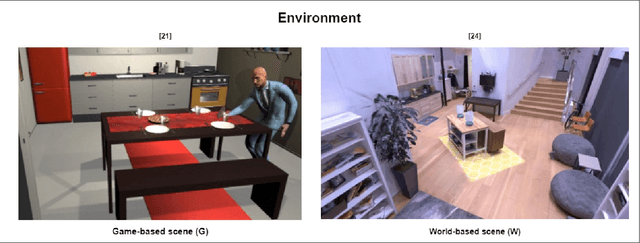

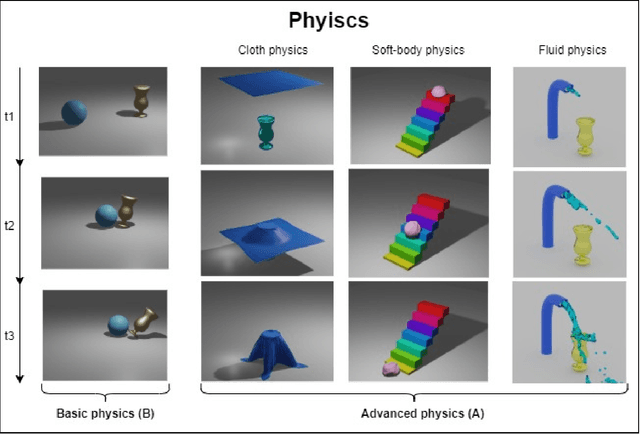

There has been an emerging paradigm shift from the era of "internet AI" to "embodied AI", whereby AI algorithms and agents no longer simply learn from datasets of images, videos or text curated primarily from the internet. Instead, they learn through embodied physical interactions with their environments, whether real or simulated. Consequently, there has been substantial growth in the demand for embodied AI simulators to support a diversity of embodied AI research tasks. This growing interest in embodied AI is beneficial to the greater pursuit of artificial general intelligence, but there is no contemporary and comprehensive survey of this field. This paper comprehensively surveys state-of-the-art embodied AI simulators and research, mapping connections between these. By benchmarking nine state-of-the-art embodied AI simulators in terms of seven features, this paper aims to understand the simulators in their provision for use in embodied AI research. Finally, based upon the simulators and a pyramidal hierarchy of embodied AI research tasks, this paper surveys the main research tasks in embodied AI -- visual exploration, visual navigation and embodied question answering (QA), covering the state-of-the-art approaches, evaluation and datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge