A study of traits that affect learnability in GANs

Nov 27, 2020

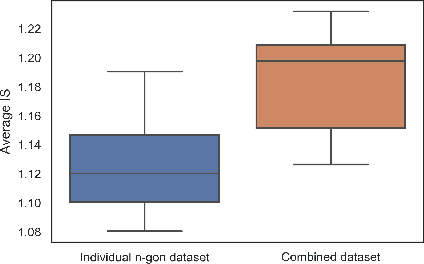

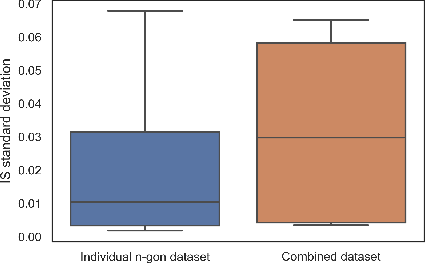

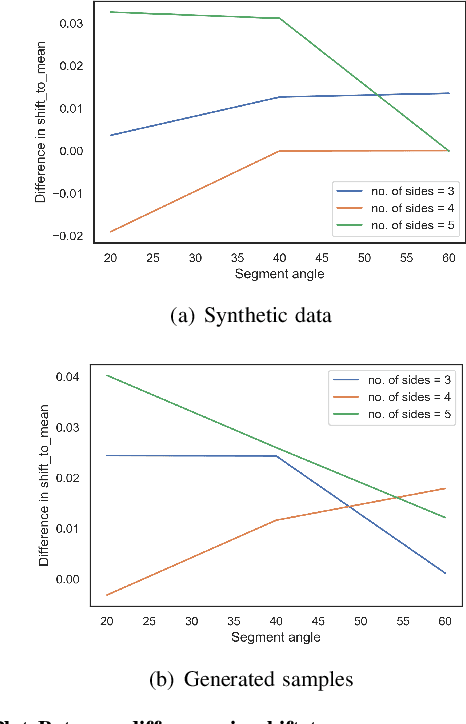

Generative Adversarial Networks (GANs) are algorithmic architectures that pit two neural networks against each other in order to generate new, synthetic instances of data that can pass for real data. Training a GAN is a challenging problem that requires advanced techniques such as hyperparameter tuning and architecture engineering. Many different losses, regularization and normalization schemes, and network architectures have been proposed to address this problem for different types of datasets. It is therefore necessary to understand these experimental observations and derive a simple theory that explains them. In this paper, we perform empirical experiments using parameterized synthetic datasets to probe which traits affect learnability.
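To make the idea of a parameterized synthetic dataset concrete, here is a minimal sketch of one such dataset: a 2-D mixture of Gaussians placed evenly on a circle. This is an illustrative assumption, not the dataset used in the paper. The function name `sample_ring_mixture` and the parameters `n_modes`, `radius`, and `std` are hypothetical; they stand in for the kind of knobs (number of modes, mode separation, mode width) one might vary to probe learnability.

```python
import math
import random


def sample_ring_mixture(n_samples, n_modes=8, radius=1.0, std=0.05, seed=0):
    """Draw 2-D points from n_modes Gaussians spaced evenly on a circle.

    Hypothetical example dataset, not taken from the paper.
    Returns a list of (x, y, mode_index) tuples.
    """
    rng = random.Random(seed)  # local generator so results are reproducible
    points = []
    for _ in range(n_samples):
        k = rng.randrange(n_modes)            # pick a mode uniformly at random
        angle = 2.0 * math.pi * k / n_modes   # centre of mode k on the circle
        x = radius * math.cos(angle) + rng.gauss(0.0, std)
        y = radius * math.sin(angle) + rng.gauss(0.0, std)
        points.append((x, y, k))
    return points


if __name__ == "__main__":
    # Sweep one trait (number of modes) while holding the others fixed.
    for n_modes in (2, 8, 32):
        data = sample_ring_mixture(1000, n_modes=n_modes)
        covered = len({k for _, _, k in data})
        print(f"n_modes={n_modes}: {covered} modes present in the sample")
```

Sweeping a single parameter while fixing the rest gives a family of datasets that differ in exactly one trait, so any change in how well a GAN fits them can be attributed to that trait.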
