A Study of the Human Perception of Synthetic Faces

Nov 08, 2021

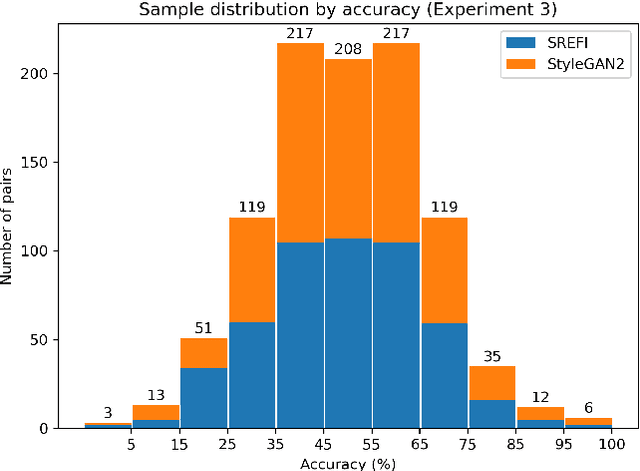

Advances in face synthesis have raised alarms about the deceptive use of synthetic faces. Can synthetic identities be effectively used to fool human observers? In this paper, we introduce a study of the human perception of synthetic faces generated using different strategies, including a state-of-the-art deep learning-based GAN model. This is the first rigorous study of the effectiveness of synthetic face generation techniques that is grounded in experimental techniques from psychology. We address two questions in particular. How often do GAN-based and more traditional image processing-based techniques confuse human observers? Are there subtle cues within a synthetic face image that cause humans to perceive it as fake without having to search for obvious clues? To answer these questions, we conducted a series of large-scale crowdsourced behavioral experiments with different sources of face imagery. Results show that humans are unable to distinguish synthetic faces from real faces under several different circumstances. This finding has serious implications for many applications in which face images are presented to human users.
