A Stochastic Neural Network for Attack-Agnostic Adversarial Robustness


Oct 17, 2020

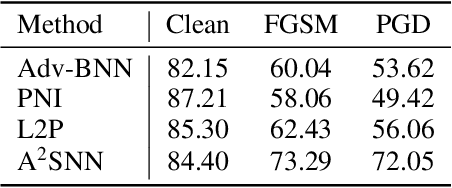

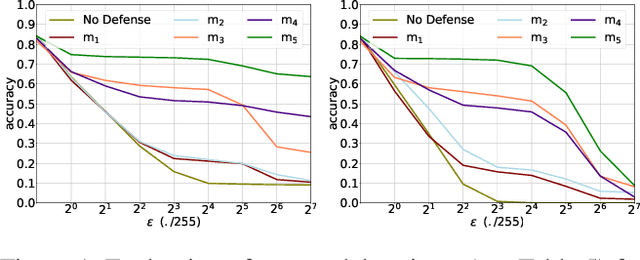

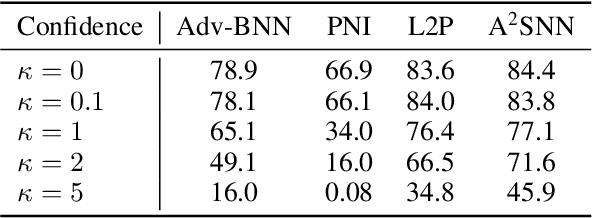

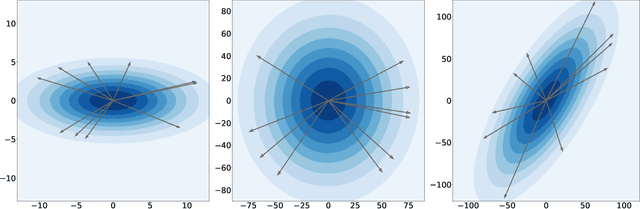

Stochastic Neural Networks (SNNs) that inject noise into their hidden layers have recently been shown to achieve strong robustness against adversarial attacks. However, existing SNNs are usually heuristically motivated, and further rely on adversarial training, which is computationally costly and biases models' defense towards a specific attack. We propose a new SNN that achieves state-of-the-art performance without relying on adversarial training, and enjoys solid theoretical justification. Specifically, while existing SNNs inject learned or hand-tuned isotropic noise, our SNN learns an anisotropic noise distribution to optimize a learning-theoretic bound on adversarial robustness. We evaluate our method on three benchmarks (CIFAR-10, SVHN, F-MNIST), show that it can be applied to different architectures (ResNet-18, LeNet++), and that it provides robustness to a variety of white-box and black-box attacks, while being simple and fast to train compared to existing alternatives. The source code is openly available on GitHub: https://github.com/peustr/A2SNN.
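The abstract contrasts the isotropic noise used by existing SNNs with the anisotropic noise learned by the proposed SNN. The sketch below, in plain Python, illustrates only that distinction for a single hidden-activation vector. Isotropic noise has covariance σ²I, the same scale in every direction. Anisotropic noise is modeled here as L·ε with ε ~ N(0, I), which gives covariance Σ = L Lᵀ, so scale and correlations vary by direction. Several things are assumptions for illustration and not the paper's implementation: the lower-triangular parameterization of Σ, the function names `add_isotropic_noise`, `add_anisotropic_noise` and `empirical_covariance`, and the fixed values of L. The sketch also does not learn L or optimize the robustness bound.

```python
import random


def add_isotropic_noise(h, sigma, rng):
    # Isotropic: every hidden unit gets independent N(0, sigma^2) noise,
    # so the noise covariance is sigma^2 * I (same scale in every direction).
    return [x + sigma * rng.gauss(0.0, 1.0) for x in h]


def add_anisotropic_noise(h, L, rng):
    # Anisotropic (assumed parameterization): mix independent standard normals
    # through a lower-triangular matrix L, giving noise with covariance L @ L^T.
    # In a trained SNN the entries of L would be learned; here they are fixed.
    d = len(h)
    eps = [rng.gauss(0.0, 1.0) for _ in range(d)]
    noise = [sum(L[i][j] * eps[j] for j in range(i + 1)) for i in range(d)]
    return [x + n for x, n in zip(h, noise)]


def empirical_covariance(samples):
    # Unbiased sample covariance of a list of equal-length vectors.
    n, d = len(samples), len(samples[0])
    mean = [sum(s[i] for s in samples) / n for i in range(d)]
    return [
        [sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / (n - 1)
         for j in range(d)]
        for i in range(d)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    h = [0.0, 0.0]            # hypothetical 2-unit hidden activation
    L = [[1.0, 0.0],
         [0.8, 0.6]]          # L @ L^T = [[1.0, 0.8], [0.8, 1.0]]
    iso = [add_isotropic_noise(h, 0.5, rng) for _ in range(20000)]
    aniso = [add_anisotropic_noise(h, L, rng) for _ in range(20000)]
    print("isotropic cov:  ", empirical_covariance(iso))
    print("anisotropic cov:", empirical_covariance(aniso))
```

With enough samples, the isotropic covariance comes out close to 0.25·I, with no correlation between units. The anisotropic covariance comes out close to L Lᵀ, with a strong positive correlation between the two units. This direction-dependent structure is what a learned anisotropic distribution can express and a single σ cannot.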
