A Spiking Neural Learning Classifier System

Jan 16, 2012

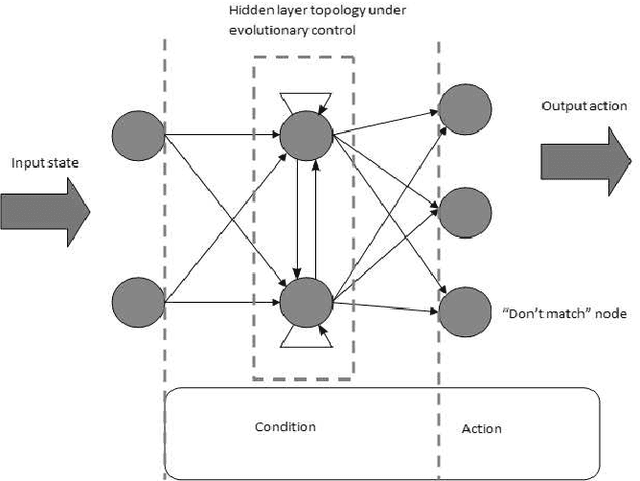

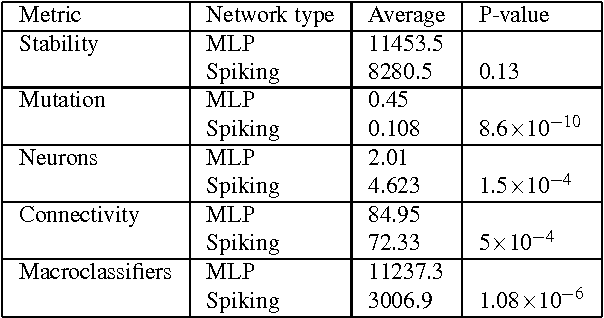

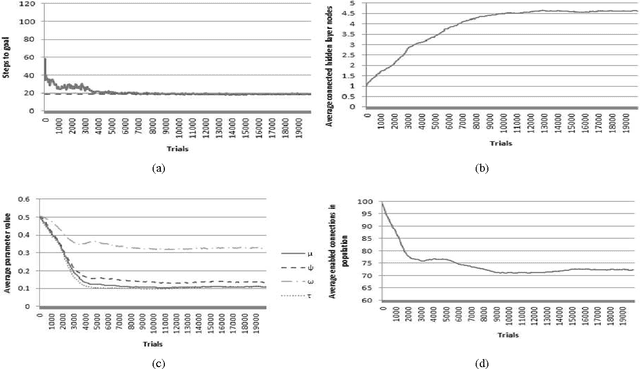

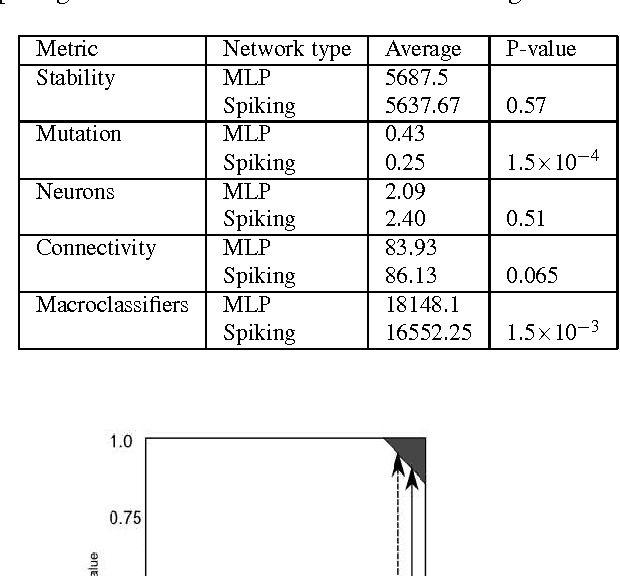

Learning Classifier Systems (LCS) are population-based reinforcement learners used in a wide variety of applications. This paper presents an LCS in which each traditional rule is represented by a spiking neural network, a type of network with dynamic internal state. We employ a constructivist model of growth of both neurons and dendrites that realises flexible learning by evolving structures of sufficient complexity to solve a well-known problem involving continuous, real-valued inputs. Additionally, we extend the system to enable temporal state decomposition. By chaining together sequences of heterogeneous actions into macro-actions, our LCS is shown to perform optimally in a problem where traditional methods can fail to find a solution in a reasonable amount of time. Our final system is tested on a simulated robotics platform.
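
To illustrate what "dynamic internal state" means for a spiking neuron, here is a minimal sketch of a leaky integrate-and-fire unit. The abstract does not specify the neuron model, so the leaky integrate-and-fire form, the class name `LIFNeuron`, the helper `spike_train`, and all parameter values are assumptions chosen for illustration, not the paper's implementation.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (assumed model, illustrative parameters)."""

    leak: float = 0.9        # fraction of membrane potential retained each step
    threshold: float = 1.0   # potential at which the neuron emits a spike
    reset: float = 0.0       # potential the neuron returns to after a spike
    potential: float = 0.0   # internal state carried between time steps

    def step(self, input_current: float) -> bool:
        """Advance one time step and report whether the neuron spiked."""
        # Decay the stored potential, then integrate the new input.
        self.potential = self.leak * self.potential + input_current
        if self.potential >= self.threshold:
            self.potential = self.reset
            return True
        return False


def spike_train(neuron: LIFNeuron, inputs: Iterable[float]) -> List[bool]:
    """Feed a sequence of inputs to the neuron and collect its spikes."""
    return [neuron.step(x) for x in inputs]


if __name__ == "__main__":
    # A constant sub-threshold input still produces a spike once enough
    # potential has accumulated, so the output depends on input history.
    print(spike_train(LIFNeuron(), [0.4] * 5))
```

The same input value yields different outputs at different time steps because the membrane potential persists between calls. That is the property that distinguishes a spiking network from a stateless rule condition.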
