A Simple Approach to Jointly Rank Passages and Select Relevant Sentences in the OBQA Context

Paper and Code

Sep 22, 2021

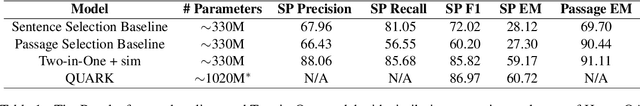

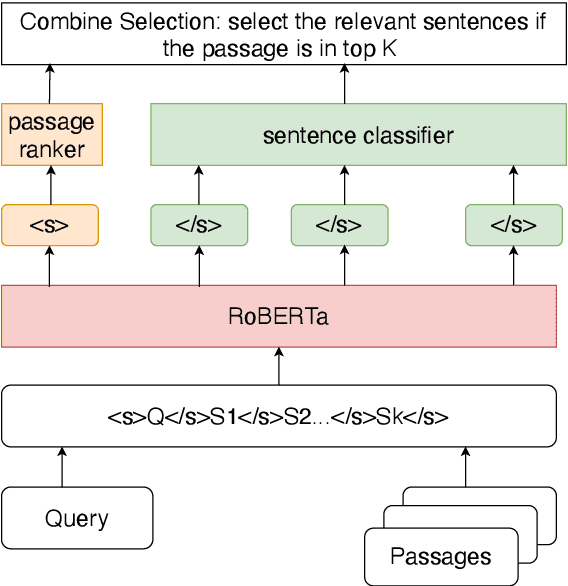

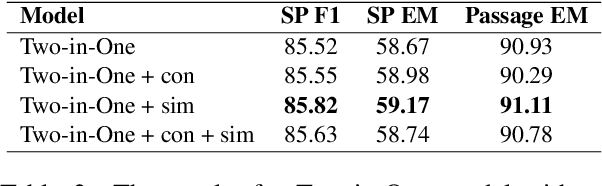

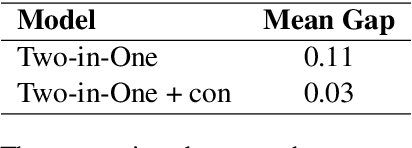

In the open-book question answering (OBQA) task, selecting the relevant information from a large corpus is crucial for reasoning and inference. Some datasets (e.g., HotpotQA) mainly focus on testing the model's reasoning ability at the sentence level. To overcome this challenge, many existing frameworks use a deep learning model to select relevant passages and then answer each question by matching a sentence in the corresponding passage. However, such frameworks require long inference time and fail to take advantage of the relationship between passages and sentences. In this work, we present a simple yet effective framework that addresses these problems by jointly ranking passages and selecting sentences. We propose consistency and similarity constraints to promote the correlation and interaction between passage ranking and sentence selection. In our experiments, we demonstrate that our framework achieves competitive results and outperforms the baseline by 28% in terms of exact matching of relevant sentences on the HotpotQA dataset.
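To make the reported metric concrete, the following is a minimal sketch of how exact matching of relevant sentences could be computed. It assumes each sentence is identified by a (passage title, sentence index) pair and that a question counts as a match only when the predicted set equals the gold set. The identifier scheme and the function names `sentence_exact_match` and `mean_exact_match` are hypothetical and are not taken from the paper's code.

```python
from typing import Iterable, List, Tuple

# Assumption: a sentence is identified by (passage title, sentence index).
SentenceId = Tuple[str, int]


def sentence_exact_match(predicted: Iterable[SentenceId],
                         gold: Iterable[SentenceId]) -> float:
    """Return 1.0 if the predicted sentence set equals the gold set, else 0.0."""
    return 1.0 if set(predicted) == set(gold) else 0.0


def mean_exact_match(predictions: List[List[SentenceId]],
                     golds: List[List[SentenceId]]) -> float:
    """Average the per-question exact match over a list of questions."""
    if len(predictions) != len(golds):
        raise ValueError("predictions and golds must have the same length")
    if not predictions:
        return 0.0
    scores = [sentence_exact_match(p, g) for p, g in zip(predictions, golds)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy data for illustration only; not drawn from HotpotQA.
    preds = [[("A", 0), ("B", 2)], [("C", 1)]]
    golds = [[("B", 2), ("A", 0)], [("C", 1), ("D", 0)]]
    print(mean_exact_match(preds, golds))
```

Under this definition, order does not matter, and a prediction that misses or adds even one sentence scores zero for that question.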
