A Self-Supervised, Differentiable Kalman Filter for Uncertainty-Aware Visual-Inertial Odometry

Mar 14, 2022

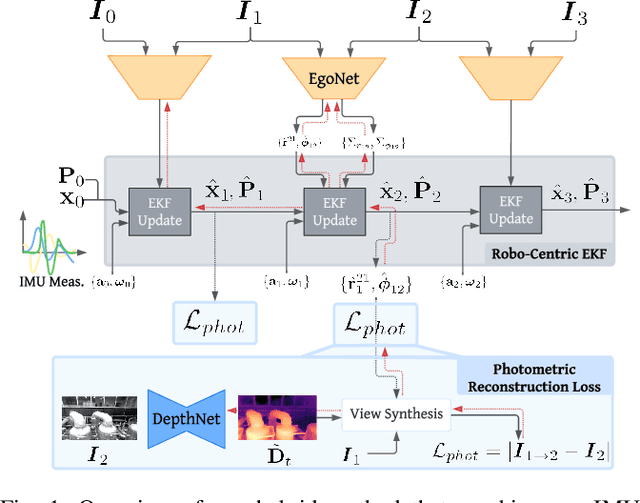

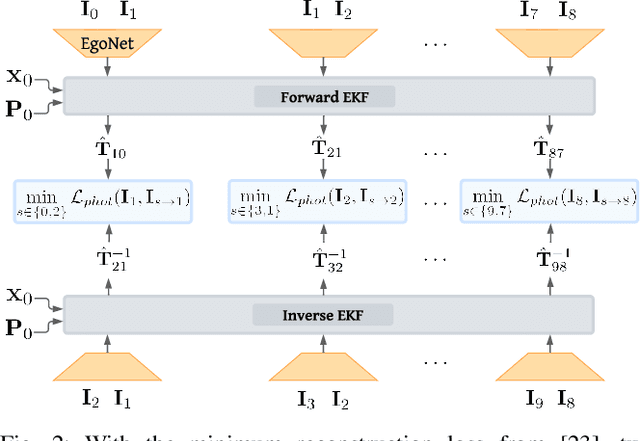

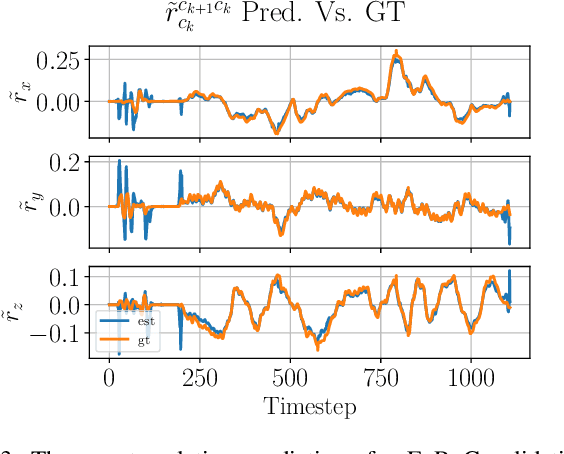

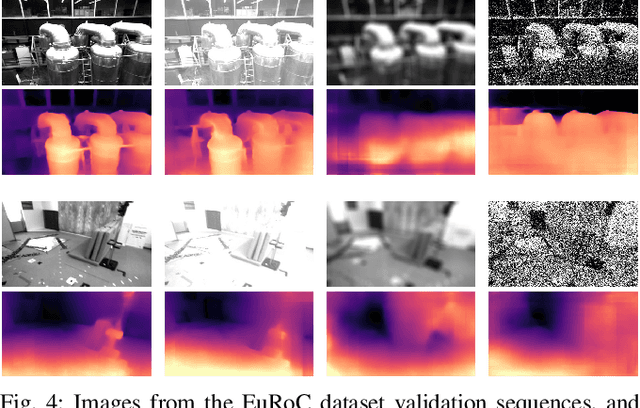

Traditionally, visual-inertial odometry (VIO) systems rely on filtering- or optimization-based frameworks for robot egomotion estimation. While these methods are accurate under nominal conditions, they are prone to failure in degraded environments, where illumination changes, fast camera motion, or textureless scenes are present. Learning-based systems have the potential to outperform classical implementations in degraded environments, but are currently less accurate than classical methods in nominal settings. A third class, hybrid systems, attempts to combine the advantages of both. Herein, we introduce a framework for training a hybrid VIO system. Our approach uses a differentiable Kalman filter with an IMU-based process model and a robust, neural network-based relative pose measurement model. By exploiting the data efficiency of self-supervised learning, we show that our system significantly outperforms a similar, supervised system, while enabling online retraining. To demonstrate the utility of our approach, we evaluate our system on a visually degraded version of the EuRoC dataset. Notably, in cases where classical estimators consistently diverge, our estimator neither diverges nor suffers a significant reduction in accuracy. Finally, by properly using the metric information contained in the IMU measurements, our system is able to recover metric scale, which other self-supervised monocular VIO approaches cannot.
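
To make the filter structure concrete, the following is a minimal one-dimensional sketch of a Kalman predict/update cycle in which an IMU acceleration sample drives the process model and a relative displacement with its own variance plays the role of the learned measurement. It is not the authors' implementation: the scalar velocity state, the function names `predict` and `update`, and the parameters `q` and `r` are all assumptions made for illustration. Every step is plain smooth arithmetic, which is the property that would let an automatic differentiation framework backpropagate through the filter; the sketch itself does not perform any differentiation or training.

```python
def predict(v, P, accel, dt, q):
    """Process model (assumed 1D toy): integrate one IMU acceleration sample.

    v, P  : velocity estimate and its variance
    accel : acceleration reading over the interval
    dt    : interval length in seconds
    q     : process noise variance added per step (hypothetical tuning value)
    """
    v_pred = v + accel * dt
    P_pred = P + q
    return v_pred, P_pred


def update(v, P, z, r, dt):
    """Measurement update with a relative displacement z over dt.

    In a hybrid system, z and its variance r would be produced by a network;
    here they are simply passed in as numbers.
    """
    H = dt                      # measurement model: z = v * dt
    S = H * P * H + r           # innovation variance
    K = P * H / S               # Kalman gain
    v_new = v + K * (z - H * v)
    P_new = (1.0 - K * H) * P
    return v_new, P_new


# One filter cycle: propagate with the IMU, then correct with the measurement.
v, P = predict(0.0, 1.0, accel=2.0, dt=0.5, q=0.0)
v, P = update(v, P, z=1.0, r=0.25, dt=0.5)
print(v, P)
```

A larger measurement variance `r` shrinks the gain `K`, so an uncertain visual measurement moves the estimate less and the IMU-driven prediction dominates. This is the mechanism by which an uncertainty-aware measurement model can remain stable when the images are degraded.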
