A Scalable Multilabel Classification to Deploy Deep Learning Architectures For Edge Devices


Nov 07, 2019

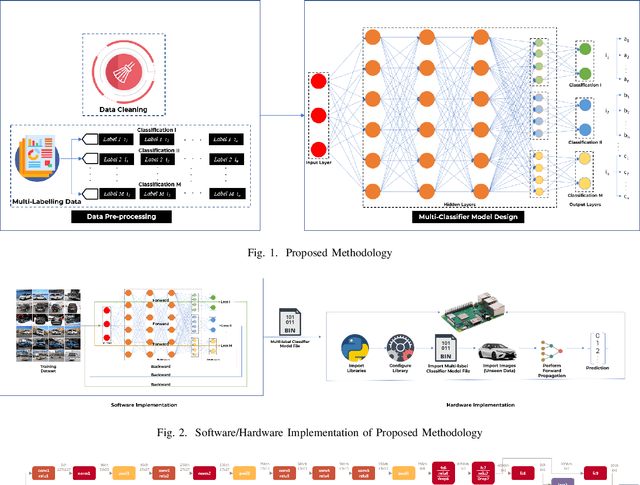

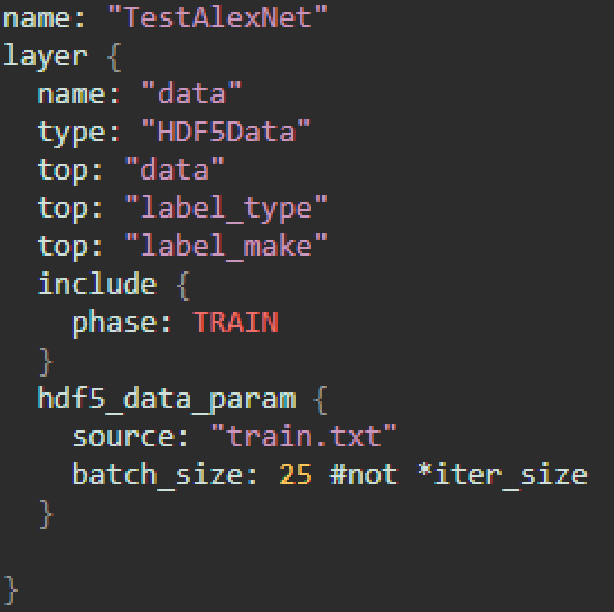

Convolutional Neural Networks (CNNs) have performed well in many applications such as object detection, pattern recognition, and video surveillance. CNNs carry out feature extraction on labelled data to perform classification. Multi-label classification assigns more than one label to a particular data sample in a data set. In multi-label classification, properties of a data point that are considered to be mutually exclusive are classified. However, existing multi-label classification requires some form of data pre-processing, such as cropping or tiling the training images. The computation and memory requirements of these multi-label CNN models make their deployment on edge devices challenging. In this paper, we propose a methodology that solves this problem by extending the capability of existing multi-label classification, providing models with lower latency that require less memory when deployed on edge devices. We make use of a single CNN model designed with multiple loss layers and multiple accuracy layers. This methodology is tested on state-of-the-art deep learning architectures such as AlexNet, GoogleNet and SqueezeNet using the Stanford Cars Dataset and deployed on a Raspberry Pi 3. From the results, the proposed methodology achieves comparable accuracy with 1.8x fewer MACC operations, a 0.97x reduction in latency, and 0.5x, 0.84x and 0.97x reductions in size for the generated AlexNet, GoogleNet and SqueezeNet CNN models respectively, when compared to conventional ways of achieving multi-label classification such as hard-coding multi-label instances into single labels. The methodology also yields CNN models with 50% fewer MACC operations and a 50% reduction in latency and size for the generated versions of AlexNet, GoogleNet and SqueezeNet, when compared to the conventional approach of using 2 different single-labelled models to achieve multi-label classification.
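The idea of a single model with multiple loss layers and multiple accuracy layers can be illustrated with a minimal sketch in plain Python. This is not the authors' implementation: the head names (`make`, `type`), the class counts, the feature size, and the choice to sum the per-head losses with equal weight are all assumptions made for illustration. A random feature vector stands in for the output of the shared convolutional backbone.

```python
import math
import random


def linear(x, weights, bias):
    """Dense layer: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def softmax_cross_entropy(logits, target):
    """Cross-entropy of softmax(logits) against an integer class index."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def init_head(rng, n_classes, n_features):
    """Randomly initialised classifier head (weights, bias)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)]
               for _ in range(n_classes)]
    return weights, [0.0] * n_classes


def multi_head_forward(features, heads, targets):
    """Apply every head to the same shared features.

    Returns (total_loss, per_head_losses, per_head_correct): one loss and
    one correctness flag per label, mirroring one loss layer and one
    accuracy layer per label.
    """
    losses, correct = {}, {}
    for name, (weights, bias) in heads.items():
        logits = linear(features, weights, bias)
        losses[name] = softmax_cross_entropy(logits, targets[name])
        correct[name] = argmax(logits) == targets[name]
    # Assumption: equal-weight sum of the per-head losses.
    return sum(losses.values()), losses, correct


rng = random.Random(0)
N_FEATURES = 8  # hypothetical size of the shared backbone output
heads = {
    "make": init_head(rng, 5, N_FEATURES),  # hypothetical label with 5 classes
    "type": init_head(rng, 3, N_FEATURES),  # hypothetical label with 3 classes
}
features = [rng.uniform(0.0, 1.0) for _ in range(N_FEATURES)]
total, losses, correct = multi_head_forward(
    features, heads, {"make": 2, "type": 1}
)
print(total, losses, correct)
```

In this toy setup the two heads expose 5 + 3 = 8 output units on one shared feature extractor, whereas hard-coding every label combination into a single label would need up to 5 × 3 = 15 output classes, and two separate single-label models would each need their own feature extractor.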
