A Safety-Oriented Self-Learning Algorithm for Autonomous Driving: Evolution Starting from a Basic Model

Paper and Code

Aug 22, 2024

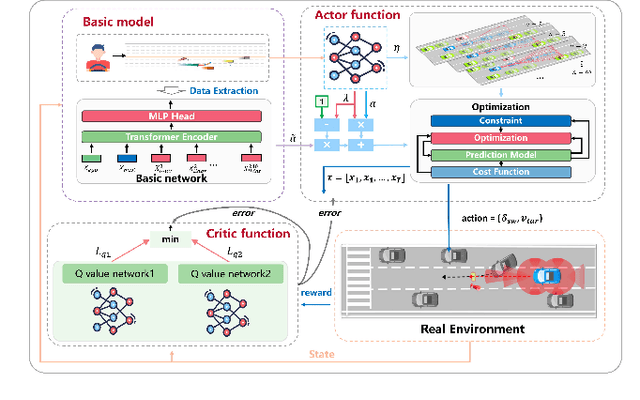

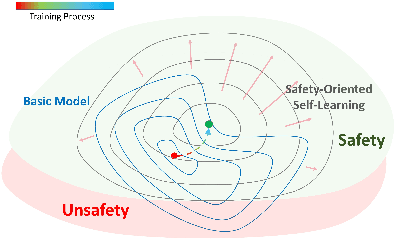

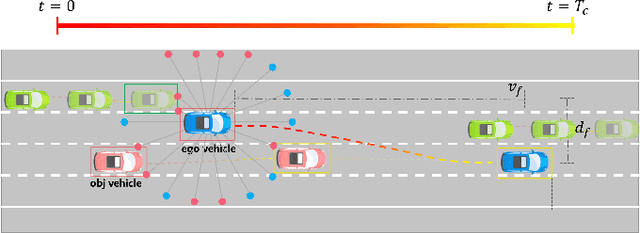

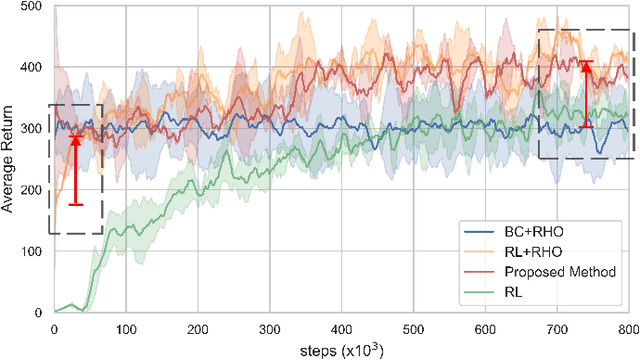

Autonomous driving vehicles with self-learning capabilities are expected to evolve in complex environments to improve their ability to cope with different scenarios. However, most self-learning algorithms suffer from low learning efficiency and lacking safety, which limits their applications. This paper proposes a safety-oriented self-learning algorithm for autonomous driving, which focuses on how to achieve evolution from a basic model. Specifically, a basic model based on the transformer encoder is designed to extract and output policy features from a small number of demonstration trajectories. To improve the learning efficiency, a policy mixed approach is developed. The basic model provides initial values to improve exploration efficiency, and the self-learning algorithm enhances the adaptability and generalization of the model, enabling continuous improvement without external intervention. Finally, an actor approximator based on receding horizon optimization is designed considering the constraints of the environmental input to ensure safety. The proposed method is verified in a challenging mixed traffic environment with pedestrians and vehicles. Simulation and real-vehicle test results show that the proposed method can safely and efficiently learn appropriate autonomous driving behaviors. Compared reinforcement learning and behavior cloning methods, it can achieve comprehensive improvement in learning efficiency and performance under the premise of ensuring safety.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge