A Real-time and Hardware Efficient Artefact-free Spike Sorting Using Deep Spike Detection

Apr 19, 2025

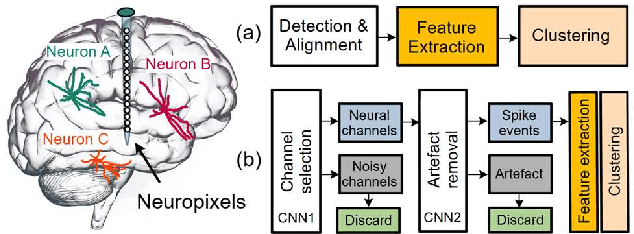

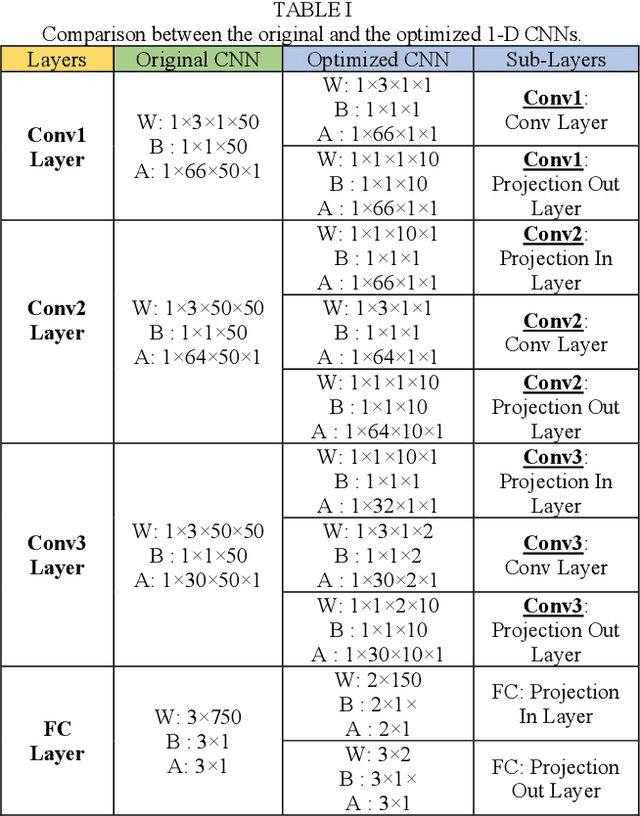

Spike sorting is a valuable tool for understanding brain regions. It assigns detected spike waveforms to their origins, supporting research into the mechanisms of the human brain and the development of implantable brain-machine interfaces (iBMIs). Noise and artefacts adversely affect the efficacy of spike sorting. This paper proposes a framework for low-cost, real-time implementation of deep spike detection, which consists of two one-dimensional (1-D) convolutional neural network (CNN) models for channel selection and artefact removal. The framework comprises simulation and hardware layers and applies several low-power techniques to optimise the implementation cost of a 1-D CNN model. In the simulation layer, structured pruning, network projection and quantization yield a compact CNN model with a memory size of 210 bytes. The hardware layer incorporates a customized multiply-accumulate (MAC) engine, novel fused layers in the convolution pipeline and flexible resource allocation for a power-efficient, low-delay design. The optimized 1-D CNN significantly reduces both computational complexity and model size, with only a minimal reduction in accuracy. On the Cyclone V 5CSEMA5F31C6 FPGA evaluation platform, 1-D CNN classification completes in 16.8 microseconds at a frequency of 2.5 MHz. The FPGA prototype achieves an accuracy of 97.14% on a standard dataset and consumes 2.67 mW from a 1.1 V supply. When deep spike detection is implemented using two optimized 1-D CNNs on an FPGA board, an accuracy of 95.05% is achieved at a power of 5.6 mW.
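To make the quantized multiply-accumulate idea concrete, here is a minimal Python sketch of a single 1-D convolution with a fused ReLU that uses only integer arithmetic. It is not the paper's implementation. The function names, the symmetric 8-bit quantization scheme, the scale of 0.01, and the toy signal and kernel are all assumptions chosen for illustration; the abstract does not state the bit width or the layer shapes.

```python
def quantize(values, scale):
    """Symmetric 8-bit quantization (assumed scheme): round(v / scale), clamped to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def conv1d_relu_int(signal_q, kernel_q, bias_q=0):
    """Valid 1-D convolution (cross-correlation form) with a fused ReLU.

    Every inner-loop step is one integer multiply-accumulate (MAC), the
    operation a hardware MAC engine would execute. Applying the ReLU before
    the value is stored is one simple reading of "fused layers".
    """
    outputs = []
    for start in range(len(signal_q) - len(kernel_q) + 1):
        acc = bias_q  # accumulator, wider than the 8-bit operands
        for k, weight in enumerate(kernel_q):
            acc += signal_q[start + k] * weight  # one MAC
        outputs.append(max(acc, 0))  # ReLU applied before the result is stored
    return outputs


# Hypothetical toy input: a short window containing one spike-like peak.
signal = [0.0, 0.1, 0.9, -0.4, 0.05, 0.0]
kernel = [-0.5, 1.0, -0.5]  # hypothetical peak-emphasising filter

signal_q = quantize(signal, scale=0.01)
kernel_q = quantize(kernel, scale=0.01)
features = conv1d_relu_int(signal_q, kernel_q)
print(features)
```

In this sketch the largest output falls on the window centred on the peak sample. In a real design the accumulator would be rescaled back to 8 bits before the next layer, a step omitted here for brevity.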