A Randomised Subspace Gauss-Newton Method for Nonlinear Least-Squares

Paper and Code

Nov 10, 2022

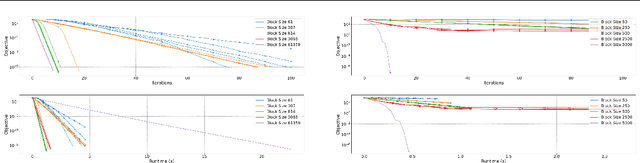

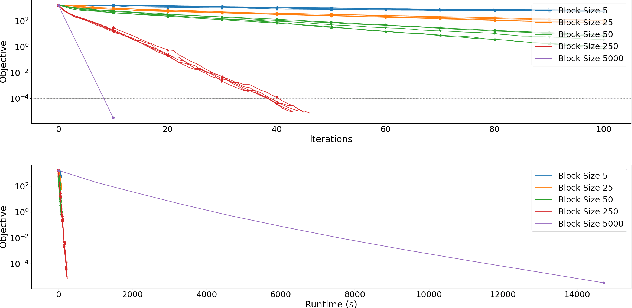

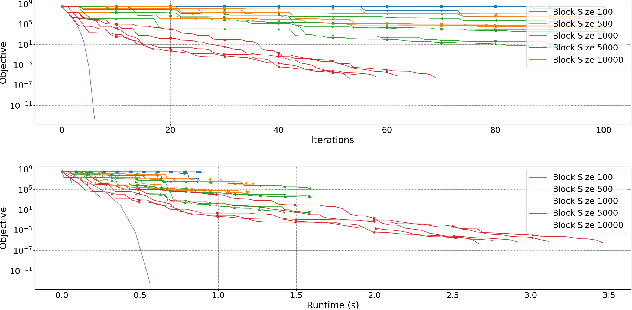

We propose a Randomised Subspace Gauss-Newton (R-SGN) algorithm for solving nonlinear least-squares optimization problems. At each iteration, R-SGN uses a sketched Jacobian of the residual in the variable domain and solves a reduced linear least-squares problem. We present a sublinear global rate of convergence, holding with high probability, for a trust-region variant of R-SGN; this rate matches the corresponding deterministic results in the order of the accuracy tolerance. We also present promising preliminary numerical results for R-SGN on logistic regression and on nonlinear regression problems from the CUTEst collection.
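The iteration described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes a scaled Gaussian sketching matrix `S`, forms the full Jacobian before sketching for readability (a practical code would aim to compute the reduced Jacobian directly), and replaces the trust-region safeguard analysed in the paper with simple step halving. The function name `rsgn`, its parameters, and the toy problem are all hypothetical.

```python
import numpy as np

def rsgn(residual, jacobian, x0, sketch_dim, iters=300, seed=0):
    """Illustrative randomised subspace Gauss-Newton loop (assumed form)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    d = x.size
    for _ in range(iters):
        r = residual(x)                      # residual vector, shape (m,)
        J = jacobian(x)                      # full Jacobian, shape (m, d)
        # Random sketch in the variable domain: S has shape (sketch_dim, d).
        S = rng.standard_normal((sketch_dim, d)) / np.sqrt(sketch_dim)
        JS = J @ S.T                         # reduced Jacobian, shape (m, sketch_dim)
        # Reduced linear least-squares problem: min_s ||r + JS s||_2.
        s_hat, *_ = np.linalg.lstsq(JS, -r, rcond=None)
        step = S.T @ s_hat                   # lift the step back to R^d
        # Step halving (an assumption, standing in for the trust region):
        # accept the first trial point that decreases 0.5 * ||r||^2.
        f = 0.5 * (r @ r)
        t = 1.0
        while t > 1e-8:
            r_trial = residual(x + t * step)
            if 0.5 * (r_trial @ r_trial) < f:
                x = x + t * step
                break
            t *= 0.5
    return x

# Toy problem (hypothetical): solve x_i + 0.1 * x_i**3 = b_i for 10 unknowns.
b = np.linspace(-1.0, 1.0, 10)
toy_residual = lambda x: x + 0.1 * x**3 - b
toy_jacobian = lambda x: np.diag(1.0 + 0.3 * x**2)

x_star = rsgn(toy_residual, toy_jacobian, np.zeros(10), sketch_dim=3)
```

Each iteration here solves a least-squares problem with only `sketch_dim` unknowns instead of `d`, which is the source of the per-iteration saving; the price is that more iterations are typically needed than for full Gauss-Newton.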

* This work first appeared in the Workshop on Beyond First Order Methods in ML Systems at the Thirty-seventh International Conference on Machine Learning, 2020.

https://sites.google.com/view/optml-icml2020/accepted-papers?authuser=0.

arXiv admin note: text overlap with arXiv:2206.03371
