A Probabilistic Approach for Alignment with Human Comparisons

Mar 16, 2024

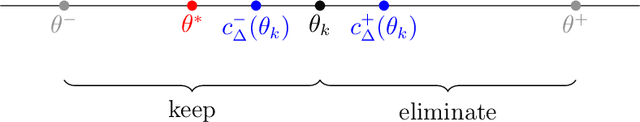

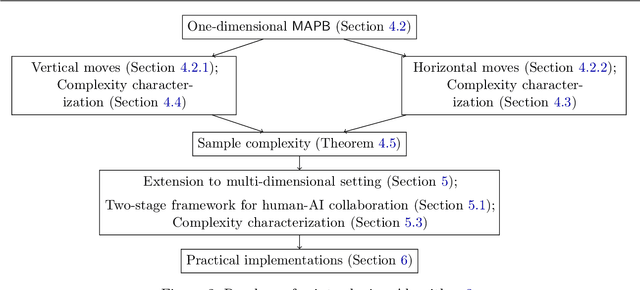

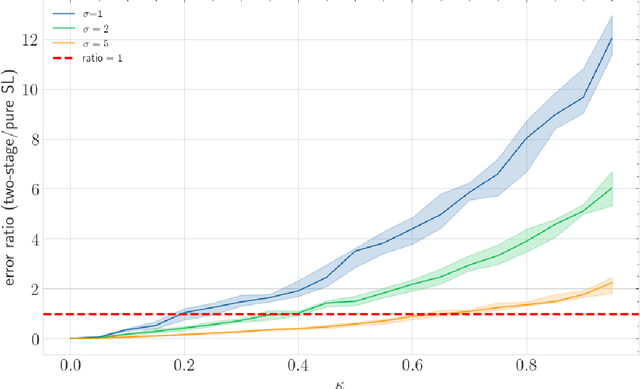

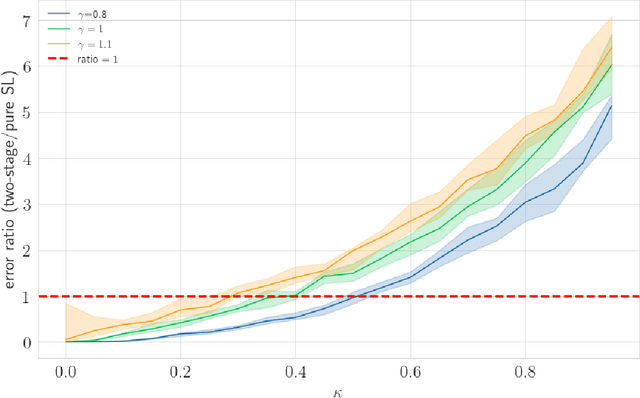

A growing trend involves integrating human knowledge into learning frameworks, leveraging subtle human feedback to refine AI models. Despite these advances, no comprehensive theoretical framework has been developed that describes the specific conditions under which human comparisons improve the traditional supervised fine-tuning process. To bridge this gap, this paper studies the effective use of human comparisons to address limitations arising from noisy data and high-dimensional models. We propose a two-stage "Supervised Fine Tuning+Human Comparison" (SFT+HC) framework connecting machine learning with human feedback through a probabilistic bisection approach. The two-stage framework first learns low-dimensional representations from noisy-labeled data via an SFT procedure, and then uses human comparisons to improve the model alignment. To examine the efficacy of the alignment phase, we introduce a novel concept termed the "label-noise-to-comparison-accuracy" (LNCA) ratio. This paper theoretically identifies the conditions under which the SFT+HC framework outperforms a pure SFT approach, leveraging this ratio to highlight the advantage of incorporating human evaluators in reducing sample complexity. We validate that the proposed conditions for the LNCA ratio are met in a case study conducted via an Amazon Mechanical Turk experiment.
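
The abstract does not spell out the bisection procedure, so the following is only a rough illustration of the general idea behind probabilistic bisection, not the paper's SFT+HC algorithm. It sketches the textbook one-dimensional version: a hidden scalar target in [0, 1] is located by repeatedly querying a comparison oracle at the posterior median and applying a Bayes update. The names `probabilistic_bisection`, `make_noisy_oracle`, and `p_correct`, the discretized grid, and the simulated oracle standing in for a human evaluator are all assumptions made for this sketch.

```python
import numpy as np


def probabilistic_bisection(oracle, p_correct, n_queries, grid_size=1001):
    """Estimate a hidden target in [0, 1] from noisy comparisons.

    oracle(query) returns True if it judges the target to lie to the
    right of `query`; each answer is assumed correct with probability
    `p_correct` (hypothetical stand-in for comparison accuracy).
    """
    grid = np.linspace(0.0, 1.0, grid_size)
    posterior = np.full(grid_size, 1.0 / grid_size)  # uniform prior

    def median_index(p):
        # First grid index whose cumulative mass reaches one half.
        idx = int(np.searchsorted(np.cumsum(p), 0.5))
        return min(idx, grid_size - 1)

    for _ in range(n_queries):
        query = grid[median_index(posterior)]
        says_right = oracle(query)

        # Bayes update: up-weight the side the oracle pointed to.
        is_right = grid > query
        if says_right:
            likelihood = np.where(is_right, p_correct, 1.0 - p_correct)
        else:
            likelihood = np.where(is_right, 1.0 - p_correct, p_correct)
        posterior = posterior * likelihood
        posterior /= posterior.sum()

    return grid[median_index(posterior)]


def make_noisy_oracle(target, p_correct, rng):
    """Simulated evaluator: tells the truth with probability p_correct."""
    def oracle(query):
        truth = bool(target > query)
        return truth if rng.random() < p_correct else (not truth)
    return oracle


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    oracle = make_noisy_oracle(target=0.3137, p_correct=0.8, rng=rng)
    print(probabilistic_bisection(oracle, p_correct=0.8, n_queries=200))
```

Querying at the posterior median means each answer is as informative as possible under this simple noise model, which is why the number of comparisons needed depends on how accurate the evaluator is. In this toy setting `p_correct` plays that role; how it relates to the LNCA ratio defined in the paper is not something this sketch attempts to capture.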
