A Novel Neural Sequence Model with Multiple Attentions for Word Sense Disambiguation


Sep 04, 2018

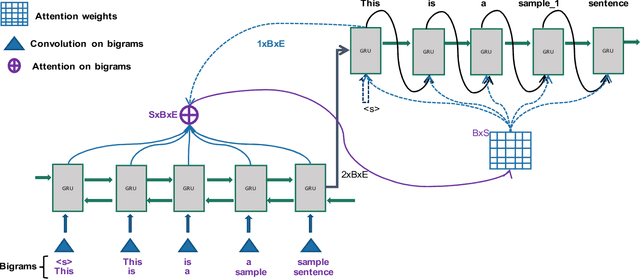

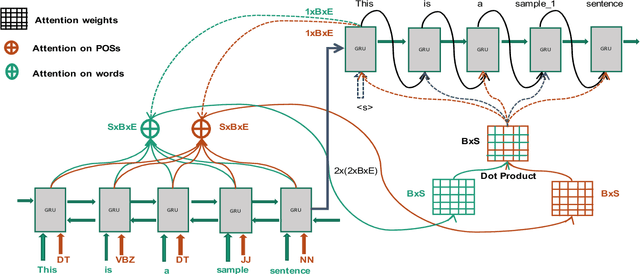

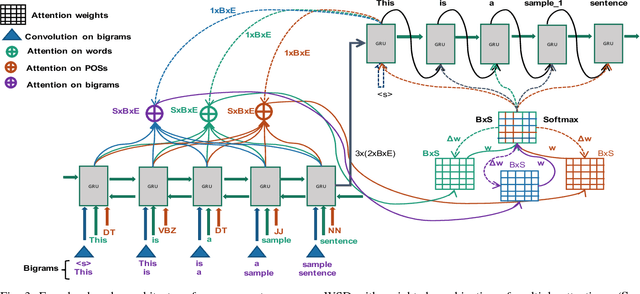

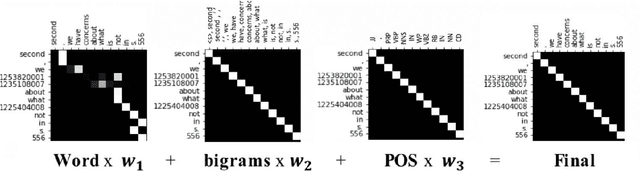

Word sense disambiguation (WSD) is a well-researched problem in computational linguistics, and different research works have approached it in different ways. In terms of accuracy, some state-of-the-art results for this problem have been achieved by supervised models, but these models often fall behind flexible knowledge-based solutions, which use engineered features as well as human annotators to disambiguate every target word. This work focuses on bridging this gap using neural sequence models that incorporate the well-known attention mechanism. The core idea of our work is to combine multiple attentions over different linguistic features through weights, and to provide a unified framework for doing so. This weighted attention allows the model to disambiguate the sense of an ambiguous word by attending over a suitable portion of the sentence. Our extensive experiments show that multiple attentions make the encoder-decoder model more versatile, leading to state-of-the-art results.
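The abstract does not give the exact formulation of the weighted multiple attention. The sketch below shows one plausible reading under stated assumptions: each linguistic feature view (here, hypothetical word-embedding and POS views) produces its own scaled dot-product attention distribution over the sentence, and the per-view distributions are mixed with softmax-normalised feature weights. The function names (`attend`, `combine`, `context`), the feature views and all toy vectors are illustrative only and are not taken from the authors' model.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(query: Vector, keys: Sequence[Vector]) -> List[float]:
    """Scaled dot-product attention of one query over the sentence tokens."""
    scale = math.sqrt(len(query))
    return softmax([dot(query, k) / scale for k in keys])


def combine(distributions: Sequence[List[float]],
            feature_logits: Sequence[float]) -> List[float]:
    """Mix per-feature attention distributions with softmax-normalised weights.

    A convex combination of probability distributions is itself a
    distribution, so the result still sums to 1 over sentence positions.
    """
    w = softmax(feature_logits)
    n_tokens = len(distributions[0])
    return [sum(w[f] * dist[i] for f, dist in enumerate(distributions))
            for i in range(n_tokens)]


def context(alpha: Sequence[float], values: Sequence[Vector]) -> Vector:
    """Weighted sum of token vectors under the combined attention."""
    dim = len(values[0])
    return [sum(a * v[j] for a, v in zip(alpha, values)) for j in range(dim)]


# Toy three-token sentence seen through two hypothetical feature views.
word_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pos_keys = [[0.5, 0.5], [1.0, 0.0], [0.0, 0.0]]
word_query = [1.0, 0.0]  # hypothetical target-word vector, word view
pos_query = [0.0, 1.0]   # hypothetical target-word vector, POS view

dists = [attend(word_query, word_keys), attend(pos_query, pos_keys)]
alpha = combine(dists, feature_logits=[0.3, -0.3])  # hypothetical weights
ctx = context(alpha, word_keys)
print([round(a, 3) for a in alpha], [round(c, 3) for c in ctx])
```

Normalising the feature weights with a softmax keeps the mixed attention a valid distribution regardless of the raw weight values; in a trained model those logits would presumably be learned parameters, which is an assumption here rather than a detail stated in the abstract.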
