A novel Deep Neural Network architecture for non-linear system identification

Jun 06, 2021

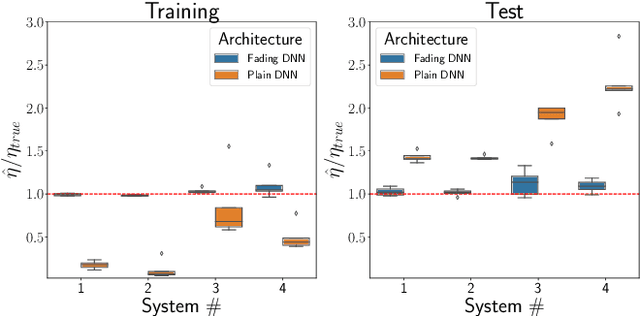

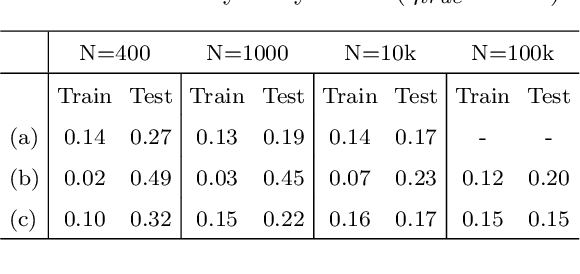

We present a novel Deep Neural Network (DNN) architecture for non-linear system identification. We foster generalization by constraining the DNN's representational power. To do so, inspired by fading memory systems, we introduce inductive bias (on the architecture) and regularization (on the loss function). This architecture allows for automatic complexity selection based solely on the available data, which reduces the number of hyper-parameters that the user must choose. Exploiting the highly parallelizable DNN framework (based on stochastic optimization methods), we successfully apply our method to large-scale datasets.
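The abstract does not spell out the regularizer, so the following is only a minimal sketch of one way a fading-memory penalty on the loss could look, not the authors' method. It assumes a first layer whose weights are indexed by input lag (lag 1 is the most recent sample) and penalizes weights on older lags more heavily, so the fitted model is pushed to forget the distant past. The function names `fading_memory_penalty` and `regularized_loss`, and the parameters `decay` and `lam`, are hypothetical.

```python
def fading_memory_penalty(weights, decay=0.8):
    """Weighted L2 penalty over lagged-input weights (illustrative assumption).

    weights: list of rows, one per hidden unit; entry k-1 of a row is the
             weight applied to the input delayed by k steps.
    decay:   value in (0, 1); the squared weight on lag k is divided by
             decay**k, so older lags are penalized more strongly.
    """
    if not 0.0 < decay < 1.0:
        raise ValueError("decay must lie in (0, 1)")
    total = 0.0
    for row in weights:
        for k, w in enumerate(row, start=1):
            total += (w * w) / (decay ** k)
    return total


def regularized_loss(predictions, targets, weights, lam=0.01, decay=0.8):
    """Mean squared error plus lam times the fading-memory penalty."""
    n = len(targets)
    mse = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n
    return mse + lam * fading_memory_penalty(weights, decay)
```

In this sketch, `decay` controls how quickly the influence of past inputs is forced to vanish; treating it as a quantity tuned from data rather than fixed by hand would be one route to the data-driven complexity selection the abstract mentions, though that link is an assumption here.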
