A Novel Automation-Assisted Cervical Cancer Reading Method Based on Convolutional Neural Network

Dec 14, 2019

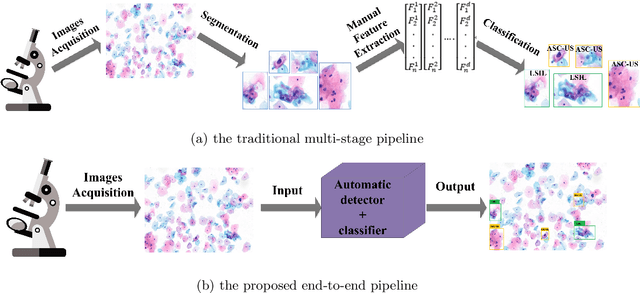

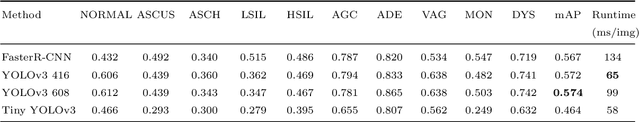

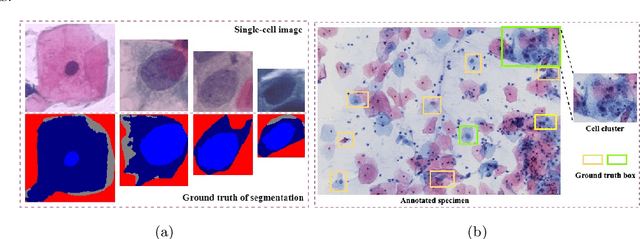

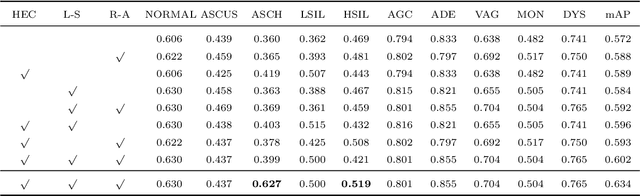

Although most previous automation-assisted reading methods improve efficiency, their performance often depends on accurate cell segmentation and hand-crafted feature extraction. This paper presents an efficient, fully segmentation-free method for automated cervical cell screening that uses a modern object detector to detect cervical cells or clumps directly, without designing task-specific hand-crafted features. Specifically, we use YOLOv3, a state-of-the-art CNN-based object detector, as our baseline model. To improve classification of hard examples, which belong to four highly similar categories, we cascade an additional task-specific classifier. We also investigate the presence of unreliable annotations and cope with them by smoothing the distribution of noisy labels. We comprehensively evaluate our method on a test set of 1,014 annotated cervical cell images of size 4000×3000, which show complex cellular conditions and cover 10 categories. Our model achieves 97.5% sensitivity (Sens) and 67.8% specificity (Spec) on image-level cervical cell screening. Moreover, we obtain a mean Average Precision (mAP) of 63.4% on cell-level diagnosis and improve the Average Precision (AP) on the hard examples, which are clinically valuable but difficult to distinguish. Our automation-assisted cervical cell reading method not only classifies cervical cell images at the image level but also provides the location and category of each abnormal cell. The results indicate that the method is feasible, efficient, and robust, and they suggest a new direction for computer-assisted reading systems in clinical cervical screening.
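
Two ideas in the abstract can be made concrete with a small sketch: smoothing the distribution of noisy labels, and the image-level sensitivity and specificity used for screening. The code below is only an illustration under stated assumptions. It uses standard uniform label smoothing, which may differ from the scheme in the paper, and the function names, the `epsilon` value, and the toy predictions are hypothetical rather than taken from the authors' code.

```python
def smooth_labels(class_index, num_classes, epsilon=0.1):
    """Return a smoothed target distribution for one annotated cell.

    Assumption: uniform label smoothing. The annotated class keeps
    1 - epsilon of the probability mass (plus its uniform share), and
    epsilon is spread evenly over all classes.
    """
    if not 0 <= class_index < num_classes:
        raise ValueError("class_index out of range")
    uniform_share = epsilon / num_classes
    target = [uniform_share] * num_classes
    target[class_index] += 1.0 - epsilon
    return target


def sensitivity_specificity(y_true, y_pred):
    """Image-level screening metrics, with 1 = abnormal and 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    # Sensitivity: fraction of abnormal images that are flagged.
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    # Specificity: fraction of normal images that are cleared.
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec


if __name__ == "__main__":
    # Hypothetical example: class 2 out of 10 categories.
    print(smooth_labels(2, 10, epsilon=0.1))
    # Hypothetical image-level labels and predictions.
    print(sensitivity_specificity([1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 0, 1, 1]))
```

A screening system typically favors high sensitivity over specificity, since a missed abnormal image is costlier than a false alarm that a cytologist can review; the reported 97.5% Sens and 67.8% Spec are consistent with that trade-off.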
