A Multi-Modal Federated Learning Framework for Remote Sensing Image Classification

Mar 13, 2025

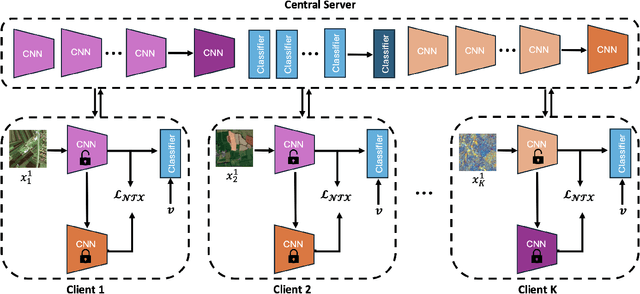

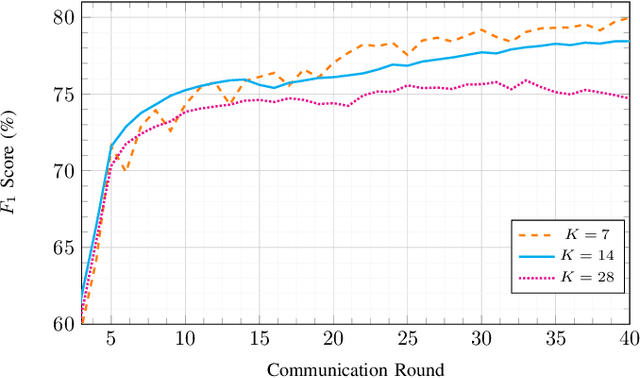

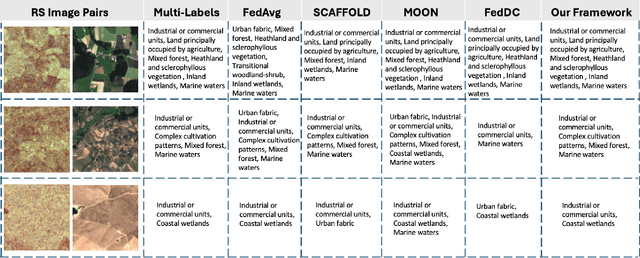

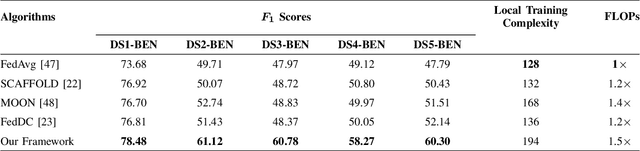

Federated learning (FL) enables the collaborative training of deep neural networks across decentralized data archives (i.e., clients) without sharing the clients' local data. Most existing FL methods assume that the data distributed across all clients is associated with the same data modality. However, RS images held by different clients can be associated with diverse data modalities, and the joint use of multi-modal remote sensing (RS) data can significantly enhance classification performance. To effectively exploit decentralized and unshared multi-modal RS data, our paper introduces a novel multi-modal FL framework for RS image classification problems. The proposed framework comprises three modules: 1) multi-modal fusion (MF); 2) feature whitening (FW); and 3) mutual information maximization (MIM). The MF module employs iterative model averaging to facilitate learning without accessing multi-modal training data on clients. The FW module aims to address the limitations caused by training data heterogeneity by aligning data distributions across clients. The MIM module aims to model mutual information by maximizing the similarity between images from different modalities. In the experimental analyses, we focus on multi-label classification and pixel-based classification tasks in RS. The results obtained on two benchmark archives show the effectiveness of the proposed framework compared to state-of-the-art algorithms in the literature. The code of the proposed framework will be available at https://git.tu-berlin.de/rsim/multi-modal-FL.
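To make the notion of iterative model averaging concrete, the following is a minimal sketch of one generic aggregation step in the style of federated averaging: a server combines client parameters, weighted by local training-set size, without ever seeing client data. It is not the paper's MF module. The function name `average_models`, the `Params` type, and the flat-list parameter layout are assumptions made purely for illustration.

```python
from typing import Dict, List

# Hypothetical parameter layout: layer name -> flat list of weights.
Params = Dict[str, List[float]]


def average_models(client_params: List[Params], client_sizes: List[int]) -> Params:
    """Return the size-weighted average of the clients' parameters.

    Generic federated-averaging step (an illustrative assumption, not the
    aggregation rule of the proposed framework).
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    averaged: Params = {}
    for name in client_params[0]:
        length = len(client_params[0][name])
        # Weight each client's value by its number of local samples.
        averaged[name] = [
            sum(p[name][i] * n for p, n in zip(client_params, client_sizes)) / total
            for i in range(length)
        ]
    return averaged


if __name__ == "__main__":
    # Two hypothetical clients holding 1 and 3 local samples.
    clients = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
    print(average_models(clients, [1, 3]))
```

In an iterative scheme, a step like this would be repeated every communication round: clients train locally starting from the averaged parameters, send their updated parameters back, and the server averages again.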
