A Memory-Efficient Dynamic Image Reconstruction Method using Neural Fields

Paper and Code

May 11, 2022

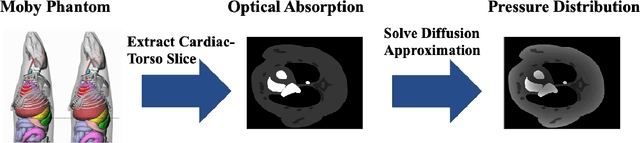

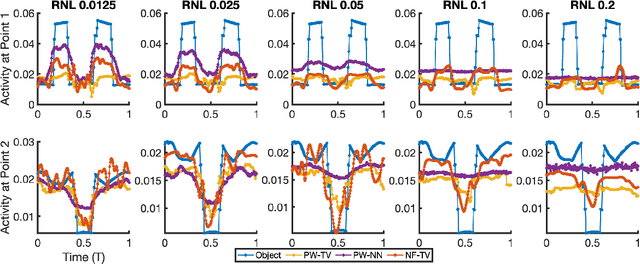

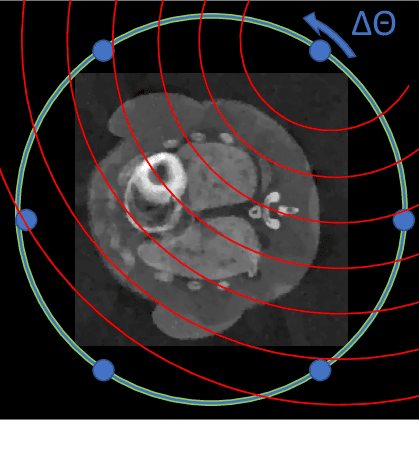

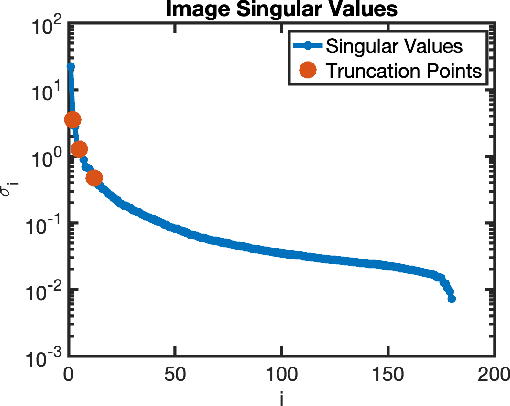

Dynamic imaging is essential for analyzing various biological systems and behaviors but faces two main challenges: data incompleteness and computational burden. For many imaging systems, high frame rates and short acquisition times require severe undersampling, which leads to data incompleteness. Multiple images may then be compatible with the data, so special techniques (regularization) are required to ensure the uniqueness of the reconstruction. Computational and memory requirements are particularly burdensome for three-dimensional dynamic imaging applications that require high resolution in both space and time. Exploiting redundancies in the object's spatiotemporal features is key to addressing both challenges. This contribution investigates neural fields, also known as implicit neural representations, to model the sought-after dynamic object. Neural fields are a particular class of neural networks that represent the dynamic object as a continuous function of space and time, which avoids the burden of storing a full-resolution image at each time frame. With this representation, the image reconstruction problem reduces to estimating the network parameters by solving a nonlinear optimization problem (training). Once trained, the neural field can be evaluated at arbitrary locations in space and time, allowing for high-resolution rendering of the object. The key advantage of the proposed approach is that neural fields automatically learn and exploit redundancies in the sought-after object, which both regularizes the reconstruction and significantly reduces memory storage requirements. The feasibility of the proposed framework is illustrated with an application to dynamic image reconstruction from severely undersampled circular Radon transform data.
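To make the memory argument concrete, the following is a minimal sketch of a neural field: a small fully connected network that maps a space-time point (x, y, t) to an image value. It is not the authors' implementation. The Fourier feature encoding, the tanh activations, the layer sizes, and the 256 x 256 x 100 comparison grid are all assumptions chosen for illustration, and the weights are random rather than trained.

```python
import math
import random


def fourier_features(x, y, t, num_freqs=4):
    """Encode a space-time point with sines and cosines at dyadic frequencies.

    The encoding is an assumption of this sketch, not taken from the paper.
    """
    feats = []
    for coord in (x, y, t):
        for k in range(num_freqs):
            w = (2.0 ** k) * math.pi
            feats.append(math.sin(w * coord))
            feats.append(math.cos(w * coord))
    return feats


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for one dense layer with small random weights."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def init_field(hidden=32, depth=3, num_freqs=4, seed=0):
    """Build the parameter list of a hypothetical neural field (untrained)."""
    rng = random.Random(seed)
    # Input size: 3 coordinates, each with a sin and a cos per frequency.
    sizes = [3 * 2 * num_freqs] + [hidden] * depth + [1]
    return [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]


def evaluate(params, x, y, t, num_freqs=4):
    """Evaluate the field at one continuous space-time location.

    num_freqs must match the value used in init_field.
    """
    h = fourier_features(x, y, t, num_freqs)
    for i, (weights, biases) in enumerate(params):
        h = [sum(w * v for w, v in zip(row, h)) + b
             for row, b in zip(weights, biases)]
        if i < len(params) - 1:  # no activation on the output layer
            h = [math.tanh(v) for v in h]
    return h[0]


def count_params(params):
    """Total number of scalar parameters stored by the field."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in params)


params = init_field()
n_params = count_params(params)

# Hypothetical explicit representation: 256 x 256 pixels, 100 time frames.
n_grid_values = 256 * 256 * 100

# The field can be queried at any location, including between grid points
# and between time frames.
value = evaluate(params, 0.3141, 0.2718, 0.5)

print("neural field parameters:", n_params)
print("explicit grid values:   ", n_grid_values)
print("field value at (0.3141, 0.2718, 0.5):", value)
```

In this toy configuration the field stores a few thousand parameters, while the explicit grid stores about 6.5 million values. In an actual reconstruction the parameters would be estimated by minimizing a data-misfit loss on the measurements, which this sketch does not attempt, and the network size needed to represent a real object would depend on how complex that object is.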
