A Machine Learning Approach to Sensor Substitution for Non-Prehensile Manipulation

Paper and Code

Feb 13, 2025

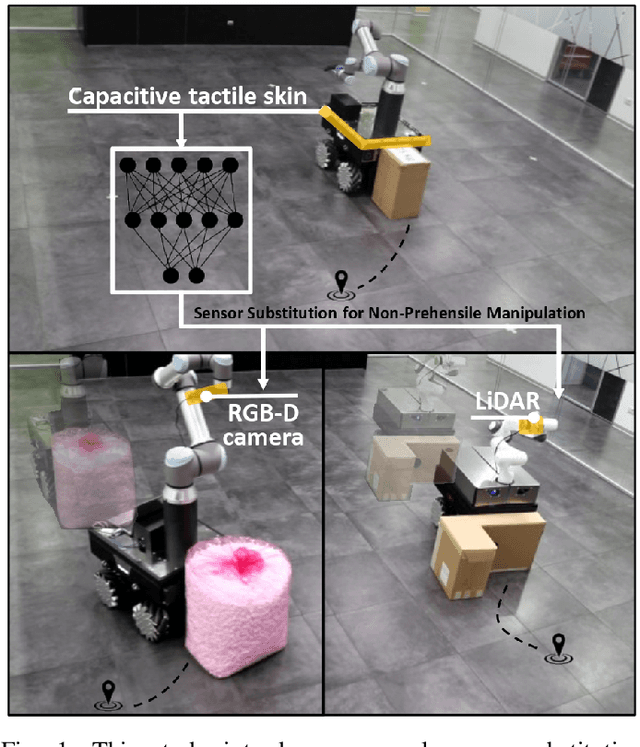

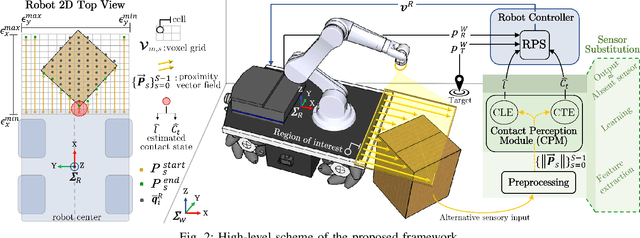

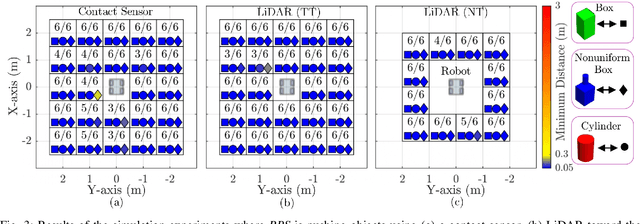

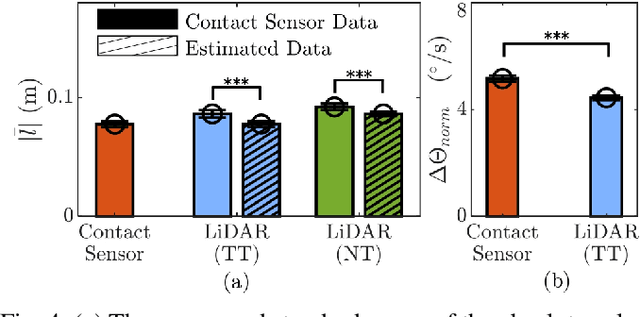

Mobile manipulators are increasingly deployed in complex environments, where they need diverse sensors to perceive and interact with their surroundings. However, equipping every robot with every possible sensor is often impractical due to cost and physical constraints. A critical challenge arises when robots with differing sensor capabilities need to collaborate or perform similar tasks. For example, consider a mobile manipulator equipped with high-resolution tactile skin that is skilled at non-prehensile manipulation tasks such as pushing. If this robot must be replaced or augmented by a robot lacking such tactile sensing, the learned manipulation policies become inapplicable. This paper addresses the problem of sensor substitution in non-prehensile manipulation. We propose a novel machine learning-based framework that enables a robot with a limited sensor set (e.g., LiDAR or an RGB-D camera) to perform tasks that previously relied on a richer sensor suite (e.g., tactile skin). Our approach learns a mapping from the available sensor data to the information provided by the substituted sensor, effectively synthesizing the missing sensory input. Specifically, we demonstrate the efficacy of our framework by training a model that substitutes for tactile skin data in a non-prehensile pushing task performed by a mobile manipulator. We show that a manipulator equipped only with LiDAR or RGB-D can, after training, achieve pushing performance comparable to, and sometimes better than, that of a mobile base using direct tactile feedback.
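To make the idea of learning a mapping from an available sensor to a substituted one more concrete, here is a minimal sketch. It is not the authors' model: the abstract does not specify an architecture, so a plain linear regressor trained by batch gradient descent stands in for it. The data are synthetic, and every name (`fit_linear_map`, `synthesize`, `TRUE_W`, three range readings, two taxels) is a hypothetical choice made for illustration.

```python
import random


def fit_linear_map(inputs, targets, lr=0.5, epochs=1000):
    """Fit targets ~ W . [inputs, 1] by batch gradient descent on squared error."""
    n_in, n_out = len(inputs[0]) + 1, len(targets[0])
    weights = [[0.0] * n_in for _ in range(n_out)]
    rows = [list(x) + [1.0] for x in inputs]  # append a constant bias feature
    for _ in range(epochs):
        grads = [[0.0] * n_in for _ in range(n_out)]
        for x, y in zip(rows, targets):
            for k in range(n_out):
                err = sum(w * xi for w, xi in zip(weights[k], x)) - y[k]
                for j in range(n_in):
                    grads[k][j] += err * x[j] / len(rows)
        for k in range(n_out):
            for j in range(n_in):
                weights[k][j] -= lr * grads[k][j]
    return weights


def synthesize(weights, x):
    """Predict the missing sensor's reading from one available-sensor sample."""
    row = list(x) + [1.0]
    return [sum(w * xi for w, xi in zip(wk, row)) for wk in weights]


random.seed(0)
# Hypothetical ground-truth relation between 3 range readings and 2 taxels.
TRUE_W = [[0.8, -0.3, 0.1, 0.2], [-0.5, 0.4, 0.6, 0.0]]


def make_sample():
    """Draw one synthetic (range readings, noisy tactile readings) pair."""
    ranges = [random.uniform(0.0, 1.0) for _ in range(3)]
    taxels = [
        sum(w * xi for w, xi in zip(wk, ranges + [1.0])) + random.gauss(0.0, 0.01)
        for wk in TRUE_W
    ]
    return ranges, taxels


train = [make_sample() for _ in range(100)]
held_out = [make_sample() for _ in range(50)]
weights = fit_linear_map([x for x, _ in train], [y for _, y in train])

# Compare synthesized tactile readings with the true ones on unseen samples.
sq_errs = [
    (p - t) ** 2
    for x, y in held_out
    for p, t in zip(synthesize(weights, x), y)
]
rmse = (sum(sq_errs) / len(sq_errs)) ** 0.5
print(f"held-out RMSE of synthesized tactile signal: {rmse:.4f}")
```

In a real system the synthesized signal would feed the pushing policy that was originally trained on tactile input. Actual LiDAR or RGB-D data would likely need a nonlinear model, which this sketch does not attempt.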
