A Linear Bandit for Seasonal Environments


Apr 28, 2020

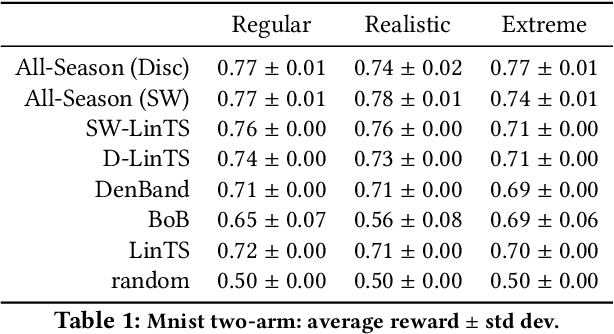

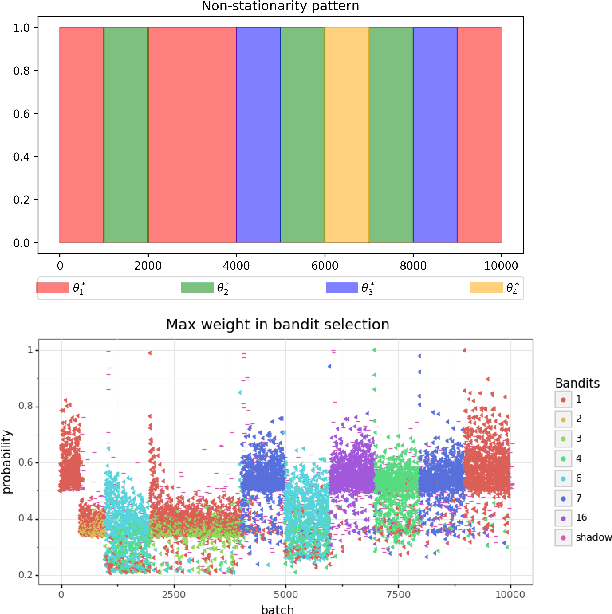

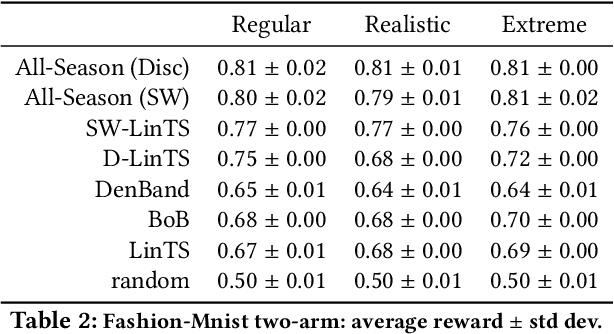

Contextual bandit algorithms are widely used in recommendation systems to provide online personalised recommendations. A recurrent assumption is that the reward function is stationary, which is unrealistic in most real-world applications. In music recommendation, for instance, listeners' tastes can change abruptly during certain events, such as Halloween or Christmas, and revert to their previous state soon after. We therefore need an algorithm that reacts promptly to these changes. Moreover, we would like to leverage rewards already observed during earlier stationary periods, which can potentially reoccur, without restarting the learning process from scratch. A growing literature has addressed reward non-stationarity, providing algorithms that adapt quickly to a changing environment. However, to the best of our knowledge, no existing algorithm deals with seasonal changes of the reward function. Here we present a contextual bandit algorithm that detects and adapts to abrupt changes of the reward function and reuses previous estimates whenever the environment falls back to a previously observed state. We show that the proposed method can outperform state-of-the-art algorithms for non-stationary environments. We run experiments on both synthetic and real datasets.
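
The detect-and-reuse idea described above can be illustrated with a minimal sketch. This is not the paper's algorithm: it assumes a simple residual-threshold change detector and a pool of ridge-regression reward models, and it leaves out arm selection and exploration entirely. The names `RidgeModel` and `SeasonalModelPool` and the parameters `threshold` and `patience` are hypothetical, chosen only for illustration.

```python
import numpy as np


class RidgeModel:
    """Ridge-regression estimate of a linear reward r ~ x . theta."""

    def __init__(self, dim, lam=0.1):
        self.A = lam * np.eye(dim)   # regularised Gram matrix
        self.b = np.zeros(dim)       # reward-weighted sum of contexts

    def update(self, x, r):
        self.A += np.outer(x, x)
        self.b += r * x

    def predict(self, x):
        return float(x @ np.linalg.solve(self.A, self.b))


class SeasonalModelPool:
    """Keeps one reward model per observed regime (illustrative assumption).

    A change is declared after `patience` consecutive observations whose
    absolute prediction error under the active model exceeds `threshold`.
    """

    def __init__(self, dim, threshold=0.5, patience=5):
        self.dim = dim
        self.threshold = threshold
        self.patience = patience
        self.models = []     # one model per regime seen so far
        self.active = None   # index of the model currently in use
        self.buffer = []     # recent observations the active model misfits

    def _mean_error(self, model):
        return np.mean([abs(r - model.predict(x)) for x, r in self.buffer])

    def observe(self, x, r):
        current = self.models[self.active] if self.active is not None else None
        if current is not None and abs(r - current.predict(x)) <= self.threshold:
            # Observation agrees with the active model: keep learning with it.
            self.buffer.clear()
            current.update(x, r)
            return
        self.buffer.append((x, r))
        if len(self.buffer) < self.patience:
            return
        # Change declared: reuse a stored model if one explains the buffer,
        # otherwise start a new model for the unseen regime.
        errors = [self._mean_error(m) for m in self.models]
        if errors and min(errors) <= self.threshold:
            self.active = int(np.argmin(errors))
        else:
            self.models.append(RidgeModel(self.dim))
            self.active = len(self.models) - 1
        for xb, rb in self.buffer:
            self.models[self.active].update(xb, rb)
        self.buffer.clear()
```

In this sketch the active model is updated only with observations it already explains, so data from a new regime does not contaminate the estimate stored for the old one. When the old regime returns, the stored model can be picked up again rather than relearned.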
